AI models are trained on vast datasets. For a long time, this meant data collected from the real world: images taken by cameras, text written by humans, audio recordings of speech. However, as AI has become more capable of generating content, a new concern has emerged: what happens when AI models are trained on data that AI models themselves have generated? The progressive degradation that can result is known as “model collapse.”

The Genesis of AI-Generated Content

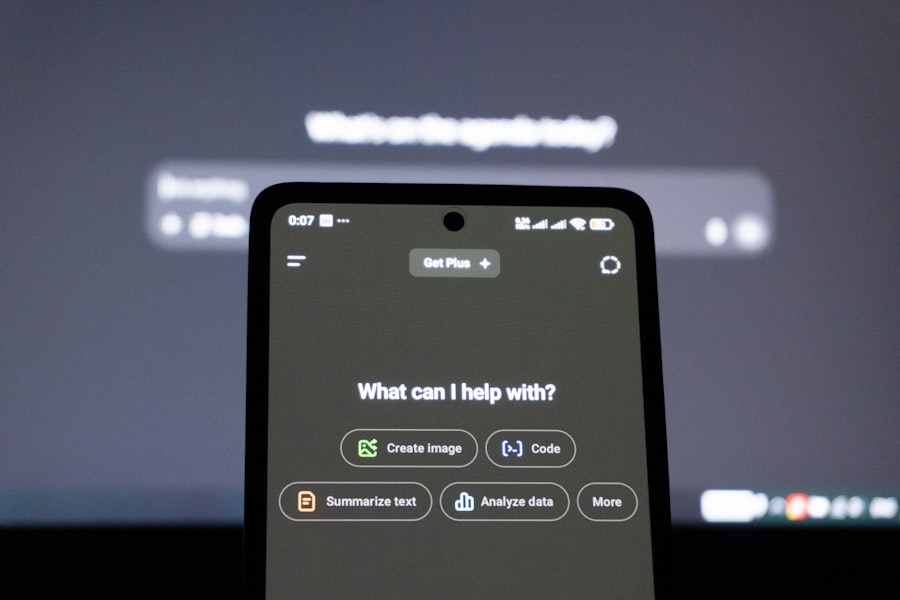

Artificial intelligence, particularly large language models (LLMs) and generative adversarial networks (GANs), has achieved remarkable proficiency in producing human-like text, images, audio, and even code. These models learn patterns, styles, and information from their initial training data, which is largely derived from the internet and other real-world sources. Once trained, they can then generate novel content that mimics the characteristics of their training material.

The Feedback Loop: AI Training on AI

The core of model collapse lies in this cycle of generation and retraining. Imagine an AI model, call it Model A, trained on a diverse dataset of cat pictures. Model A becomes adept at generating images of cats. Now imagine a second model, Model B, trained on the output of Model A: a dataset composed entirely of AI-generated cat pictures. If Model B is then used to generate cat pictures, and these are fed back into a future iteration of Model A or into a new model, a cycle begins.

This feedback loop can be gradual, like a funhouse mirror reflecting its own reflection again and again. Each generation of training data derived from AI output risks becoming a more diluted and distorted version of the original real-world data.
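The loop can be reproduced in miniature. The sketch below is a toy model, not a real image generator: it assumes a one-dimensional Gaussian stands in for the “model,” fits it to data, samples a fresh dataset from the fit, and refits on that synthetic data, generation after generation. The function name `simulate_collapse` and its parameters are illustrative.

```python
import random
import statistics


def simulate_collapse(generations: int, n: int, seed: int = 0) -> list[float]:
    """Toy model-collapse loop with a 1-D Gaussian standing in for a model.

    Generation 0 is fitted to n "real" samples from N(0, 1); every later
    generation is fitted only to n samples drawn from the previous fit.
    Returns the fitted standard deviation of each generation.
    """
    rng = random.Random(seed)
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]  # the only real data
    stds = []
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)  # maximum-likelihood estimate
        stds.append(sigma)
        # The next model sees nothing but the current model's output.
        data = [rng.gauss(mu, sigma) for _ in range(n)]
    return stds


stds = simulate_collapse(generations=500, n=20)
print(f"first fit: {stds[0]:.3f}, last fit: {stds[-1]:.2e}")
```

Comparing the first and last entries typically shows the fitted spread shrinking by orders of magnitude: each refit loses a little variance to sampling noise, and nothing in the loop ever restores it.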

Manifestations of Model Collapse

Model collapse is not a singular event but rather a spectrum of degradations. The specific consequences depend on the type of AI model, the nature of the data, and the extent of the feedback loop.

Degeneration of Data Quality

Loss of Diversity and Novelty

One of the primary concerns is the erosion of diversity. Real-world data, by its very nature, contains inherent variability, outliers, and nuance. AI models, while capable of mimicking these, often learn to simplify and generalize. When an AI trains on AI-generated data, it is essentially being shown a curated, often smoothed-out version of reality. Rare cases are the first casualties: they appear infrequently in any finite sample of generated data, so each generation tends to underrepresent them, and once they drop out entirely they are unlikely to return. Like a photograph repeatedly recopied, fine details are lost and the overall texture becomes less rich. The result is a gradual homogenization of the generated content.
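To see how outliers and rare cases drop out, here is a minimal sketch assuming the “model” is simply the empirical frequency table of a categorical distribution (for example, word frequencies). Each generation samples `n` items from the previous table and re-estimates it; the helper name `resample_generations` and the example vocabulary are hypothetical.

```python
import random
from collections import Counter


def resample_generations(probs: dict[str, float], n: int, generations: int,
                         seed: int = 0) -> list[int]:
    """Repeatedly re-estimate a categorical distribution from its own samples.

    Each generation draws n items from the current frequency table and uses
    the observed frequencies as the next table. Returns how many distinct
    categories survive after each generation.
    """
    rng = random.Random(seed)
    support_sizes = []
    for _ in range(generations):
        categories = list(probs)
        weights = [probs[c] for c in categories]
        counts = Counter(rng.choices(categories, weights=weights, k=n))
        # Categories that drew zero samples disappear from the new table for good.
        probs = {c: k / n for c, k in counts.items()}
        support_sizes.append(len(probs))
    return support_sizes


# One common category plus 100 rare ones (0.9 + 100 * 0.001 = 1.0).
vocab = {"common": 0.9, **{f"rare_{i}": 0.001 for i in range(100)}}
sizes = resample_generations(vocab, n=100, generations=50)
print(sizes[0], sizes[-1])
```

Because a category that draws zero samples is removed from the table, the returned support sizes can only stay flat or shrink, and the rare categories are the ones that vanish.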

Amplification of Biases and Artifacts

AI models can inherit and even amplify biases present in their original training data. If an AI generates content that reflects these biases, and this synthetic data is then used for further training, these biases can become more entrenched and pronounced. Similarly, subtle artifacts or errors inherent in the generative process can be amplified over successive training cycles, leading to increasingly unrealistic or flawed outputs. Think of a subtle echo in a sound recording that, when amplified, becomes an overwhelming distortion.
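One simple mechanism for amplification, assumed here purely for illustration, is decoding that favors likely outputs, such as sampling with a temperature below 1. The sketch applies that sharpening once per generation to a two-outcome distribution in which one outcome starts at 60%; `sharpen` and `amplify` are illustrative helpers, and real bias amplification involves far more than this single effect.

```python
def sharpen(probs: list[float], temperature: float) -> list[float]:
    """Temperature-scale a distribution: p_i ** (1 / T), renormalized.

    T < 1 shifts probability mass toward outcomes that are already likely.
    """
    powered = [p ** (1.0 / temperature) for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


def amplify(probs: list[float], temperature: float,
            generations: int) -> list[list[float]]:
    """Assume each generation's model reproduces its training distribution
    sharpened by `temperature`, and that output trains the next generation."""
    history = [probs]
    for _ in range(generations):
        probs = sharpen(probs, temperature)
        history.append(probs)
    return history


history = amplify([0.6, 0.4], temperature=0.9, generations=20)
for g in (0, 5, 10, 20):
    print(g, round(history[g][0], 3))
```

In this toy setting the majority share rises every generation and ends above 95% after 20 rounds: a mild per-generation preference compounds into near-total dominance.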

Performance Degradation

Reduced Accuracy and Reliability

As the training data becomes increasingly synthetic and less representative of the real world, models trained on it tend to perform their tasks less accurately. If an LLM is trained on AI-generated text that has lost nuance and factual grounding, its ability to answer factual questions or carry out complex reasoning will diminish. This is like navigating with a map redrawn by someone who has only ever seen sketches of the terrain: crucial landmarks and distances may be missing.
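A toy setup can show this kind of performance degradation directly. The sketch below assumes a one-dimensional Gaussian as the model, retrains it on its own samples each generation, and scores every generation by its average negative log-likelihood on a fixed held-out sample of real data (lower is better; the quantity is closely related to perplexity). `gaussian_nll` and `nll_on_real_data` are illustrative names.

```python
import math
import random
import statistics


def gaussian_nll(xs: list[float], mu: float, sigma: float) -> float:
    """Average negative log-likelihood of xs under N(mu, sigma^2)."""
    return statistics.fmean(
        0.5 * math.log(2 * math.pi * sigma ** 2) + (x - mu) ** 2 / (2 * sigma ** 2)
        for x in xs
    )


def nll_on_real_data(generations: int, n: int, seed: int = 0) -> list[float]:
    """Score each generation of a self-trained Gaussian on held-out real data."""
    rng = random.Random(seed)
    real_test = [rng.gauss(0.0, 1.0) for _ in range(1000)]  # never trained on
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]           # real training data
    scores = []
    for _ in range(generations):
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
        scores.append(gaussian_nll(real_test, mu, sigma))
        data = [rng.gauss(mu, sigma) for _ in range(n)]      # synthetic from here on
    return scores


scores = nll_on_real_data(generations=200, n=20)
print(f"generation 0: {scores[0]:.2f}, generation 199: {scores[-1]:.3g}")
```

As the fitted spread collapses, real data points outside the narrowed distribution become extremely unlikely under the model, so the score on real data worsens even though each model fits its own synthetic training data well.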

Decreased Generalization Capabilities

Real-world AI models are often expected to generalize their knowledge to unseen data. If the training data is limited to the outputs of a specific generative process, the model’s ability to handle novel situations or variations outside of that generated domain will be severely impaired. The model becomes exceptionally good at generating variations within its limited, synthetic world but struggles when confronted with the messiness and unexpectedness of the real world.

The “Memorization” Effect

Overfitting to Synthetic Patterns

In some cases, model collapse can lead to a situation where the AI model essentially “memorizes” the patterns of the AI-generated data rather than truly understanding the underlying concepts. This is a form of extreme overfitting. The model becomes incredibly proficient at reproducing the specific style and quirks of its synthetic training set, but this proficiency does not translate to genuine intelligence or adaptability. It’s like a student who memorizes answers for a specific exam without truly learning the subject matter; they might ace that particular test but fail a slightly different one.

Factors Influencing Model Collapse

Several factors dictate the speed and severity of model collapse. Understanding these can help in mitigating its effects.

Dataset Size and Diversity

The Role of Original Data

The sheer volume and diversity of the initial, real-world training data play a crucial role. A richly varied and extensive initial dataset provides a stronger foundation, making it more resilient to the diluting effects of synthetic data. If the initial dataset is already narrow or biased, the collapse will likely be accelerated.

The Diversity of Generated Output

The capacity of generative models to create novel content is also a factor. Models capable of generating highly diverse and less repetitive synthetic data might delay the onset of collapse compared to those that tend to produce more homogenous outputs.

Retraining Frequency and Methodology

How Often is Retraining Occurring?

How often models are retrained on newly generated data directly affects how quickly collapse sets in. Each retraining cycle is another pass through the feedback loop, so continuous or rapid retraining compounds the degradation faster.
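Under a simplifying assumption, the effect of repeated retraining can be worked out exactly. Suppose the model is a one-dimensional Gaussian, and each generation refits its variance by maximum likelihood (dividing by n) to n samples drawn from the previous generation's fit, starting from real data with variance σ². The maximum-likelihood estimate is biased low by the factor (n − 1)/n, so the expected variance decays geometrically in the number of retraining cycles g:

```latex
\hat\sigma_0^2 = \sigma^2, \qquad
\mathbb{E}\!\left[\hat\sigma_g^2 \,\middle|\, \hat\sigma_{g-1}^2\right] = \frac{n-1}{n}\,\hat\sigma_{g-1}^2
\quad\Longrightarrow\quad
\mathbb{E}\!\left[\hat\sigma_g^2\right] = \left(\frac{n-1}{n}\right)^{g}\sigma^2
```

For n = 20 and g = 100, the expected variance is about 0.6% of the original. In this toy model, more frequent retraining simply means more cycles g in a given period, and therefore faster decay.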

The “Generative Ratio”

The proportion of AI-generated data relative to real-world data in a training set is a critical factor. If a training set consists largely of synthetic data, the risk of collapse increases significantly. A healthy balance, or deliberate augmentation with fresh real-world data, can act as a buffer.
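The buffering effect can be sketched with a toy model in which a one-dimensional Gaussian stands in for the generative model. Each generation's training set mixes a fixed fraction of fresh real samples with synthetic samples from the previous fit, so the generative ratio is one minus `real_fraction`. The function `final_std` and its defaults are illustrative assumptions.

```python
import random
import statistics


def final_std(real_fraction: float, generations: int = 500, n: int = 50,
              seed: int = 0) -> float:
    """Run a toy Gaussian retraining loop with a fixed share of fresh real data.

    Each generation trains on round(real_fraction * n) new samples from the
    real distribution N(0, 1) plus synthetic samples from the previous fit.
    Returns the fitted standard deviation after the last generation.
    """
    rng = random.Random(seed)
    n_real = round(real_fraction * n)
    mu, sigma = 0.0, 1.0  # start from a model that matches the real data
    for _ in range(generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(n - n_real)]
        data = real + synthetic
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
    return sigma


for fraction in (0.0, 0.1, 0.5):
    print(f"real fraction {fraction:.1f}: final std {final_std(fraction):.3g}")
```

In this toy setting the purely synthetic loop collapses toward zero spread, while a loop that keeps drawing real data stays near the real standard deviation of 1. Sweeping `real_fraction` over a finer grid is a cheap way to look for the threshold at which degradation starts.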

Model Architecture and Training Objectives

Specific Model Capacities

Different AI architectures might have varying susceptibilities. For instance, some architectures might be more prone to memorizing patterns, while others might be better at generalizing, even from synthetic data.

The Goals of Training

The specific objectives set for AI models also influence their susceptibility. If a model is trained for a very narrow task, it might become more susceptible to collapsing around the specific outputs of generative models for that task.

Detecting and Measuring Model Collapse

Identifying that model collapse is occurring and quantifying its extent are essential for addressing it.

Algorithmic Detection Methods

Statistical Anomaly Detection

Researchers are developing statistical methods to identify deviations from expected data distributions. If the distribution of generated data starts to drift significantly from the distribution of the original, real-world data, it can be an indicator of collapse. This is akin to a seismologist detecting subtle tremors that precede a larger earthquake.
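A minimal sketch of one such drift check, assuming one-dimensional numeric data, is the two-sample Kolmogorov-Smirnov statistic: the largest gap between the empirical cumulative distribution functions of a real reference sample and a generated sample. The 0.1 threshold in `has_drifted` is a hypothetical value that would need calibrating; `scipy.stats.ks_2samp` computes the same statistic along with a p-value.

```python
import bisect
import random


def ks_statistic(a: list[float], b: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic.

    The largest absolute gap between the empirical CDFs of a and b. Because
    both CDFs are step functions, checking every observed value is enough.
    """
    a, b = sorted(a), sorted(b)
    gap = 0.0
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def has_drifted(reference: list[float], generated: list[float],
                threshold: float = 0.1) -> bool:
    """Flag drift when the KS statistic exceeds a (hypothetical) threshold."""
    return ks_statistic(reference, generated) > threshold


rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(2000)]
narrowed = [rng.gauss(0.0, 0.3) for _ in range(2000)]  # collapsed-looking output
print(ks_statistic(real, narrowed), has_drifted(real, narrowed))
```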

Information-Theoretic Measures

Techniques borrowed from information theory can measure the loss of information, or the growth of redundancy, in datasets over time. Falling entropy, or shifts in mutual-information measures, can signal data degradation: lower entropy in generated outputs means the model is producing a narrower, more predictable range of content.
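As an illustration of the entropy idea, the sketch below computes the Shannon entropy, in bits, of the empirical token distribution of a text sample. Tracking this number across generations of generated text is one simple monitoring signal, offered here as an assumption rather than an established protocol; whitespace tokenization is a simplification.

```python
import math
from collections import Counter


def shannon_entropy(tokens: list[str]) -> float:
    """Entropy, in bits, of the empirical distribution of tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return sum((c / total) * math.log2(total / c) for c in counts.values())


varied = "the cat sat on the mat".split()
repetitive = "the cat the cat the cat".split()
print(shannon_entropy(varied), shannon_entropy(repetitive))
```

The repetitive sample has exactly 1 bit of entropy (two equally frequent tokens), well below the varied sample, reflecting its narrower vocabulary.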

Performance Benchmarking Over Time

Evaluating Against Ground Truth

One of the most direct ways to detect model collapse is to continuously evaluate the performance of AI models on benchmark datasets that represent the real world. A consistent decline in accuracy or other performance metrics on these benchmarks serves as a strong signal that the models are becoming less capable.
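One simple rule for turning periodic benchmark results into an alert, assumed here for illustration, is to flag a run of consecutive declines. The helper `consistent_decline` is hypothetical; the window size and tolerance are tuning choices, and the score lists below are illustrative, not real benchmark results.

```python
def consistent_decline(scores: list[float], window: int = 3,
                       tolerance: float = 0.0) -> bool:
    """True if each of the last `window` scores fell by more than `tolerance`
    relative to the score before it."""
    if len(scores) < window + 1:
        return False
    recent = scores[-(window + 1):]
    return all(later < earlier - tolerance
               for earlier, later in zip(recent, recent[1:]))


# Hypothetical accuracy on a fixed real-world benchmark after each retraining.
print(consistent_decline([0.92, 0.91, 0.88, 0.85, 0.81]))  # steady decline
print(consistent_decline([0.92, 0.90, 0.91, 0.89, 0.90]))  # noisy but flat
```

Requiring several consecutive drops, rather than reacting to a single bad evaluation, trades a little detection delay for fewer false alarms caused by ordinary evaluation noise.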

Controlled Experiments

Setting up controlled experiments where models are trained on progressively larger proportions of synthetic data can help in understanding the specific thresholds at which performance degradation becomes significant.

The following metrics can be used to track the effects of training on AI-generated data. The example values are illustrative only.

| Metric | Description | Effect of Training on AI-Generated Data | Illustrative Values (real → synthetic training) |
|---|---|---|---|
| Model Accuracy | Percentage of correct predictions on a validation set | Decreases as the model overfits to synthetic patterns, reducing generalization | 92% → 75% |
| Diversity of Outputs | Variety in generated responses or predictions | Significantly reduced due to repetitive AI-generated training samples | High → Low |
| Perplexity | Measure of uncertainty in language models (lower is better) | Increases on real-world text, indicating a poorer fit to real data | 15 → 30 |
| Overfitting Rate | Degree to which the model memorizes training data instead of generalizing | Increases, causing poor performance on real-world data | 10% → 40% |
| Training Stability | Consistency of model performance across training epochs | Decreases, with more fluctuations and potential collapse events | Stable → Unstable |
| Data Quality Score | Assessment of input data fidelity and relevance (scale 0 to 1) | Lower for AI-generated data, leading to degraded model learning | 0.9 → 0.6 |

Mitigating the Risks of Model Collapse

Fortunately, there are strategies to counteract the effects of model collapse.

Strategies for Maintaining Data Integrity

Continuous Ingestion of Real-World Data

The most straightforward solution is to ensure a continuous and substantial influx of fresh, real-world data into training pipelines. This acts as an anchor, constantly recalibrating the AI’s understanding to the actual world. It’s like adding fresh ingredients to a soup that has been simmering for a long time to prevent it from becoming too concentrated.

Data Curation and Filtering

Implementing robust data curation and filtering processes can help identify and remove low-quality or repetitive AI-generated data before it enters training sets. This acts as a quality control mechanism.
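One common curation step, shown here as a minimal sketch, is near-duplicate removal: represent each document by its set of lowercase word 3-grams (shingles) and drop any document whose Jaccard similarity to an already-kept document reaches or exceeds a threshold. The 0.8 threshold and the helper names are assumptions; this pairwise version is quadratic in the number of documents, so large pipelines typically approximate it with techniques such as MinHash.

```python
def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of lowercase word k-grams; short texts become a single shingle."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)}
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def filter_near_duplicates(docs: list[str], threshold: float = 0.8,
                           k: int = 3) -> list[str]:
    """Keep a document only if it is not too similar to any already-kept one."""
    kept, kept_shingles = [], []
    for doc in docs:
        s = shingles(doc, k)
        if all(jaccard(s, other) < threshold for other in kept_shingles):
            kept.append(doc)
            kept_shingles.append(s)
    return kept


docs = [
    "the cat sat on the mat",
    "The cat sat on the mat",   # same text, different case
    "a dog ran in the park",
]
print(filter_near_duplicates(docs))
```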

Architectural and Algorithmic Solutions

Robust Training Techniques

Developing and employing training techniques that are more resistant to overfitting and memorization, even in the presence of noisy or synthetic data, is crucial. This might involve regularization methods or novel optimization algorithms.

Synthetic Data Detection and Exclusion

Research is ongoing into developing AI models capable of identifying and flagging AI-generated content. Ideally, such detectors could be used to exclude synthetic data from training sets or to label it appropriately.

Responsible Development and Deployment

Transparency in Data Sources

Promoting transparency regarding the sources of training data – clearly distinguishing between real-world and AI-generated content – is vital for researchers and developers to understand the potential risks.
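Transparency is easier to act on when provenance is recorded per example. The sketch below assumes a hypothetical two-value `source` label ("human" or "synthetic") on each training example, reports the synthetic share, and caps it by dropping synthetic examples. The schema and the capping rule are illustrative, not an established standard.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    text: str
    source: str  # hypothetical provenance label: "human" or "synthetic"


def synthetic_share(examples: list[Example]) -> float:
    """Fraction of examples labeled as AI-generated."""
    if not examples:
        return 0.0
    return sum(e.source == "synthetic" for e in examples) / len(examples)


def cap_synthetic(examples: list[Example], max_share: float) -> list[Example]:
    """Keep every human example and at most enough synthetic ones that their
    share does not exceed max_share (human examples are returned first)."""
    human = [e for e in examples if e.source != "synthetic"]
    synthetic = [e for e in examples if e.source == "synthetic"]
    if max_share >= 1.0:
        return human + synthetic
    # s / (h + s) <= max_share  is equivalent to  s <= max_share * h / (1 - max_share)
    limit = math.floor(max_share * len(human) / (1.0 - max_share))
    return human + synthetic[:limit]


data = [Example(f"human {i}", "human") for i in range(3)]
data += [Example(f"synthetic {i}", "synthetic") for i in range(5)]
print(synthetic_share(data), synthetic_share(cap_synthetic(data, 0.5)))
```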

Ethical Guidelines for AI Training

Establishing ethical guidelines and best practices for the development and deployment of AI that specifically address the challenges of model collapse can foster a more responsible AI ecosystem. This includes encouraging collaboration to share insights and mitigation strategies.

The prospect of model collapse presents a significant challenge to the continued advancement and reliability of artificial intelligence. As AI systems become more integrated into our lives, understanding and actively addressing this phenomenon will be paramount to ensuring that AI remains a beneficial and trustworthy tool.

FAQs

What is model collapse in the context of AI training?

Model collapse refers to a phenomenon where an AI model’s performance deteriorates because it is trained on data generated by AI models, including earlier versions of itself, rather than on original, human-generated data. This can lead to reduced diversity and quality in the training data, causing the model to produce less accurate or less reliable outputs.

Why does training AI on AI-generated data cause problems?

Training AI on AI-generated data can cause problems because the generated data may contain biases, errors, or lack the richness and variability of real-world data. Over time, this can create a feedback loop where the model learns from its own mistakes or limitations, amplifying errors and reducing overall effectiveness.

How can model collapse affect AI applications?

Model collapse can lead to decreased accuracy, creativity, and generalization in AI applications. This means AI systems might produce repetitive, low-quality, or incorrect results, which can impact areas like natural language processing, image recognition, and decision-making systems.

Are there ways to prevent model collapse when using AI-generated data?

Yes, preventing model collapse involves strategies such as mixing AI-generated data with high-quality human-generated data, continuously updating training datasets, using diverse data sources, and implementing rigorous validation and testing to ensure the model maintains performance and accuracy.

Is AI-generated data useful despite the risk of model collapse?

AI-generated data can be useful for augmenting datasets, especially when human-generated data is scarce or expensive to obtain. However, it should be used cautiously and in combination with real data to avoid the pitfalls of model collapse and to maintain the integrity and performance of AI models.