Decentralized data networks represent a significant shift in how data is stored, managed, and used. Unlike traditional centralized systems, where data is controlled by a single entity, decentralized networks distribute data across multiple nodes. This architecture improves resilience by avoiding single points of failure. The rise of blockchain technology and peer-to-peer networking has made these systems practical, bringing greater transparency and broader access to data. As organizations increasingly recognize the limitations of centralized data management, decentralized networks are gaining traction across various sectors.

The implications of decentralized data networks extend beyond data storage. They enable new approaches to collaboration, data sharing, and application development. By allowing multiple stakeholders to contribute to and access a shared dataset, these networks foster innovation and can lead to more robust artificial intelligence (AI) applications. Integrating AI into decentralized frameworks presents unique opportunities and challenges, particularly in how models are trained and deployed. Understanding how decentralized data networks behave is therefore essential for using them effectively in AI development.

Key Takeaways

- Decentralized data networks enable distributed data storage and processing without central control.

- AI models leverage decentralized networks to access diverse and large-scale datasets for improved learning.

- Training AI on decentralized data faces challenges like data heterogeneity, communication overhead, and privacy concerns.

- Solutions include federated learning, secure multi-party computation, and blockchain integration to enhance training efficiency and security.

- Benefits include improved data privacy, robustness, scalability, and democratization of AI development.

The Role of AI Models in Decentralized Data Networks

AI models play a central role in decentralized data networks by enabling decisions based on distributed datasets. They can analyze large volumes of information from many sources and surface patterns that no single participant's data would reveal on its own. In a decentralized context, AI can make data processing more efficient and predictions more accurate by drawing on diverse datasets that reflect a broader range of populations and conditions. This capability is particularly valuable in fields such as healthcare, finance, and supply chain management, where data is often siloed within organizations.

Moreover, AI models can facilitate real-time analytics in decentralized networks, allowing for immediate responses to changing conditions. For instance, in a decentralized supply chain network, AI can optimize logistics by analyzing data from multiple suppliers and distributors simultaneously. This not only improves operational efficiency but also enhances the overall responsiveness of the network. As AI continues to evolve, its integration into decentralized frameworks will likely lead to more sophisticated applications that can adapt to complex environments and user needs.

Challenges of Training AI Models on Decentralized Data Networks

Training AI models on decentralized data networks presents several challenges that must be addressed to realize their potential. One significant issue is the heterogeneity of data sources. In a decentralized environment, data arrives in different formats and structures and at different quality levels, so it cannot simply be treated as one unified training set. Local datasets are also often not independently and identically distributed (non-IID): one hospital may see mostly elderly patients while another sees mostly children. This variability can make model performance inconsistent across nodes, slow convergence, and complicate the training process. In addition, the lack of centralized control makes it harder to ensure data quality and integrity.
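Researchers often simulate this kind of heterogeneity by splitting one labeled dataset across nodes with a class mix drawn from a Dirichlet distribution, where a smaller concentration parameter `alpha` produces more skewed nodes. The sketch below is a minimal, standard-library-only version of that idea; the function name `dirichlet_partition`, the toy labels, and the value of `alpha` are illustrative assumptions, not part of any particular framework.

```python
import random
from collections import defaultdict

def dirichlet_partition(labels, num_nodes, alpha, seed=0):
    """Split sample indices across nodes with label skew (non-IID).

    For each class, draw node proportions from Dirichlet(alpha) and deal
    that class's samples out accordingly. Smaller alpha -> more skew.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    partitions = [[] for _ in range(num_nodes)]
    for idxs in by_class.values():
        rng.shuffle(idxs)
        # A Dirichlet sample is a vector of Gamma(alpha, 1) draws, normalized.
        gammas = [rng.gammavariate(alpha, 1.0) for _ in range(num_nodes)]
        total = sum(gammas) or 1.0  # guard against all draws underflowing to 0
        start, cum = 0, 0.0
        for node in range(num_nodes):
            cum += gammas[node] / total
            # The last node takes the remainder so every sample is assigned once.
            end = len(idxs) if node == num_nodes - 1 else round(cum * len(idxs))
            partitions[node].extend(idxs[start:end])
            start = end
    return partitions

labels = [i % 10 for i in range(1000)]  # toy dataset: 10 classes, 100 samples each
parts = dirichlet_partition(labels, num_nodes=5, alpha=0.3)
for node, idxs in enumerate(parts):
    counts = [0] * 10
    for i in idxs:
        counts[labels[i]] += 1
    print(f"node {node}: {counts}")
```

With `alpha=0.3`, most nodes end up dominated by a few classes; as `alpha` grows large, each node's class mix approaches the global one.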

Another challenge is the uneven distribution of computational resources across nodes. Unlike centralized systems, where compute can be pooled in one place, decentralized networks may have limited processing power at individual nodes. Because a synchronous training round cannot finish until every participating node has reported, this fragmentation slows training and complicates the use of algorithms that need substantial computation. Communication latency between nodes further reduces efficiency, since effective learning requires frequent updates and synchronization.
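A back-of-envelope estimate shows how one slow node sets the pace of synchronous training. This sketch assumes that every node participates in every round, that a round ends only when the slowest node finishes, and that communication adds a fixed delay per round; the function name and numbers are hypothetical.

```python
def synchronous_training_time(compute_times_s, latency_s, rounds):
    """Estimate wall-clock seconds for synchronous training.

    Each round closes only when the slowest node has finished its local
    computation, and then pays one fixed communication delay.
    """
    round_time = max(compute_times_s) + latency_s
    return rounds * round_time

balanced = [2.0] * 10                # ten nodes, 2 s of local compute each
with_straggler = [2.0] * 9 + [12.0]  # same, but one node needs 12 s

print(synchronous_training_time(balanced, latency_s=1.0, rounds=100))        # 300.0
print(synchronous_training_time(with_straggler, latency_s=1.0, rounds=100))  # 1300.0
```

One underpowered node makes the whole run more than four times slower here. Asynchronous schemes, or rounds that close once a quorum of nodes has reported, reduce this cost at the price of staler or partial updates.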

Solutions for Training AI Models on Decentralized Data Networks

To overcome the challenges associated with training AI models on decentralized data networks, several solutions have been proposed. One approach is federated learning, which allows models to be trained across multiple devices without requiring raw data to be centralized. In this framework, each node trains a local model using its own data and then shares only the model updates with a central server or other nodes. This method preserves data privacy while enabling collaborative learning across the network.
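The round structure described above can be sketched in a few lines. This is a minimal illustration, assuming a toy one-dimensional linear model, a central server, and node models weighted by local sample count, as in the Federated Averaging (FedAvg) algorithm; the function names, learning rate, and synthetic data are hypothetical.

```python
import random

def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of SGD on one node's private data.

    The model is 1-D linear regression y ~ w*x + b; only the updated
    (w, b) leaves the node, never the (x, y) pairs.
    """
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b), len(data)

def federated_average(results):
    """Average node models, weighting each by its local sample count."""
    total = sum(n for _, n in results)
    w = sum(model[0] * n for model, n in results) / total
    b = sum(model[1] * n for model, n in results) / total
    return (w, b)

def make_node_data(rng, n, x_lo, x_hi):
    # Each node sees a different x-range, a simple form of heterogeneity.
    # Ground truth (assumed for the demo): y = 3x + 1 plus small Gaussian noise.
    xs = [rng.uniform(x_lo, x_hi) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.1)) for x in xs]

rng = random.Random(42)
nodes = [make_node_data(rng, 50, 0.0, 1.0),
         make_node_data(rng, 80, 1.0, 2.0),
         make_node_data(rng, 30, -1.0, 0.0)]

global_model = (0.0, 0.0)
for _ in range(20):  # 20 communication rounds
    results = [local_train(global_model, data) for data in nodes]
    global_model = federated_average(results)

print(global_model)  # should be close to (3.0, 1.0)
```

Weighting by sample count keeps the node with 30 examples from counting as much as the node with 80; the server never sees any node's raw data.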

Another solution is to pair decentralized training with techniques that protect privacy and tolerate faulty participants. Differential privacy, for example, adds carefully calibrated noise to model updates so that individual data points remain confidential while still contributing to training. Robust aggregation methods, such as the coordinate-wise median or trimmed mean, combine updates from diverse sources in a way that limits the influence of outliers and malicious nodes. Together, these strategies address privacy concerns and make models trained in decentralized settings more reliable.
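Both ideas can be sketched on plain lists of numbers. The code below is a simplified illustration, not a vetted privacy library: `dp_mean` follows the clip-then-add-Gaussian-noise pattern used in differentially private federated averaging, and `coordinate_median` is one simple robust aggregation rule. The function names, clipping bound, and noise multiplier are assumptions chosen for the example, and the sketch does not compute a formal privacy budget.

```python
import math
import random
import statistics

def clip_update(update, max_norm):
    """Scale an update vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def dp_mean(updates, max_norm, noise_multiplier, rng):
    """Clip each update, sum, add Gaussian noise to the sum, then average.

    Noise std is noise_multiplier * max_norm. Turning this into a formal
    (epsilon, delta) guarantee needs a privacy accountant, omitted here.
    """
    clipped = [clip_update(u, max_norm) for u in updates]
    sigma = noise_multiplier * max_norm
    summed = [sum(col) + rng.gauss(0.0, sigma) for col in zip(*clipped)]
    return [s / len(updates) for s in summed]

def coordinate_median(updates):
    """Robust aggregation: take the median of each coordinate separately."""
    return [statistics.median(col) for col in zip(*updates)]

honest = [[0.9, -0.2], [1.1, -0.1], [1.0, -0.3], [0.95, -0.15]]
poisoned = honest + [[50.0, 40.0]]  # one malicious or faulty node

plain_mean = [sum(col) / len(col) for col in zip(*poisoned)]
print(plain_mean)                   # dragged far off by the outlier
print(coordinate_median(poisoned))  # [1.0, -0.15]
print(dp_mean(honest, max_norm=1.0, noise_multiplier=0.5, rng=random.Random(0)))
```

The median ignores the single extreme update, while the plain mean is pulled toward it; the noisy mean trades some accuracy for privacy.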

Benefits of Training AI Models on Decentralized Data Networks

| Metric | Description | Typical Values | Impact on AI Training |
|---|---|---|---|
| Number of Nodes | Count of decentralized devices or servers participating in training | 10 – 10,000+ | Higher number increases data diversity and robustness |
| Data Volume per Node (GB) | Amount of data stored locally on each node | 1 – 500 | More data per node improves local model accuracy |
| Communication Rounds | Number of iterations of model updates exchanged between nodes and the central server | 10 – 1,000 | More rounds improve model convergence but increase latency |
| Model Update Size (MB) | Size of model parameters sent per communication round | 1 – 100 | Smaller updates reduce bandwidth usage |
| Latency per Round (s) | Time delay for one communication round | 0.5 – 10 | Lower latency speeds up training |
| Data Privacy Level | Degree of privacy preservation (e.g., differential privacy, encryption) | High, Medium, Low | Higher privacy may reduce data utility but protects user data |
| Model Accuracy (%) | Performance metric of the trained AI model | 70 – 95 | Higher accuracy indicates better learning from decentralized data |
| Energy Consumption (kWh) | Energy used per training round | 0.1 – 5 | Lower energy consumption is desirable for sustainability |
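Several of these metrics multiply together: total traffic grows with node count, rounds, and update size. A rough estimate might look like the sketch below, assuming every node joins every round, downloads the global model and uploads an update of the same size, and rounds run back to back. The function name is hypothetical and the inputs are picked from within the table's ranges purely for illustration.

```python
def training_budget(num_nodes, rounds, update_mb, latency_s):
    """Back-of-envelope traffic (GB) and communication delay (s) for a run.

    Per round, each node downloads the global model and uploads one update,
    so traffic is 2 * nodes * rounds * update size. Uses 1 GB = 1000 MB.
    """
    traffic_gb = 2 * num_nodes * rounds * update_mb / 1000
    comm_delay_s = rounds * latency_s
    return traffic_gb, comm_delay_s

print(training_budget(num_nodes=100, rounds=200, update_mb=10, latency_s=2.0))
# (400.0, 400.0)
```

Even these modest settings move hundreds of gigabytes, which is why shrinking the model update size or the number of rounds often matters as much as raw network speed.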

Training AI models on decentralized data networks offers several advantages that can enhance both model performance and user experience. One key benefit is improved data diversity. By leveraging data from multiple sources, models can be trained on a more comprehensive dataset that captures a wider range of scenarios and conditions. This diversity can lead to more accurate predictions and better generalization when applied to real-world situations.

Additionally, decentralized training can enhance privacy and security for users. Since raw data does not need to be shared or centralized, individuals retain greater control over their information. This aspect is particularly important in sensitive domains such as healthcare or finance, where data breaches can have severe consequences. By minimizing the exposure of personal data during the training process, decentralized networks can foster greater trust among users and encourage participation in collaborative AI initiatives.

Security and Privacy Considerations in Decentralized Data Networks

Security and privacy are paramount concerns in decentralized data networks, especially when integrating AI models that rely on sensitive information. The distributed nature of these networks introduces unique vulnerabilities that must be addressed to protect user data effectively. One major concern is the potential for malicious actors to exploit weaknesses in individual nodes or communication channels to gain unauthorized access to sensitive information.

To mitigate these risks, robust encryption methods are essential for securing data both at rest and in transit. Implementing end-to-end encryption ensures that only authorized parties can access the information being shared within the network. Additionally, employing blockchain technology can enhance security by providing an immutable record of transactions and interactions within the network. This transparency helps build trust among participants while also enabling traceability in case of security breaches.
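Much of the tamper evidence a blockchain provides comes from hash chaining: each entry stores the hash of the one before it, so altering any past record invalidates every later link. The sketch below shows only that property for a hypothetical log of model-update digests; it omits the replication, signatures, and consensus protocol a real blockchain adds, and it does not encrypt anything (transport encryption such as TLS would be handled separately). The function names and record fields are illustrative.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def entry_hash(prev_hash, record):
    """Hash a record together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()

def append_entry(chain, record):
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})

def verify(chain):
    """Recompute every link; an edited record breaks the chain at that entry."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True

ledger = []
append_entry(ledger, {"round": 1, "node": "node-a",
                      "update_sha256": hashlib.sha256(b"update from a").hexdigest()})
append_entry(ledger, {"round": 1, "node": "node-b",
                      "update_sha256": hashlib.sha256(b"update from b").hexdigest()})
print(verify(ledger))  # True

ledger[0]["record"]["node"] = "node-z"  # tamper with history
print(verify(ledger))  # False
```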

Case Studies of Successful AI Model Training on Decentralized Data Networks

Several case studies illustrate the successful application of AI model training on decentralized data networks across various industries. One notable example is in healthcare, where federated learning has been employed to train predictive models using patient data from multiple hospitals without compromising patient privacy. By collaborating on model development while keeping sensitive health information local, healthcare providers have been able to improve diagnostic accuracy and treatment recommendations.

Another example comes from the financial sector, where decentralized networks have been used to enhance fraud detection systems. By training on transaction data held by multiple financial institutions, without pooling that data and under strict privacy controls, AI models have been trained to identify patterns indicative of fraudulent activity more effectively than traditional methods. These case studies demonstrate not only that training AI models in decentralized environments is feasible but also that it can drive innovation and improve outcomes across different fields.

Future Trends in AI Model Training on Decentralized Data Networks

Looking ahead, several trends are likely to shape the future of AI model training on decentralized data networks. One significant trend is the increasing adoption of federated learning as organizations seek to balance collaboration with privacy concerns. As more industries recognize the value of shared insights without compromising sensitive information, federated learning frameworks will likely become more prevalent.

Additionally, advancements in edge computing may further enhance the capabilities of decentralized networks by enabling more powerful processing at individual nodes. This shift could reduce latency issues associated with model training and allow for real-time analytics even in resource-constrained environments. As technology continues to evolve, the integration of AI with decentralized data networks will likely lead to innovative applications that address complex challenges across various sectors.

In conclusion, decentralized data networks present both opportunities and challenges for training AI models. By leveraging diverse datasets while addressing security and privacy concerns, organizations can harness the power of AI in ways that were previously unattainable with centralized systems. As these technologies continue to develop, their impact on industries ranging from healthcare to finance will likely be profound, paving the way for more efficient and equitable solutions in an increasingly interconnected world.

FAQs

What are decentralized data networks in the context of AI training?

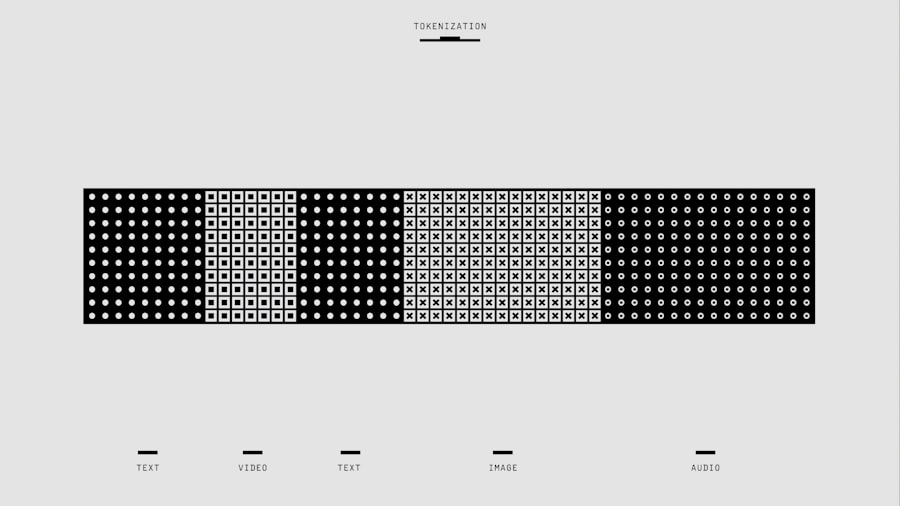

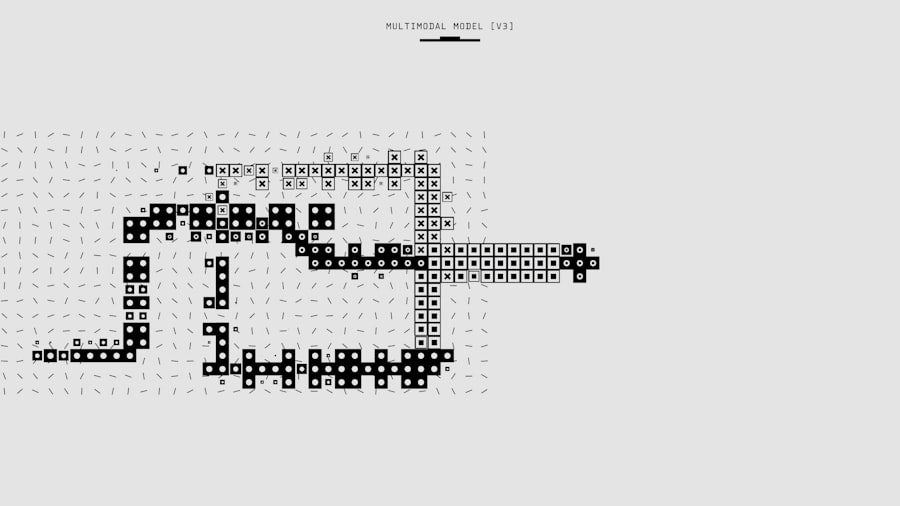

Decentralized data networks refer to systems where data is distributed across multiple nodes or locations rather than being stored in a single centralized database. This setup allows AI models to be trained on data from various sources without aggregating all data in one place.

How does training AI models on decentralized data differ from traditional centralized training?

In decentralized training, AI models learn from data stored locally on different devices or servers, often using techniques like federated learning. This contrasts with centralized training, where all data is collected and processed in a single location, potentially raising privacy and security concerns.

What are the benefits of using decentralized data networks for AI model training?

Decentralized training enhances data privacy and security by keeping sensitive information on local devices. Because only model updates are exchanged, it also avoids moving large raw datasets over the network, and it can draw on diverse data from multiple sources, potentially improving model robustness and generalization.

What challenges exist when training AI models on decentralized data networks?

Challenges include handling heterogeneous data formats, ensuring model convergence across distributed nodes, managing communication overhead, and addressing privacy and security risks such as data poisoning or model inversion attacks.

Which technologies or methods enable AI training on decentralized data networks?

Key technologies include federated learning, secure multi-party computation, differential privacy, and blockchain. These methods facilitate collaborative model training while preserving data privacy and ensuring trust among participating nodes.
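Secure multi-party computation can be illustrated with additive secret sharing, one of its simplest building blocks: each party splits its private value into random shares, and only the total is ever reconstructed. This minimal sketch assumes honest-but-curious parties that all stay online and non-negative integer inputs; real secure-aggregation protocols also handle dropouts and encode real-valued model updates as fixed-point integers. The function names and example counts are hypothetical.

```python
import secrets

MODULUS = 2**61 - 1  # a Mersenne prime; all share arithmetic is done modulo this value

def share(value, num_parties):
    """Split value into num_parties random shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Sum the parties' private integers without revealing any single one.

    Party i sends its j-th share to party j, so each party receives one share
    from every party; any single share on its own is uniformly random. Each
    party then publishes only the sum of the shares it received, and those
    partial sums add up to the true total.
    """
    n = len(private_values)
    received = [[] for _ in range(n)]
    for value in private_values:
        for j, s in enumerate(share(value, n)):
            received[j].append(s)
    partial_sums = [sum(r) % MODULUS for r in received]
    return sum(partial_sums) % MODULUS

counts = [120, 45, 300]  # hypothetical local fraud-case counts at three institutions
print(secure_sum(counts))  # 465
```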