Algorithmic bias refers to the systematic and unfair discrimination that can arise from the algorithms used in various automated systems. These biases often stem from the data on which the algorithms are trained, reflecting existing prejudices and inequalities present in society. For instance, if an algorithm is trained on historical data that contains biased outcomes—such as hiring practices that favor certain demographics over others—the algorithm may perpetuate these biases in its predictions or decisions.

This phenomenon is not merely a technical flaw; it reflects societal values and structures, and it can have significant real-world consequences.

Algorithmic bias has several possible sources. It may originate in data collection methods that underrepresent or misrepresent certain groups. Facial recognition systems, for example, have been shown to have higher error rates for people with darker skin tones, a disparity widely attributed to training data that underrepresents them. The design of the algorithm itself can also introduce bias, particularly if developers do not account for diverse perspectives during development. Understanding these nuances is crucial for addressing the broader implications of algorithmic bias in technology and society.

Key Takeaways

- Algorithmic bias refers to the unfair and discriminatory outcomes produced by automated systems due to biased data or flawed algorithms.

- Algorithmic bias can perpetuate societal inequalities, reinforce stereotypes, and limit opportunities for certain groups.

- AI can play a crucial role in identifying bias by using techniques such as fairness metrics, bias detection algorithms, and interpretability tools.

- Mitigating bias in automated systems requires a multifaceted approach, including diverse and inclusive data collection, algorithmic transparency, and continuous monitoring for bias.

- Ethical considerations in AI and bias involve addressing issues of accountability, transparency, and the potential for harm to individuals and communities.

The Impact of Algorithmic Bias

Healthcare Inequities

In the healthcare sector, biased algorithms can lead to misdiagnoses or inadequate treatment recommendations for marginalized groups. A 2019 study published in Science found that a widely used algorithm for deciding which patients should receive additional care recommended Black patients less often than white patients with similar health needs, largely because it used past healthcare spending as a proxy for need.
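A toy simulation helps show how a proxy target can produce this kind of disparity. The sketch below assumes, hypothetically, that two groups have identical distributions of health need but that group B converts only half of its need into spending (for example, because of unequal access to care). Ranking patients by past cost rather than by need then selects fewer group B patients. The `Patient` class, the 0.5 access factor, and the group labels are illustrative assumptions, not details of any real system.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    need: float  # true health need (not directly observed by the algorithm)
    cost: float  # past spending, the proxy the ranking relies on


def make_patients(access):
    """Build patients with identical need profiles in every group.

    `access` maps group -> fraction of need that turns into spending
    (a hypothetical stand-in for unequal access to care).
    """
    return [
        Patient(group, need, need * factor)
        for group, factor in access.items()
        for need in (1, 2, 3, 4, 5)
    ]


def top_k(patients, k, key):
    """Return the k patients with the highest value of `key`."""
    return sorted(patients, key=key, reverse=True)[:k]


def count_group(selected, group):
    return sum(1 for p in selected if p.group == group)


patients = make_patients({"A": 1.0, "B": 0.5})
by_cost = top_k(patients, 4, key=lambda p: p.cost)
by_need = top_k(patients, 4, key=lambda p: p.need)
print(count_group(by_cost, "B"), count_group(by_need, "B"))  # → 1 2
```

Ranking by need selects the two groups equally, while ranking by the spending proxy selects three group A patients for every one from group B, even though the model never sees group membership.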

Bias in Criminal Justice

In the realm of criminal justice, algorithmic bias can influence sentencing and parole decisions. Predictive policing tools, which analyze crime data to forecast where crimes are likely to occur, can disproportionately target communities of color because they are trained on historical arrest data. This can create a feedback loop: more police presence in these communities produces more recorded arrests, which the next round of predictions treats as evidence of more crime, further entrenching systemic biases.
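The feedback loop can be illustrated with a deliberately simplified simulation. It assumes, hypothetically, that each day a single patrol is sent to whichever district has the most recorded arrests and that new arrests are recorded only where police are present. Both districts have the same true incident rate, yet the small historical gap keeps growing because the unpatrolled district never generates new records.

```python
def simulate_patrols(recorded, true_rate, days):
    """Winner-take-all patrol allocation (a deliberately simplified assumption).

    recorded:  historical arrest counts per district
    true_rate: incidents per day that a patrol would observe in each district
    Each day the single patrol goes to the district with the most recorded
    arrests, and only the patrolled district adds new records.
    """
    recorded = list(recorded)
    for _ in range(days):
        target = max(range(len(recorded)), key=lambda d: recorded[d])
        recorded[target] += true_rate[target]
    return recorded


# Two districts with the SAME underlying rate but a small historical gap.
print(simulate_patrols(recorded=[12, 10], true_rate=[3, 3], days=30))  # → [102, 10]
```

Real allocation rules are rarely this extreme, but the sketch shows why recorded arrests alone cannot reveal whether a disparity reflects crime or policing.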

Perpetuating Cycles of Inequality

The consequences of such biases extend beyond individual cases; they can shape public policy and societal perceptions of crime and safety, perpetuating cycles of inequality.

The Role of AI in Identifying Bias

Artificial intelligence (AI) plays a dual role in the context of algorithmic bias: it can both exacerbate existing biases and serve as a tool for identifying and mitigating them. Advanced machine learning techniques can analyze vast datasets to uncover patterns of bias that may not be immediately apparent to human analysts. For example, AI can be employed to audit algorithms by examining their outputs across different demographic groups, revealing disparities in treatment or outcomes that warrant further investigation.
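A basic audit of this kind can be sketched in a few lines of Python. The helper below assumes each record holds a group label, the true outcome, and the system's prediction, and it reports error, false positive, and false negative rates per group. `audit_by_group` and `largest_gap` are hypothetical names, and the records are toy values rather than real data.

```python
from collections import defaultdict


def audit_by_group(records):
    """Per-group error rates for a binary classifier.

    records: iterable of (group, y_true, y_pred) with 0/1 labels.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        if y_true:
            counts[group]["tp" if y_pred else "fn"] += 1
        else:
            counts[group]["fp" if y_pred else "tn"] += 1

    def rate(num, den):
        return num / den if den else None  # None: no examples to measure

    report = {}
    for group, c in counts.items():
        n = sum(c.values())
        report[group] = {
            "n": n,
            "error_rate": rate(c["fp"] + c["fn"], n),
            "false_positive_rate": rate(c["fp"], c["fp"] + c["tn"]),
            "false_negative_rate": rate(c["fn"], c["fn"] + c["tp"]),
        }
    return report


def largest_gap(report, metric):
    """Difference between the highest and lowest value of `metric` across groups."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    return max(values) - min(values)


records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 1), ("B", 0, 1), ("B", 0, 0),
]
report = audit_by_group(records)
print(largest_gap(report, "error_rate"))  # → 0.5
```

Splitting errors into false positives and false negatives matters because the two can harm people differently, for instance a wrongful match versus a missed one in face recognition.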

Moreover, AI-driven tools can assist in developing more equitable algorithms by providing insights into how different variables interact and influence outcomes. Techniques such as fairness-aware machine learning aim to create models that explicitly account for fairness constraints during the training process. By leveraging AI’s analytical capabilities, organizations can better understand the sources of bias within their systems and take proactive steps to address them before they manifest in harmful ways.
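As one illustration of fairness-aware training, the sketch below fits a logistic regression with a soft demographic-parity penalty: the usual log-loss plus `lam` times the squared difference in average predicted score between two groups. This is only one of several ways to encode a fairness constraint (others impose a hard constraint or adjust decision thresholds after training). The function names, the toy data, and the use of average scores instead of thresholded decisions are assumptions made to keep the example small and differentiable.

```python
import math


def _predict(w, b, x):
    z = b + sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def _mean_score_and_grad(w, b, rows):
    """Mean predicted score over `rows` and its gradient w.r.t. (w, b)."""
    n = len(rows)
    mean_p, grad_w, grad_b = 0.0, [0.0] * len(w), 0.0
    for x, _, _ in rows:
        p = _predict(w, b, x)
        s = p * (1.0 - p) / n  # derivative of the sigmoid, averaged over the group
        mean_p += p / n
        grad_b += s
        for j, xj in enumerate(x):
            grad_w[j] += s * xj
    return mean_p, grad_w, grad_b


def train_fair_logreg(rows, lam=0.0, lr=0.1, epochs=2000):
    """Logistic regression with a soft demographic-parity penalty.

    rows: list of (features, group, label), group in {"A", "B"}, label in {0, 1}.
    Loss = mean log-loss + lam * (mean score in A - mean score in B) ** 2
    """
    n_feat = len(rows[0][0])
    w, b = [0.0] * n_feat, 0.0
    group_a = [r for r in rows if r[1] == "A"]
    group_b = [r for r in rows if r[1] == "B"]
    for _ in range(epochs):
        # Gradient of the mean log-loss.
        grad_w, grad_b = [0.0] * n_feat, 0.0
        for x, _, y in rows:
            err = (_predict(w, b, x) - y) / len(rows)
            grad_b += err
            for j, xj in enumerate(x):
                grad_w[j] += err * xj
        # Gradient of the fairness penalty lam * gap ** 2.
        if lam > 0:
            pa, gwa, gba = _mean_score_and_grad(w, b, group_a)
            pb, gwb, gbb = _mean_score_and_grad(w, b, group_b)
            gap = pa - pb
            grad_b += 2 * lam * gap * (gba - gbb)
            for j in range(n_feat):
                grad_w[j] += 2 * lam * gap * (gwa[j] - gwb[j])
        b -= lr * grad_b
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
    return w, b


def score_gap(w, b, rows):
    pa = _mean_score_and_grad(w, b, [r for r in rows if r[1] == "A"])[0]
    pb = _mean_score_and_grad(w, b, [r for r in rows if r[1] == "B"])[0]
    return pa - pb


# Toy data: the single feature is correlated with group membership.
rows = [([1.0], "A", 0), ([2.0], "A", 1), ([3.0], "A", 1), ([4.0], "A", 1),
        ([-1.0], "B", 0), ([0.0], "B", 0), ([1.0], "B", 1), ([2.0], "B", 0)]
plain = train_fair_logreg(rows, lam=0.0)
fair = train_fair_logreg(rows, lam=2.0)
print(score_gap(*plain, rows), score_gap(*fair, rows))
```

With `lam=0.0` the model absorbs the correlation between the feature and group membership; increasing `lam` trades some accuracy for a smaller gap in average scores. In practice `lam` would be chosen on held-out data, and other fairness metrics would still need to be checked.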

Mitigating Bias in Automated Systems

Mitigating bias in automated systems requires a multifaceted approach that encompasses data collection, algorithm design, and ongoing evaluation. One effective strategy is to ensure diverse representation in training datasets. This involves not only including a wide range of demographic groups but also ensuring that the data accurately reflects the complexities of real-world scenarios.

For instance, when developing a hiring algorithm, it is essential to include data from various industries and job roles to avoid reinforcing stereotypes about certain professions being suited for specific demographics. In addition to improving data quality, organizations must adopt best practices in algorithm design. This includes implementing fairness constraints during model training and regularly testing algorithms for biased outcomes across different groups.
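Checking representation can start with a simple comparison between the training data and a reference population. The sketch assumes there is a group label for each training example and reference shares from a relevant source, such as the applicant pool or census data. The function name, the tolerance, and the example numbers are hypothetical.

```python
from collections import Counter


def representation_gaps(train_groups, reference_shares, tolerance=0.05):
    """Flag groups whose share of the training data differs from a reference.

    train_groups:     group label for each training example
    reference_shares: expected share per group (e.g. from the applicant pool)
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    counts = Counter(train_groups)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps


train = ["A"] * 80 + ["B"] * 12 + ["C"] * 8
print(representation_gaps(train, {"A": 0.6, "B": 0.3, "C": 0.1}))
# → {'A': (0.8, 0.6), 'B': (0.12, 0.3)}
```

A check like this only catches missing or thin coverage; data that is well represented but encodes biased historical outcomes still needs the outcome-level testing described above.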

Furthermore, involving interdisciplinary teams—including ethicists, sociologists, and domain experts—can provide valuable perspectives that help identify potential biases early in the development process.

Ethical Considerations in AI and Bias

The ethical implications of algorithmic bias are significant and warrant careful consideration by developers, policymakers, and society at large. At the core of these ethical concerns is the principle of fairness: ensuring that automated systems do not perpetuate or exacerbate existing inequalities. This raises questions about accountability: who is responsible when an algorithm produces biased outcomes? Developers may argue that they are merely following the data, while organizations may deflect responsibility onto the technology itself.

Moreover, transparency is a critical ethical consideration in addressing algorithmic bias. Stakeholders must have access to information about how algorithms are developed and how decisions are made. This transparency fosters trust and allows for informed scrutiny of automated systems. Ethical frameworks must be established to guide the development and deployment of AI technologies, ensuring that they align with societal values and promote equity rather than discrimination.

Implementing Fairness and Transparency

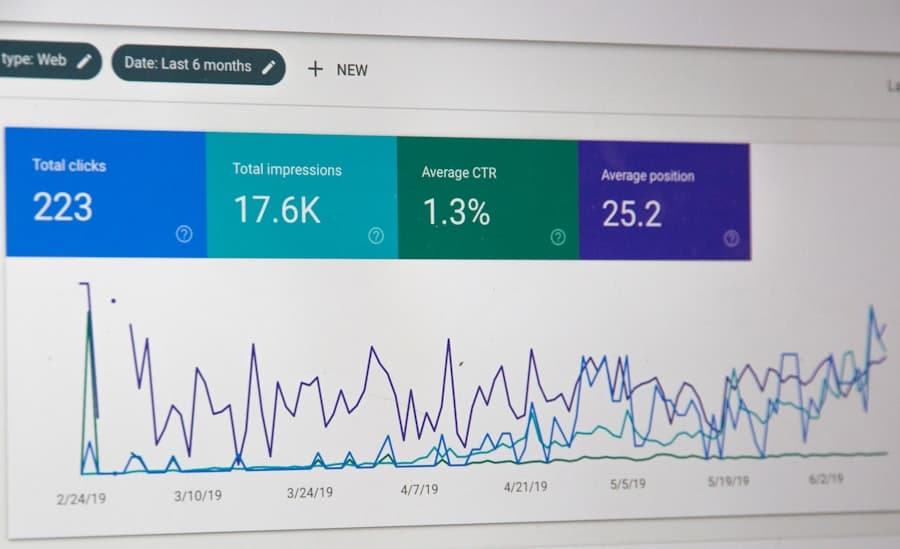

Implementing fairness and transparency in AI systems involves creating robust frameworks that prioritize ethical considerations throughout the lifecycle of algorithm development. Organizations should adopt clear guidelines for assessing fairness metrics during model evaluation. These metrics can include demographic parity, equal opportunity, and disparate impact analysis, which help quantify how different groups are affected by algorithmic decisions.
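The three metrics named above can be computed directly from predictions. Demographic parity compares selection rates across groups, equal opportunity compares true positive rates (the share of genuinely qualified people who are selected), and the disparate impact ratio divides the unprivileged group's selection rate by the privileged group's; a ratio below 0.8 is a common red flag under the "four-fifths" rule of thumb from US employment guidelines. The sketch assumes binary labels and predictions and exactly two groups; the function and argument names are illustrative.

```python
def _rate(values):
    return sum(values) / len(values)


def fairness_metrics(y_true, y_pred, groups, privileged, unprivileged):
    """Compare an unprivileged group against a privileged one (0/1 labels)."""
    def subset(g):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        return [y_true[i] for i in idx], [y_pred[i] for i in idx]

    def tpr(t, p):  # true positive rate: P(pred = 1 | actual = 1)
        return _rate([pi for ti, pi in zip(t, p) if ti == 1])

    t_p, p_p = subset(privileged)
    t_u, p_u = subset(unprivileged)
    sel_p, sel_u = _rate(p_p), _rate(p_u)  # selection rates P(pred = 1 | group)
    return {
        # Demographic parity: selection rates should match (difference 0).
        "demographic_parity_diff": sel_u - sel_p,
        # Equal opportunity: qualified people should be selected at equal rates.
        "equal_opportunity_diff": tpr(t_u, p_u) - tpr(t_p, p_p),
        # Disparate impact: ratio of selection rates; below 0.8 fails four-fifths.
        "disparate_impact_ratio": sel_u / sel_p,
    }


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["P"] * 4 + ["U"] * 4
print(fairness_metrics(y_true, y_pred, groups, privileged="P", unprivileged="U"))
```

On these toy predictions the unprivileged group is selected at a third of the privileged group's rate, and its qualified members are selected half as often (a true positive rate of 0.5 versus 1.0), so both differences come out to -0.5. The metrics can disagree with each other in general, which is why the choice of metric is itself a design decision.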

Transparency can be enhanced through documentation practices that detail the data sources, model design choices, and evaluation processes used in developing algorithms. This documentation should be accessible not only to technical teams but also to stakeholders outside the organization, including affected communities and regulatory bodies. By fostering an environment of openness, organizations can encourage collaboration and dialogue around algorithmic fairness, ultimately leading to more equitable outcomes.
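Documentation is easier to keep current and to share when it is structured. The sketch below, loosely inspired by the "model card" idea, records data sources, design choices, evaluation results, and known limitations as JSON. The class name, field names, and all example values are hypothetical, and the evaluation field is left empty rather than filled with invented results.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelDocumentation:
    """Minimal, machine-readable record of how a model was built and evaluated."""
    model_name: str
    intended_use: str
    data_sources: list[str]
    design_choices: list[str]
    # Evaluation results keyed by metric, then by group.
    evaluation: dict[str, dict[str, float]] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


doc = ModelDocumentation(
    model_name="resume-screener (hypothetical)",
    intended_use="Rank applications for human review; not for automatic rejection.",
    data_sources=["Historical applications (hypothetical)"],
    design_choices=["Zip code excluded as a feature to limit proxy discrimination"],
    known_limitations=["Few applicants over 60 in the training data"],
)
print(doc.to_json())
```

Because the record is plain JSON, the same file can be published to outside stakeholders and checked automatically, for example to confirm that per-group evaluation results are present before a release.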

Monitoring and Evaluating Bias in Automated Systems

Ongoing monitoring and evaluation are essential components of any strategy aimed at addressing algorithmic bias. Once an automated system is deployed, it is crucial to continuously assess its performance across different demographic groups to identify any emerging biases. This requires establishing feedback loops where users can report issues or discrepancies they observe in the system’s outputs.
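A monitor for a deployed system can be sketched as a sliding window of recent decisions per group. The class below assumes a binary approve/deny decision and flags any group whose recent approval rate falls below 80% of the best-treated group's rate; it also keeps a simple list of user-reported issues. The class name, thresholds, and window size are hypothetical choices.

```python
from collections import defaultdict, deque


class ParityMonitor:
    """Track recent decisions per group and flag groups whose approval rate
    falls below `min_ratio` times the best-treated group's rate."""

    def __init__(self, window=1000, min_ratio=0.8, min_count=30):
        self.min_ratio = min_ratio
        self.min_count = min_count  # skip groups with too few recent decisions
        self.decisions = defaultdict(lambda: deque(maxlen=window))
        self.user_reports = []  # issues reported by users of the system

    def record(self, group, approved):
        self.decisions[group].append(1 if approved else 0)

    def report_issue(self, group, description):
        self.user_reports.append((group, description))

    def flagged_groups(self):
        rates = {
            g: sum(d) / len(d)
            for g, d in self.decisions.items()
            if len(d) >= self.min_count
        }
        if len(rates) < 2 or max(rates.values()) == 0:
            return []
        best = max(rates.values())
        return sorted(g for g, r in rates.items() if r / best < self.min_ratio)


monitor = ParityMonitor(window=100, min_count=10)
for i in range(20):
    monitor.record("A", approved=i < 10)  # 50% approved
    monitor.record("B", approved=i < 6)   # 30% approved
print(monitor.flagged_groups())  # → ['B']
```

The bounded window means old decisions age out, so the monitor reacts to drift after deployment rather than averaging it away over the system's whole history.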

Regular audits should be conducted using both quantitative metrics and qualitative assessments to gauge the impact of algorithms on various populations. For instance, organizations might employ third-party auditors to evaluate their systems independently, ensuring objectivity in identifying biases that internal teams may overlook. By committing to regular evaluations and being responsive to findings, organizations can adapt their algorithms over time to mitigate bias effectively.

The Future of AI and Bias Reduction

The future of AI holds promise for reducing bias through advancements in technology and a growing awareness of ethical considerations among developers and organizations. As machine learning techniques evolve, there is potential for more sophisticated methods that inherently account for fairness during model training. Innovations such as explainable AI (XAI) aim to make algorithms more interpretable, allowing stakeholders to understand how decisions are made and identify potential biases more easily.
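For simple models, explanations can be exact. The sketch below explains one decision of a hypothetical linear scoring model by attributing to each feature its weight times the difference between the applicant's value and a baseline; for a linear model with the baseline set to the feature means, these attributions match SHAP values computed under a feature-independence assumption. The features, including `zip_code_risk` as a stand-in for a proxy variable, and all weights are invented for illustration.

```python
def explain_linear(weights, bias, x, baseline):
    """Explain one decision of a linear scoring model.

    Each feature's contribution is weight * (value - baseline value), so the
    contributions sum to score(x) - score(baseline).
    """
    score = bias + sum(w * x[f] for f, w in weights.items())
    contributions = {f: w * (x[f] - baseline[f]) for f, w in weights.items()}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


weights = {"years_experience": 0.5, "test_score": 0.03, "zip_code_risk": -2.0}
applicant = {"years_experience": 4, "test_score": 80, "zip_code_risk": 1.0}
average = {"years_experience": 5, "test_score": 75, "zip_code_risk": 0.2}
score, ranked = explain_linear(weights, bias=-1.0, x=applicant, baseline=average)
for feature, contribution in ranked:
    print(f"{feature:>18}: {contribution:+.2f}")
```

Here the proxy feature dominates the explanation, which is the kind of signal that could prompt a closer look at whether the model is penalizing applicants for where they live. More complex models need approximate explanation methods, whose outputs should be treated with correspondingly more caution.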

Furthermore, as public awareness of algorithmic bias increases, there will likely be greater demand for accountability from organizations deploying AI technologies. Regulatory frameworks may emerge that require companies to demonstrate their commitment to fairness and transparency in their algorithms. This shift could lead to a more equitable technological landscape where automated systems serve all members of society fairly and justly.

In conclusion, addressing algorithmic bias is a complex challenge that requires a concerted effort from multiple stakeholders across various sectors. By understanding the sources and impacts of bias, leveraging AI for identification and mitigation, implementing ethical frameworks, and committing to ongoing monitoring and evaluation, we can work towards a future where technology enhances equity rather than perpetuating discrimination.


FAQs

What is algorithmic bias?

Algorithmic bias refers to the systematic and repeatable errors in a computer system that create unfair outcomes, such as privileging one individual or group over another.

How can AI help reduce algorithmic bias in automated systems?

AI can help reduce algorithmic bias in automated systems through techniques such as fairness-aware machine learning and automated bias detection and mitigation, combined with diverse training data, so that algorithms do not unfairly favor or discriminate against certain groups.

What are some examples of algorithmic bias in automated systems?

Examples of algorithmic bias in automated systems include biased hiring algorithms that favor certain demographics, facial recognition systems that misidentify individuals based on their race, and predictive policing algorithms that disproportionately target minority communities.

Why is it important to reduce algorithmic bias in automated systems?

It is important to reduce algorithmic bias in automated systems because biased algorithms can perpetuate and exacerbate existing inequalities and injustices, leading to unfair treatment and outcomes for certain individuals or groups.

What are the challenges in reducing algorithmic bias in automated systems?

Challenges in reducing algorithmic bias in automated systems include identifying and understanding the sources of bias, developing effective techniques for detecting and mitigating bias, and ensuring that the solutions do not introduce new forms of bias.