Neuromorphic processors represent a significant shift in computing: they are designed to emulate the neural architecture of the human brain. Conventional processors execute instructions largely in sequence and move data back and forth between separate memory and compute units. Neuromorphic systems instead distribute computation across many simple, interconnected processing elements that operate in parallel, mirroring the complex interconnections found in biological neural networks. This approach can make data processing more efficient, particularly in tasks that require pattern recognition, sensory processing, and decision-making.

The term “neuromorphic” combines “neuro,” relating to the nervous system, and “morphic,” meaning shape or form, reflecting a design philosophy that seeks to replicate the functional characteristics of biological systems. The development of neuromorphic processors is rooted in the desire to create machines that can learn and adapt in ways similar to humans. Traditional computing architectures, based on the von Neumann model, keep memory and processing physically separate, so data must constantly shuttle between them over a shared pathway. This “von Neumann bottleneck” costs time and energy, and it becomes a significant limitation when handling large volumes of unstructured data or performing real-time analysis.

Neuromorphic processors, on the other hand, are engineered to process information much as neurons communicate through synapses: processing units exchange brief pulses, or spikes, and memory (the synaptic weights) sits alongside the computation rather than in a separate bank. This paradigm shift not only improves computational efficiency but also opens new avenues for artificial intelligence (AI) and machine learning applications.

Key Takeaways

- Neuromorphic processors are designed to emulate the structure and function of the human brain.

- They offer advantages like low power consumption and efficient parallel processing compared to traditional processors.

- These processors enhance AI and machine learning applications by enabling more brain-like computation.

- Challenges include hardware complexity and limited scalability in current neuromorphic designs.

- Ongoing research aims to overcome limitations, with promising future impacts on technology and society.

How Neuromorphic Processors Mimic the Human Brain

At the core of neuromorphic processors is their ability to replicate the fundamental operations of the human brain. The brain consists of approximately 86 billion neurons interconnected by trillions of synapses, forming a complex network that facilitates learning and memory. Neuromorphic processors utilize artificial neurons and synapses to create a similar architecture, enabling them to process information in a distributed manner.

Each artificial neuron can receive inputs from multiple sources, integrate these signals, and produce an output spike when its accumulated input crosses a threshold, much like a biological neuron. One of the key features of neuromorphic systems is event-driven processing. Traditional processors are synchronized by a global clock and process data in fixed cycles. Neuromorphic chips, by contrast, respond only when an incoming spike (an event) arrives, which can significantly reduce power consumption and increase efficiency.
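The integrate-and-threshold behavior described above is commonly modeled as a leaky integrate-and-fire (LIF) neuron. The Python sketch below is a simplified, discrete-time illustration. The `LIFNeuron` class, the leak factor, and the reset-to-zero rule are assumptions chosen for clarity. They are not the neuron model of any specific chip.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Minimal discrete-time leaky integrate-and-fire neuron (illustrative only)."""
    threshold: float = 1.0   # membrane potential at which the neuron fires
    leak: float = 0.9        # fraction of the potential retained each time step
    potential: float = 0.0   # current membrane potential

    def step(self, weighted_inputs: list[float]) -> bool:
        """Integrate one time step of inputs; return True if the neuron spikes."""
        # Decay the existing potential, then add this step's weighted inputs.
        self.potential = self.potential * self.leak + sum(weighted_inputs)
        if self.potential >= self.threshold:
            self.potential = 0.0  # assumed reset rule: back to zero after firing
            return True
        return False


neuron = LIFNeuron(threshold=1.0, leak=0.9)
inputs_per_step = [[0.3], [0.3], [0.0], [0.6], [0.2, 0.3]]
spikes = [neuron.step(x) for x in inputs_per_step]
print(spikes)  # [False, False, False, True, False]
```

In this run the potential rises over the first two steps and then starts to leak away. The larger input at step four pushes it over the threshold, which produces a single spike and a reset.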

This event-based approach allows for real-time processing of sensory data, making neuromorphic processors particularly well-suited for applications such as robotics and autonomous systems. For instance, a neuromorphic chip could process visual information from a camera in real time, enabling a robot to navigate its environment more effectively through more human-like perception.
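Neuromorphic vision systems are often paired with event-based cameras (dynamic vision sensors). These cameras report only the pixels whose brightness changes instead of transmitting full frames. The sketch below approximates that behavior by comparing two ordinary frames. Real sensors perform this comparison asynchronously inside each pixel's circuitry. The `frames_to_events` function and its threshold value are hypothetical.

```python
import math


def frames_to_events(prev_frame, next_frame, threshold=0.2):
    """Emit (row, col, polarity) events for pixels whose log-brightness
    changed by at least `threshold` between two frames (illustrative only)."""
    events = []
    for r, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for c, (p, n) in enumerate(zip(prev_row, next_row)):
            # Respond to relative (log) brightness change; the +1 avoids log(0).
            delta = math.log(n + 1) - math.log(p + 1)
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


prev = [[10, 10, 10],
        [10, 10, 10]]
nxt = [[10, 30, 10],
       [10, 10, 2]]
print(frames_to_events(prev, nxt))  # [(0, 1, 1), (1, 2, -1)]
```

Only two of the six pixels produce events. A downstream neuromorphic chip would therefore have far less data to process than it would with a full frame.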

Advantages of Neuromorphic Processors Over Traditional Processors

The advantages of neuromorphic processors over traditional computing architectures are manifold. One of the most significant benefits is their energy efficiency. Traditional processors consume substantial amounts of power, especially when performing complex calculations or processing large datasets.

In contrast, neuromorphic processors are designed to operate with minimal energy expenditure by leveraging their event-driven nature: parts of the chip that receive no spikes do little work. This efficiency is particularly crucial in mobile and embedded systems, where battery life is a critical consideration.

Moreover, neuromorphic processors excel at tasks that involve pattern recognition and sensory data processing. In image recognition or natural language processing, for example, conventional systems typically rely on deep learning models trained offline on large datasets. Some neuromorphic chips can instead adapt on the chip itself. They use local learning rules to update their synapses as new data arrives, without a full offline retraining cycle. This capability can shorten the path from new information to updated behavior and may enhance the robustness of AI systems in dynamic environments.
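One on-chip learning rule widely studied in neuromorphic research is spike-timing-dependent plasticity (STDP). Under STDP, a synapse is strengthened when the input neuron fires shortly before the output neuron and weakened when the order is reversed. The sketch below implements a basic pair-based form of the rule. The function name, learning rates, time constant, and weight bounds are illustrative assumptions rather than parameters of any particular chip.

```python
import math


def stdp_update(weight, t_pre, t_post, a_plus=0.01, a_minus=0.012,
                tau=20.0, w_min=0.0, w_max=1.0):
    """Pair-based STDP: strengthen the synapse if the presynaptic spike
    precedes the postsynaptic spike, weaken it if it follows (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre before post: potentiation that shrinks as the time gap grows.
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        # Post before pre: depression that shrinks as the time gap grows.
        weight -= a_minus * math.exp(dt / tau)
    # Clip to a bounded range (an assumed convention for this sketch).
    return min(max(weight, w_min), w_max)


w = 0.5
w_after_causal = stdp_update(w, t_pre=10.0, t_post=15.0)
w_after_anticausal = stdp_update(w, t_pre=15.0, t_post=10.0)
print(round(w_after_causal, 4), round(w_after_anticausal, 4))  # 0.5078 0.4907
```

The update depends only on spike times that are available locally at the synapse. This locality is one reason rules of this kind suit on-chip learning.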

Applications of Neuromorphic Processors in AI and Machine Learning

The potential applications of neuromorphic processors in AI and machine learning are vast and varied. One prominent area is robotics, where these processors can enable machines to perceive and interact with their surroundings in a more human-like manner. This capability could help robots navigate complex environments and perform tasks that require fine motor skills.

Another significant application lies in healthcare, where neuromorphic processors could support diagnostic tools. Processing physiological data quickly and efficiently could allow for more timely interventions and personalized treatment plans. Additionally, neuromorphic systems can be integrated into wearable devices that monitor health metrics in real time. In these devices, their low power draw helps conserve battery life while providing valuable insights into an individual’s well-being.

Challenges and Limitations of Neuromorphic Processors

Despite their promising advantages, neuromorphic processors face several challenges and limitations that must be addressed before they can be widely adopted. One major hurdle is the complexity of programming these systems. Traditional programming paradigms may not be directly applicable to neuromorphic architectures, so new algorithms and frameworks tailored to their unique characteristics are needed. This requirement can pose a barrier for developers accustomed to conventional computing models.

Furthermore, while neuromorphic processors excel at specific tasks such as pattern recognition, they may not perform as well in areas where traditional processors shine. Tasks requiring high-precision arithmetic or extensive numerical computation, for example, may still be better suited to conventional architectures.
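Part of what makes neuromorphic programming unfamiliar is that numbers must generally be represented as spikes. One common scheme is rate coding, in which a value is represented by how often a neuron fires. The sketch below uses hypothetical `rate_encode` and `rate_decode` helpers. It encodes a value in [0, 1] as a random spike train and decodes it by counting spikes. The sketch also illustrates the precision point above. With N time steps, the decoded value can only take multiples of 1/N, so extra precision costs extra time and spikes.

```python
import random


def rate_encode(value, num_steps, rng):
    """Encode a value in [0, 1] as a spike train: at each step, emit a spike
    with probability equal to the value (Bernoulli rate coding)."""
    return [1 if rng.random() < value else 0 for _ in range(num_steps)]


def rate_decode(spike_train):
    """Recover the value as the fraction of steps that contained a spike."""
    return sum(spike_train) / len(spike_train)


rng = random.Random(42)  # fixed seed so the run is repeatable
value = 0.37
for steps in (10, 100, 10_000):
    decoded = rate_decode(rate_encode(value, steps, rng))
    # The error typically shrinks as the number of time steps grows.
    print(steps, decoded, abs(decoded - value))
```

Other schemes trade these costs differently. One example is encoding values in the precise timing of spikes.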

Additionally, neuromorphic technology is still evolving, and ongoing research is needed to enhance its capabilities and to address issues related to scalability and integration with existing systems.

Current Developments and Research in Neuromorphic Processors

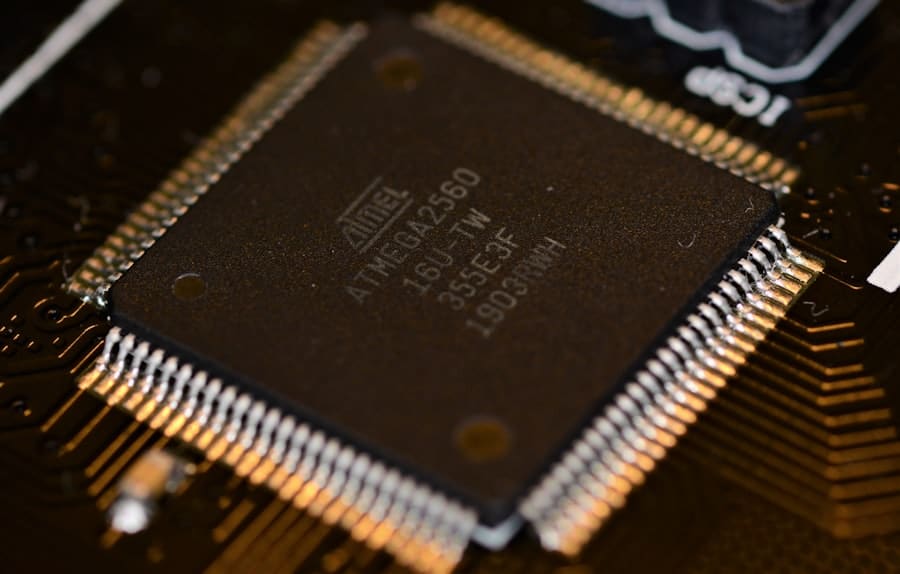

Research into neuromorphic processors has gained significant momentum in recent years, with various institutions and companies exploring innovative designs and applications. Notable examples include IBM’s TrueNorth chip, which features over a million programmable neurons and is designed for low-power cognitive computing tasks. Intel’s Loihi chip adds on-chip learning: its architecture lets synaptic weights adapt on the chip itself, enabling it to perform tasks such as visual recognition and sensor fusion.
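TrueNorth's neuron count follows from its tiled design. The chip contains 4,096 neurosynaptic cores, and each core connects 256 input axons to 256 neurons through a crossbar of synapses. The first part of the sketch below does that arithmetic. The second part is a deliberately simplified crossbar core in which a spike on an input axon adds that axon's row of weights to each neuron. The `CrossbarCore` class and its integer weights are hypothetical. Real TrueNorth cores use binary connections combined with a small set of per-neuron weight values.

```python
CORES = 4096            # neurosynaptic cores on a TrueNorth chip
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256    # inputs per core; each axon-neuron pair is one crossbar synapse

total_neurons = CORES * NEURONS_PER_CORE
total_synapses = CORES * AXONS_PER_CORE * NEURONS_PER_CORE
print(total_neurons, total_synapses)  # 1048576 268435456


class CrossbarCore:
    """Simplified crossbar core: a spike on input axon i adds row i of the
    weight matrix to every neuron's potential (not TrueNorth's exact model)."""

    def __init__(self, weights, threshold):
        self.weights = weights                  # weights[axon][neuron]
        self.threshold = threshold
        self.potentials = [0] * len(weights[0])

    def tick(self, active_axons):
        # Only the rows for axons that actually spiked are read (event-driven work).
        for axon in active_axons:
            for n, w in enumerate(self.weights[axon]):
                self.potentials[n] += w
        fired = [n for n, v in enumerate(self.potentials) if v >= self.threshold]
        for n in fired:
            self.potentials[n] = 0              # reset neurons that fired
        return fired


core = CrossbarCore(weights=[[1, 0, 2], [1, 1, 0]], threshold=2)
print(core.tick({0}))     # [2]
print(core.tick({0, 1}))  # [0, 2]
```

The 1,048,576 neurons account for the "over a million" figure. The synapse total, 2^28, is the number usually quoted as 256 million synapses.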

Academic institutions are also at the forefront of neuromorphic research, with projects aimed at understanding the underlying principles of brain function and translating these insights into computational models. For instance, the Human Brain Project in Europe sought to create detailed simulations of brain activity that could inform the design of neuromorphic systems. These collaborative efforts between academia and industry are crucial for advancing the field and unlocking the full potential of neuromorphic technology.

The Future of Neuromorphic Processors in Technology and Industry

Looking ahead, the future of neuromorphic processors appears promising as advancements continue to unfold across various sectors. In the realm of artificial intelligence, these processors could revolutionize how machines learn and interact with their environments. As they become more capable of processing complex sensory data in real time, we may witness significant improvements in autonomous vehicles, smart cities, and personalized AI assistants.

Moreover, industries such as agriculture could benefit from neuromorphic technology through enhanced monitoring systems that analyze environmental conditions and optimize resource usage. In healthcare, the integration of neuromorphic processors into diagnostic tools could lead to earlier detection of diseases and improved patient outcomes. As research progresses and new applications emerge, it is likely that neuromorphic processors will play an increasingly central role in shaping the future of technology.

The Potential Impact of Neuromorphic Processors on Society

The advent of neuromorphic processors holds transformative potential for society at large. By mimicking the intricate workings of the human brain, these systems offer a new paradigm for computing that prioritizes efficiency, adaptability, and real-time processing. As they find applications across diverse fields, from robotics to healthcare, their impact could extend beyond technological advancement to influence how we interact with machines and understand intelligence itself.

As we continue to explore the possibilities presented by neuromorphic technology, it is essential to consider not only its benefits but also its ethical implications. The integration of such advanced systems into everyday life raises questions about privacy, security, and the nature of human-machine collaboration. Addressing these concerns will be crucial as we navigate the evolving landscape shaped by neuromorphic processors and their potential to redefine our relationship with technology.


FAQs

What are neuromorphic processors?

Neuromorphic processors are specialized computing systems designed to mimic the architecture and functioning of the human brain. They use artificial neurons and synapses to process information in a way that is more efficient and parallel compared to traditional processors.

How do neuromorphic processors differ from traditional processors?

Unlike traditional processors that use sequential processing and separate memory and computation units, neuromorphic processors integrate memory and processing elements, enabling parallel data processing and lower power consumption, similar to biological neural networks.

Why are neuromorphic processors considered the next big leap in computing?

Neuromorphic processors offer significant advantages in energy efficiency, speed, and the ability to handle complex, unstructured data. They are particularly well-suited for artificial intelligence, machine learning, and sensory processing tasks, potentially revolutionizing how computers learn and adapt.

What are the main applications of neuromorphic processors?

Neuromorphic processors are being developed and tested for areas such as robotics, autonomous vehicles, real-time sensory data processing, brain-machine interfaces, and advanced AI systems that require efficient pattern recognition and decision-making capabilities.

Are neuromorphic processors commercially available?

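Only to a limited extent. Research chips such as Intel’s Loihi and IBM’s TrueNorth have been made available mainly to research partners rather than sold as general-purpose products, and a small number of companies offer neuromorphic chips for commercial edge applications. While the technology is still emerging, these processors are increasingly used in experimental and early commercial AI applications.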

What challenges do neuromorphic processors face?

Challenges include developing standardized programming models, scaling hardware for broader applications, integrating with existing computing infrastructure, and improving the robustness and reliability of neuromorphic systems.

How do neuromorphic processors impact energy consumption?

Neuromorphic processors can significantly reduce energy consumption by mimicking the brain’s efficient processing methods. With event-driven computation and sparse activity, only the parts of the circuit that receive spikes do meaningful work. On suitable workloads, this leads to lower power usage than conventional processors achieve.
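A rough way to see why sparse, event-driven activity saves energy is to count synaptic operations. The sketch below compares two layers. A clock-driven layer processes every synapse on every step, while an event-driven layer processes a synapse only when its input spikes. The layer sizes and the 2% activity level are assumptions. Operation counts are also only a proxy, since real energy use depends on memory access, spike routing, and static leakage as well.

```python
import random


def dense_ops(num_inputs, num_neurons, num_steps):
    """Clock-driven dense update: every synapse is processed on every step."""
    return num_inputs * num_neurons * num_steps


def event_driven_ops(spike_raster, num_neurons):
    """Event-driven update: an input's synapses are processed only when it spikes."""
    return sum(sum(step) for step in spike_raster) * num_neurons


rng = random.Random(0)
num_inputs, num_neurons, num_steps = 1000, 100, 50
activity = 0.02  # assumed: each input spikes in about 2% of time steps
raster = [[1 if rng.random() < activity else 0 for _ in range(num_inputs)]
          for _ in range(num_steps)]

dense = dense_ops(num_inputs, num_neurons, num_steps)
sparse = event_driven_ops(raster, num_neurons)
print(dense, sparse, f"{sparse / dense:.1%}")
```

With each input active in about 2% of steps, the event-driven count comes out near 2% of the dense count. As activity rises, the advantage shrinks.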

Can neuromorphic processors replace traditional CPUs and GPUs?

Neuromorphic processors are not designed to replace CPUs and GPUs entirely but to complement them by handling specific tasks like pattern recognition and sensory data processing more efficiently. They are part of a broader ecosystem of specialized computing technologies.

What is the future outlook for neuromorphic computing?

The future of neuromorphic computing is promising, with ongoing research aimed at improving hardware design, software frameworks, and applications. As technology matures, neuromorphic processors are expected to play a critical role in advancing AI and enabling new computing paradigms.