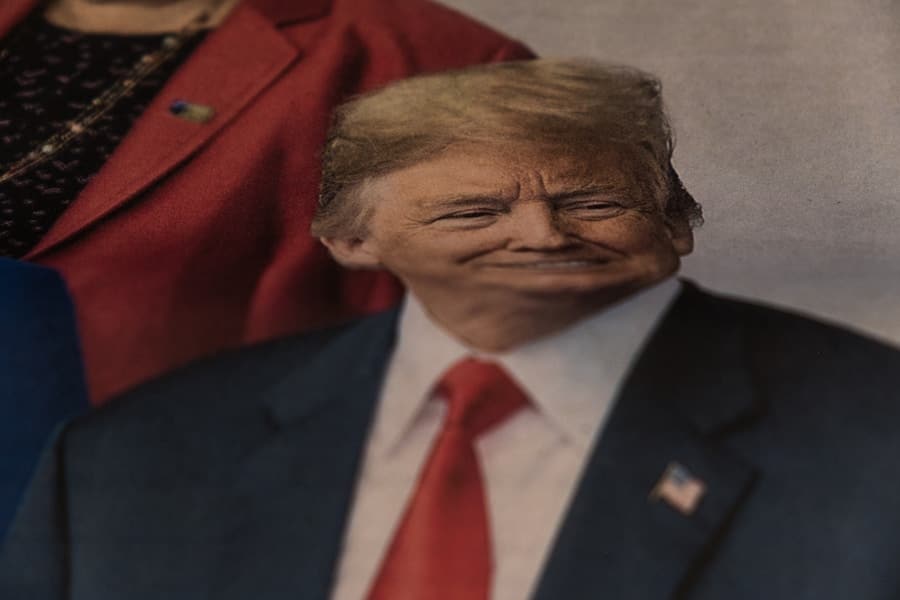

Deepfakes are hyper-realistic audio and video content created by algorithms to convincingly mimic real individuals. The technology relies on deep learning techniques, particularly generative adversarial networks (GANs), to produce altered media that can be hard to distinguish from authentic recordings. The implications extend beyond entertainment: deepfakes pose serious threats to personal reputations, political stability, and societal trust. For instance, a deepfake video of a public figure making inflammatory statements can circulate rapidly on social media, potentially influencing public opinion and inciting unrest.

Misinformation, by contrast, covers a broader spectrum of false or misleading information, whether it is spread intentionally or unintentionally. Deepfakes used to deceive are one specific type of misinformation, but the term also includes fabricated news articles, manipulated images, and misleading statistics. The proliferation of misinformation has been exacerbated by the rise of social media platforms, where content can be shared virally without rigorous fact-checking.

Together, deepfakes and misinformation can undermine democratic processes, erode trust in institutions, and create divisions within society. As technology continues to evolve, understanding the nuances of these phenomena becomes increasingly critical for individuals and organizations alike.

Key Takeaways

- Deepfakes are realistic but fake videos or audio created using AI technology, often used to spread misinformation.

- AI is increasingly being used to detect deepfakes, with advancements in machine learning and facial recognition technology.

- AI analyzes facial and vocal cues, such as blinking patterns and speech inconsistencies, to identify deepfakes.

- AI plays a crucial role in identifying misinformation and fake news by analyzing patterns and sources of information.

- Despite advancements, AI still faces challenges and limitations in detecting deepfakes and misinformation, such as the rapid evolution of deepfake technology.

The Rise of AI in Detecting Deepfakes

As deepfake technology has advanced, so too has the development of artificial intelligence tools designed to detect these manipulations. The urgency for such tools has grown in tandem with the increasing prevalence of deepfakes across various platforms. Researchers and tech companies have recognized that traditional methods of verification are insufficient in the face of sophisticated AI-generated content. Consequently, a new wave of AI-driven detection systems has emerged, employing machine learning algorithms to analyze media for signs of manipulation.

One notable example is the work being done by organizations like Facebook and Google, which have invested heavily in developing AI models capable of identifying deepfakes. These models are trained on vast datasets containing both authentic and manipulated media, allowing them to learn the subtle differences that may indicate tampering. For instance, they may analyze inconsistencies in facial movements or unnatural audio synchronization that could suggest a video has been altered. As these technologies continue to evolve, they promise to enhance our ability to discern genuine content from deceptive fabrications.
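To make the idea of learning from labeled authentic and manipulated examples concrete, here is a minimal sketch of a classifier trained in plain Python. It is an illustration only, not the method used by any company named above: real detectors typically use deep networks on raw frames and audio. The two features (`lip_sync_error`, `blink_rate_deviation`), the toy values, and the training settings are all assumptions made up for this example.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """Fit logistic regression weights with batch gradient descent."""
    n_features = len(samples[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(samples, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y  # gradient of log loss with respect to the logit
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_proba(weights, bias, x):
    """Estimated probability that feature vector x comes from manipulated media."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Made-up feature vectors: [lip_sync_error, blink_rate_deviation], scaled to 0..1.
# These are hypothetical values, not measurements from real media.
AUTHENTIC = [[0.10, 0.20], [0.15, 0.10], [0.20, 0.25], [0.05, 0.15]]
MANIPULATED = [[0.70, 0.80], [0.85, 0.60], [0.60, 0.90], [0.90, 0.75]]

samples = AUTHENTIC + MANIPULATED
labels = [0] * len(AUTHENTIC) + [1] * len(MANIPULATED)  # 1 = manipulated
weights, bias = train_logistic(samples, labels)

if __name__ == "__main__":
    print(predict_proba(weights, bias, [0.80, 0.80]))  # high-error clip
    print(predict_proba(weights, bias, [0.10, 0.10]))  # low-error clip
```

With this toy data, a clip with large feature values scores above 0.5 and a clip with small values scores below it. The same train-then-score pattern applies at scale, only with far richer inputs and models.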

How AI Analyzes Facial and Vocal Cues to Identify Deepfakes

AI detection systems use a variety of techniques to scrutinize both facial and vocal cues in order to identify deepfakes. Facial analysis often involves examining the micro-expressions and movements of an individual’s face during speech or action. For example, AI algorithms can detect discrepancies between how a person’s lips move and the audio being played. If the synchronization is off, or if the facial expressions do not match the emotional tone of the spoken words, the video may have been manipulated.

In addition to facial cues, vocal analysis plays a crucial role in detecting deepfakes. AI systems can analyze voice patterns, pitch, tone, and even breathing patterns to assess authenticity. For instance, a voice that is overly smooth or lacks the natural fluctuations of human speech may raise red flags for detection algorithms. By combining these two analyses, facial movements and vocal characteristics, AI can build a profile of what authentic human behavior looks and sounds like, which improves its ability to identify deepfake content.
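The two cues described above can be sketched as simple signal checks. The example below assumes that per-frame mouth opening, audio loudness, and pitch have already been extracted by some upstream tool (not shown). The function names, the thresholds `min_sync` and `min_pitch_cv`, and the sample values are hypothetical choices for illustration, not calibrated settings from any real detector.

```python
import math
from statistics import mean, pstdev


def pearson(xs, ys):
    """Pearson correlation of two equal-length series; 0.0 if either is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def lip_sync_score(mouth_opening, audio_energy):
    """Closer to 1.0 means mouth movement tracks loudness frame by frame."""
    return pearson(mouth_opening, audio_energy)


def pitch_variation(pitch_hz):
    """Coefficient of variation of pitch; very low values suggest an overly smooth voice."""
    avg = mean(pitch_hz)
    return pstdev(pitch_hz) / avg if avg else 0.0


def suspicion_flags(mouth_opening, audio_energy, pitch_hz,
                    min_sync=0.5, min_pitch_cv=0.02):
    """Return the list of cues that look suspicious under the assumed thresholds."""
    flags = []
    if lip_sync_score(mouth_opening, audio_energy) < min_sync:
        flags.append("lip_sync_mismatch")
    if pitch_variation(pitch_hz) < min_pitch_cv:
        flags.append("flat_pitch")
    return flags


# Made-up per-frame measurements for illustration only.
MOUTH = [0.1, 0.4, 0.8, 0.5, 0.2, 0.1]
ENERGY_IN_SYNC = [0.2, 0.5, 0.9, 0.6, 0.3, 0.2]
ENERGY_OUT_OF_SYNC = [0.9, 0.6, 0.2, 0.5, 0.8, 0.9]
PITCH_NATURAL = [118, 125, 140, 131, 122, 115]
PITCH_FLAT = [120, 120.5, 120, 119.5, 120, 120]

if __name__ == "__main__":
    print(suspicion_flags(MOUTH, ENERGY_IN_SYNC, PITCH_NATURAL))
    print(suspicion_flags(MOUTH, ENERGY_OUT_OF_SYNC, PITCH_FLAT))
```

Returning named flags rather than a single score keeps the facial and vocal evidence separate, so a reviewer can see which cue triggered the alert.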

The Role of AI in Identifying Misinformation and Fake News

Beyond detecting deepfakes, AI is also instrumental in combating misinformation and fake news more broadly.

For example, algorithms can assess the credibility of sources by cross-referencing claims with established databases or fact-checking organizations.
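As a minimal sketch of cross-referencing a claim against a fact-checking database, the example below matches an incoming claim to stored entries by word overlap (Jaccard similarity). The `FACT_CHECKS` list, the stopword set, and the 0.5 threshold are hypothetical stand-ins. Production systems generally rely on semantic matching rather than shared words, so treat this as an illustration of the lookup step only.

```python
import re

# Hypothetical, hand-written entries standing in for a fact-checking database.
FACT_CHECKS = [
    ("the eiffel tower is located in paris", "supported"),
    ("the great wall of china is visible from the moon with the naked eye", "refuted"),
]

# Small assumed stopword list so common words do not dominate the overlap.
STOPWORDS = {"the", "is", "in", "of", "a", "an", "from", "with", "to", "can", "be", "you"}


def tokens(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def jaccard(a, b):
    """Size of the intersection divided by size of the union; 0.0 for empty sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def check_claim(claim, threshold=0.5):
    """Return (verdict, score) for the closest stored entry, or 'unverified'."""
    claim_tokens = tokens(claim)
    best_verdict, best_score = "unverified", 0.0
    for text, verdict in FACT_CHECKS:
        score = jaccard(claim_tokens, tokens(text))
        if score > best_score:
            best_verdict, best_score = verdict, score
    if best_score < threshold:
        return "unverified", best_score
    return best_verdict, best_score


if __name__ == "__main__":
    print(check_claim("You can see the Great Wall of China from the Moon with the naked eye"))
    print(check_claim("Bananas are a type of berry"))
```

A claim with no close match comes back as "unverified" rather than true or false, which leaves the final call to a human fact-checker.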

This capability is particularly valuable in an era where sensational headlines often overshadow factual reporting. Moreover, AI can track the spread of misinformation across social media platforms by analyzing user interactions and engagement metrics. By identifying which posts are gaining traction and how they are being shared, AI can help pinpoint potential misinformation hotspots.

This proactive approach enables platforms to intervene before false narratives gain widespread acceptance. For instance, Twitter (now X) has used automated systems to flag tweets containing potentially misleading information for further review by human moderators. Such measures are essential in maintaining the integrity of information shared online.
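One simple way to picture tracking spread through engagement metrics is to compare a post's latest hourly share count with its earlier average and queue sharp spikes for human review. The sketch below does exactly that. The `Post` structure, the thresholds `min_ratio` and `min_latest_shares`, and the sample counts are assumptions for illustration and do not describe any platform's actual system.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Post:
    post_id: str
    shares_per_hour: list  # hourly share counts, oldest first


def growth_ratio(post):
    """Latest hour's shares divided by the average of all earlier hours."""
    counts = post.shares_per_hour
    if len(counts) < 2:
        return 0.0
    baseline = mean(counts[:-1])
    latest = counts[-1]
    if baseline == 0:
        return float("inf") if latest > 0 else 0.0
    return latest / baseline


def flag_for_review(posts, min_ratio=3.0, min_latest_shares=100):
    """Return IDs of posts that are both spiking and large enough to matter."""
    flagged = []
    for post in posts:
        latest = post.shares_per_hour[-1] if post.shares_per_hour else 0
        if latest >= min_latest_shares and growth_ratio(post) >= min_ratio:
            flagged.append(post.post_id)
    return flagged


# Made-up engagement data for illustration only.
POSTS = [
    Post("a", [40, 45, 50, 48]),   # steady sharing
    Post("b", [20, 30, 60, 400]),  # sudden spike at meaningful volume
    Post("c", [1, 1, 2, 10]),      # spike, but too small to prioritize
]

if __name__ == "__main__":
    print(flag_for_review(POSTS))
```

The spike check only decides what reviewers look at first. It says nothing about whether a post is false, so the output is a review queue, not a verdict.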

Challenges and Limitations of AI in Detecting Deepfakes and Misinformation

Despite the advancements in AI detection technologies, several challenges and limitations persist. One significant hurdle is the continuous evolution of deepfake technology itself. As detection algorithms become more sophisticated, so too do the methods used to create deepfakes. This cat-and-mouse game means that detection tools must constantly adapt to new techniques employed by malicious actors. For instance, some deepfake creators have begun using advanced techniques like style transfer or neural rendering to produce even more convincing content that may evade current detection methods.

Another challenge lies in the potential for false positives and false negatives in AI detection systems. A false positive occurs when genuine content is incorrectly flagged as manipulated, while a false negative happens when a deepfake goes undetected. Both scenarios can have serious consequences: false positives can damage reputations or lead to unwarranted censorship, while false negatives can allow harmful misinformation to spread unchecked. Striking a balance between sensitivity and specificity in detection algorithms remains an ongoing challenge for researchers.
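The trade-off between sensitivity and specificity can be worked through on a small example. The sketch below counts true and false positives and negatives for a set of detector scores at three decision thresholds. The scores and labels are made-up values chosen to show the effect, not results from any real detector.

```python
def confusion_counts(scores, labels, threshold):
    """Count (tp, fp, tn, fn) when scores at or above threshold are called fake."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_fake = score >= threshold
        if predicted_fake and label == 1:
            tp += 1  # deepfake correctly flagged
        elif predicted_fake and label == 0:
            fp += 1  # genuine content wrongly flagged (false positive)
        elif not predicted_fake and label == 0:
            tn += 1  # genuine content correctly passed
        else:
            fn += 1  # deepfake missed (false negative)
    return tp, fp, tn, fn


def sensitivity_specificity(scores, labels, threshold):
    """Sensitivity = share of fakes caught; specificity = share of genuine items passed."""
    tp, fp, tn, fn = confusion_counts(scores, labels, threshold)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity


# Made-up detector scores (higher = more likely fake); label 1 = deepfake, 0 = genuine.
SCORES = [0.95, 0.80, 0.65, 0.40, 0.70, 0.30, 0.20, 0.10]
LABELS = [1, 1, 1, 1, 0, 0, 0, 0]

if __name__ == "__main__":
    for threshold in (0.25, 0.50, 0.75):
        print(threshold, sensitivity_specificity(SCORES, LABELS, threshold))
```

On this toy data, a threshold of 0.25 catches every deepfake but wrongly flags half of the genuine items (sensitivity 1.0, specificity 0.5). A threshold of 0.75 flags no genuine items but misses half of the deepfakes (0.5, 1.0), and 0.50 sits in between (0.75, 0.75). Moving the threshold trades one kind of error for the other rather than removing either.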

Ethical Considerations in Using AI to Combat Deepfakes and Misinformation

The deployment of AI technologies to combat deepfakes and misinformation raises several ethical considerations that must be addressed. One primary concern is the potential for overreach in content moderation practices. As platforms implement AI-driven detection systems, there is a risk that legitimate content may be censored or removed based on inaccurate assessments by algorithms. This could infringe upon free speech rights and stifle open discourse on important issues.

Additionally, there is the question of accountability when it comes to AI decision-making processes. If an AI system incorrectly identifies content as misleading or manipulative, who bears responsibility for the consequences? The opacity of many AI algorithms complicates this issue further; users may not understand how decisions are made or what criteria are used for detection. Establishing clear guidelines and accountability measures is essential to ensure that AI technologies are used responsibly and ethically in the fight against misinformation.

The Future of AI in Fighting Deepfakes and Misinformation

Looking ahead, the future of AI in combating deepfakes and misinformation appears promising yet complex. As technology continues to advance, we can expect more refined detection tools that leverage cutting-edge machine learning techniques.

Moreover, collaboration between tech companies, governments, and civil society organizations will be crucial in developing comprehensive strategies to address these challenges. By pooling resources and expertise, stakeholders can create more robust frameworks for identifying and mitigating the impact of deepfakes and misinformation. Initiatives aimed at promoting transparency in AI algorithms will also play a vital role in fostering public trust in these technologies as they become integral components of our information ecosystem.

The Importance of Media Literacy in Conjunction with AI Detection

While AI technologies offer powerful tools for detecting deepfakes and misinformation, they should not be viewed as a panacea for these issues. Media literacy remains an essential component in equipping individuals with the skills necessary to critically evaluate information sources and discern credible content from deceptive narratives. Educational initiatives aimed at enhancing media literacy can empower users to approach information with skepticism and discernment.

Incorporating media literacy into educational curricula can help foster a generation that is better equipped to navigate the complexities of digital information landscapes. By teaching individuals how to verify sources, recognize bias, and understand the mechanics behind deepfake technology, we can cultivate a more informed public capable of resisting manipulation. Ultimately, combining AI detection capabilities with robust media literacy initiatives will create a more resilient society better prepared to confront the challenges posed by deepfakes and misinformation in an increasingly digital world.


FAQs

What is AI?

AI, or artificial intelligence, refers to machines performing tasks that normally require human intelligence, such as learning, problem-solving, and decision-making.

What are deepfakes?

Deepfakes are synthetic media in which a person in an existing image or video is replaced with someone else’s likeness, or a person’s voice is imitated, using AI technology. They can be used to create misleading or false information.

How does AI help in identifying deepfakes?

AI can be used to detect deepfakes by analyzing patterns and inconsistencies in the media, such as unnatural facial movements, mismatched audio and visual elements, and other anomalies that may indicate manipulation.

What role does AI play in combating misinformation?

AI can be used to analyze large volumes of data and identify patterns of misinformation, such as fake news or misleading content. It can also help in fact-checking and verifying the authenticity of information.

What are the limitations of AI in identifying deepfakes and misinformation?

Detection tools keep improving, but they still struggle with highly sophisticated deepfakes and misinformation, in part because deepfake techniques evolve quickly. AI algorithms can also produce false positives and false negatives, may carry biases, and have difficulty understanding context and nuance.