In a rapidly evolving technology landscape, digital contracts have emerged as a pivotal innovation, reshaping how agreements are formed, executed, and enforced. The most automated of them, smart contracts, use software to streamline the contractual process. Unlike traditional contracts, which rely on paper documentation and manual enforcement, smart contracts express the terms of an agreement in code and execute automatically when predetermined conditions are met.

This shift can improve efficiency and reduce the human error and misinterpretation that are common pitfalls of conventional contract management. However, the rise of digital contracts also brings significant challenges, particularly concerning accountability in artificial intelligence (AI) systems. As AI increasingly underpins the execution and management of these contracts, questions arise about who is responsible when things go wrong.

The complexity of AI decision-making can obscure accountability, making it difficult to determine liability when a system fails or a dispute arises. Addressing this intersection of digital contracts and AI accountability requires understanding both the technology's capabilities and the legal frameworks that govern it. Robust accountability mechanisms are therefore essential to foster trust in, and the reliability of, digital transactions.

Key Takeaways

- Digital contracts and AI accountability are becoming increasingly important in today’s technology-driven world.

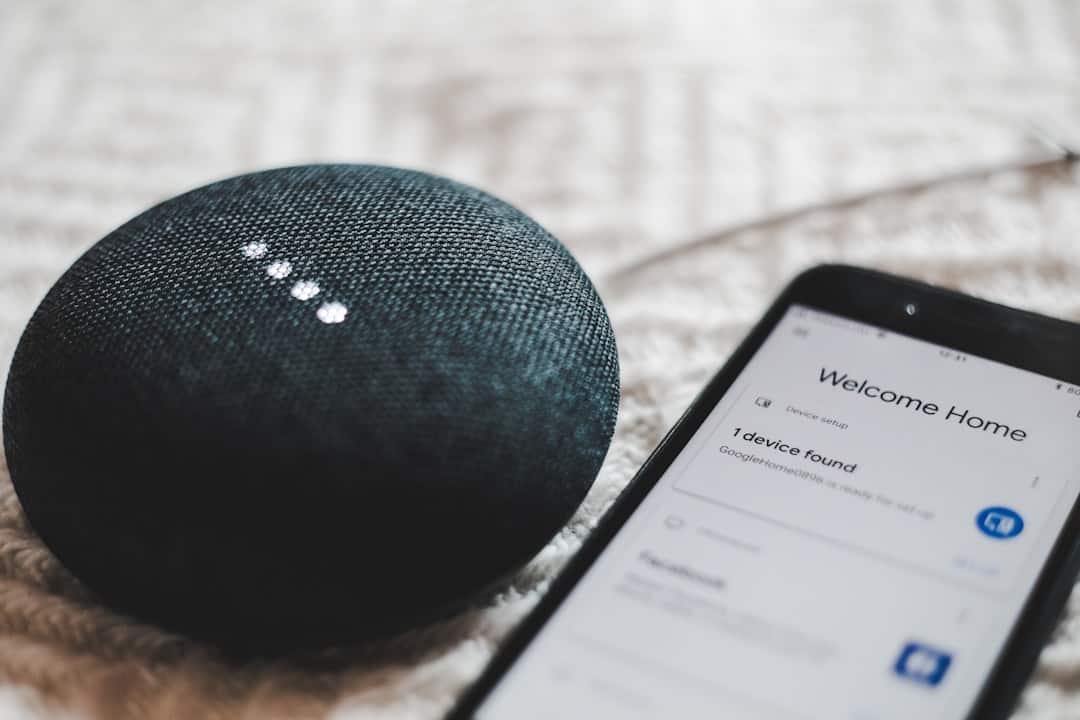

- Blockchain technology plays a crucial role in ensuring the security and transparency of digital contracts.

- Implementing transparency and auditability in AI systems is essential for building trust and accountability.

- Legal and ethical considerations are paramount in ensuring accountability in AI systems.

- Standardized protocols for digital contracts are crucial for ensuring consistency and reliability in transactions.

The Role of Blockchain Technology in Digital Contracts

Blockchain technology serves as a foundation for many digital contracts, providing a decentralized ledger that is extremely difficult to alter once records have been written. Transactions are grouped into blocks, and each block stores a cryptographic hash of the block before it, so changing any earlier record would invalidate every block that follows. This makes tampering evident and significantly reduces the risk of fraud or manipulation. Depending on the network, the resulting chain of records can be read by anyone (public blockchains) or only by authorized participants (permissioned blockchains).

This transparency not only builds trust among the parties but also simplifies auditing, because every action taken under the contract can be traced back to its origin. Moreover, many blockchains, such as Ethereum, support smart contracts, which automate contract execution without intermediaries. In a real estate transaction, for instance, a smart contract could transfer a tokenized property title to the buyer automatically once payment is confirmed on the blockchain.

This could remove the need for a traditional escrow service and shorten transaction times considerably. However, blockchain technology is not without its challenges. Issues such as scalability, energy consumption (especially on proof-of-work networks), and regulatory compliance must be addressed before blockchain can realize its full potential for digital contracts.
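To make the real estate example concrete, here is a minimal Python sketch of the escrow logic such a contract automates. It is not code for any real blockchain platform (production smart contracts are usually written in a platform language such as Solidity), and the class name `PropertyEscrow`, its fields, and the integer `deadline` standing in for a block height or timestamp are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum, auto


class EscrowState(Enum):
    AWAITING_PAYMENT = auto()
    COMPLETED = auto()
    REFUNDED = auto()


@dataclass
class PropertyEscrow:
    """Hypothetical escrow contract: title moves to the buyer only once the full price is paid."""

    seller: str
    buyer: str
    price: int     # in the smallest currency unit, to avoid floating-point rounding
    deadline: int  # hypothetical stand-in for a block height or timestamp
    paid: int = 0
    state: EscrowState = EscrowState.AWAITING_PAYMENT
    title_holder: str = ""

    def __post_init__(self) -> None:
        # The seller holds the title until the contract's condition is met.
        self.title_holder = self.seller

    def deposit(self, sender: str, amount: int, now: int) -> None:
        if self.state is not EscrowState.AWAITING_PAYMENT:
            raise ValueError("escrow is already closed")
        if sender != self.buyer:
            raise PermissionError("only the buyer can pay into this escrow")
        if now > self.deadline:
            raise ValueError("payment deadline has passed")
        if amount <= 0 or self.paid + amount > self.price:
            raise ValueError("invalid payment amount")
        self.paid += amount
        # Condition met: the title transfers automatically, with no intermediary step.
        if self.paid == self.price:
            self.title_holder = self.buyer
            self.state = EscrowState.COMPLETED

    def refund(self, now: int) -> int:
        # If the deadline passes before full payment, the deal unwinds and the buyer is repaid.
        if self.state is not EscrowState.AWAITING_PAYMENT or now <= self.deadline:
            raise ValueError("refund is not available")
        refunded, self.paid = self.paid, 0
        self.state = EscrowState.REFUNDED
        return refunded


deal = PropertyEscrow(seller="alice", buyer="bob", price=300_000, deadline=100)
deal.deposit("bob", 100_000, now=10)
print(deal.title_holder)                   # alice
deal.deposit("bob", 200_000, now=20)
print(deal.title_holder, deal.state.name)  # bob COMPLETED
```

Rejecting overpayment and keeping amounts in integer units are design choices of this sketch; a real deployment would also have to connect the on-chain title to the legal land registry, which code alone cannot do.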

As organizations explore these technologies, they must also consider how to integrate them with existing legal frameworks to ensure enforceability and accountability.
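The way each record is linked to the one before it, described at the start of this section, can be illustrated with a short Python sketch. It shows only the hash-linking that makes tampering detectable and deliberately omits what a real blockchain also needs, such as replication across nodes and a consensus mechanism. The `Ledger` and `Record` names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class Record:
    index: int
    data: dict
    prev_hash: str
    digest: str


def compute_digest(index: int, data: dict, prev_hash: str) -> str:
    # Deterministic serialization (sorted keys) so every participant computes the same digest.
    payload = json.dumps({"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class Ledger:
    """Hypothetical append-only ledger; each record commits to the digest of the one before it."""

    def __init__(self) -> None:
        self.records: list[Record] = []

    def append(self, data: dict) -> Record:
        index = len(self.records)
        prev_hash = self.records[-1].digest if self.records else GENESIS_PREV_HASH
        record = Record(index, data, prev_hash, compute_digest(index, data, prev_hash))
        self.records.append(record)
        return record

    def verify(self) -> bool:
        # Recompute every digest and check each link; any edited record breaks the chain.
        expected_prev = GENESIS_PREV_HASH
        for i, record in enumerate(self.records):
            if record.index != i or record.prev_hash != expected_prev:
                return False
            if record.digest != compute_digest(record.index, record.data, record.prev_hash):
                return False
            expected_prev = record.digest
        return True


ledger = Ledger()
ledger.append({"type": "payment", "from": "bob", "to": "alice", "amount": 300_000})
ledger.append({"type": "title_transfer", "to": "bob"})
print(ledger.verify())                # True
ledger.records[0].data["amount"] = 1  # tamper with an earlier record
print(ledger.verify())                # False
```

Because each digest covers the previous record's digest, an auditor only needs to recompute the chain to detect that a past entry was altered.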

Implementing Transparency and Auditability in AI Systems

Transparency and auditability are critical components in ensuring accountability within AI systems, particularly when these systems are employed in conjunction with digital contracts. The opaque nature of many AI algorithms can lead to a lack of understanding regarding how decisions are made, which poses significant risks in contractual contexts. To mitigate these risks, organizations must prioritize the development of AI systems that are not only effective but also interpretable.

This means creating models whose rationale stakeholders can understand, which fosters trust and makes oversight possible. One approach to enhancing transparency is explainable AI (XAI). XAI methods, such as feature-attribution techniques like SHAP and LIME, provide insight into how an AI system reached a decision, typically by estimating how much each input contributed to a particular output.

For example, if an AI system denies a loan application based on certain criteria, XAI can elucidate which factors contributed to that decision and how they were weighted. This level of transparency is crucial when AI systems are integrated into digital contracts, as it allows parties to verify that decisions align with agreed-upon terms. Furthermore, implementing robust audit trails within AI systems can enable organizations to track changes over time and assess compliance with contractual obligations.
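For the loan example, the idea behind feature attribution can be shown with a model simple enough to explain exactly. The Python sketch below assumes a hypothetical linear scoring model with made-up feature names and weights; for such a model, each feature's contribution relative to a baseline (here, an "average applicant") is just its weight times its difference from the baseline. Tools like SHAP and LIME approximate this kind of breakdown for models that are not linear.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical loan-scoring model: score = bias + sum(weight * feature)."""

    weights: dict[str, float]
    bias: float
    threshold: float  # the application is approved when score >= threshold

    def score(self, applicant: dict[str, float]) -> float:
        return self.bias + sum(w * applicant[name] for name, w in self.weights.items())

    def approve(self, applicant: dict[str, float]) -> bool:
        return self.score(applicant) >= self.threshold

    def explain(self, applicant: dict[str, float], baseline: dict[str, float]) -> list[tuple[str, float]]:
        # Each feature's contribution relative to a baseline applicant. For a linear model the
        # contributions add up (up to float rounding) to score(applicant) - score(baseline).
        contributions = [
            (name, w * (applicant[name] - baseline[name])) for name, w in self.weights.items()
        ]
        # Largest effects first, so the main reasons for the decision are listed at the top.
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Made-up weights and applicants, for illustration only.
model = LinearScorer(
    weights={"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.5},
    bias=0.5,
    threshold=1.0,
)
average_applicant = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}
applicant = {"income_k": 40.0, "debt_ratio": 0.6, "late_payments": 3.0}

print(model.approve(applicant))  # False
for name, contribution in model.explain(applicant, average_applicant):
    print(f"{name}: {contribution:+.2f}")
# late_payments: -1.00
# debt_ratio: -0.90
# income_k: -0.40
```

An explanation in this form lets both parties check whether the factors driving a denial are ones the contract actually permits the system to use.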

Legal and Ethical Considerations in AI Accountability

The intersection of AI technology and digital contracts raises numerous legal and ethical considerations that must be navigated carefully. One primary concern is the question of liability: if an AI system makes a decision that results in harm or loss, who is held accountable? Traditional legal frameworks often struggle to address this issue due to the autonomous nature of AI systems.

As such, there is an urgent need for new legal standards that clarify responsibility in cases involving AI-driven decisions. Ethically, organizations must grapple with the implications of deploying AI systems in contractual contexts. The potential for bias in AI algorithms can lead to unfair treatment of certain individuals or groups, raising questions about equity and justice.

For instance, if an AI system used in hiring processes inadvertently discriminates against candidates based on race or gender due to biased training data, it not only violates ethical norms but may also contravene anti-discrimination laws. Therefore, organizations must implement rigorous testing and validation processes to ensure that their AI systems operate fairly and transparently. Additionally, engaging stakeholders in discussions about ethical AI use can help foster a culture of accountability and responsibility.
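One concrete form of the testing mentioned above is to compare selection rates across groups. The Python sketch below divides each group's selection rate by the highest group's rate and flags ratios under 0.8, following the "four-fifths rule" from the U.S. Uniform Guidelines on Employee Selection Procedures. That rule is a screening heuristic for adverse impact, not a legal finding of discrimination; the function names, group labels, and counts here are hypothetical.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of candidates selected in each group; `outcomes` holds (group, selected) pairs."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(outcomes: list[tuple[str, bool]], threshold: float = 0.8) -> dict[str, tuple[float, bool]]:
    """Each group's rate divided by the highest group's rate, flagged if below `threshold` (4/5)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: (rate / highest, rate / highest < threshold) for group, rate in rates.items()}


# Hypothetical screening results: group A 50/100 selected, group B 30/100 selected.
outcomes = [("A", True)] * 50 + [("A", False)] * 50 + [("B", True)] * 30 + [("B", False)] * 70
for group, (ratio, flagged) in impact_ratios(outcomes).items():
    print(f"{group}: ratio={ratio:.2f} flagged={flagged}")
# A: ratio=1.00 flagged=False
# B: ratio=0.60 flagged=True
```

A flagged ratio is a signal to investigate the model and its training data, not proof of bias on its own.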

The Importance of Standardized Protocols for Digital Contracts

As digital contracts gain traction across various industries, the establishment of standardized protocols becomes increasingly vital. Standardization can facilitate interoperability between different systems and platforms, ensuring that digital contracts can be executed seamlessly across diverse environments. Without common standards, organizations may face challenges related to compatibility and integration, which could hinder the widespread adoption of digital contracts.

Moreover, standardized protocols can enhance legal clarity by providing a consistent framework for interpreting digital contracts. This is particularly important given the varying legal interpretations of digital agreements across jurisdictions. By developing universally accepted standards for digital contracts—such as those governing data privacy, consent mechanisms, and dispute resolution—stakeholders can create a more predictable legal landscape that fosters confidence in digital transactions.

Organizations such as the International Organization for Standardization (ISO), through its technical committee ISO/TC 307 on blockchain and distributed ledger technologies, are already developing such standards, which could play a crucial role in shaping the future of digital contracts.
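A small example of why a shared protocol matters for interoperability: two systems holding identical contract terms can still disagree on the contract's fingerprint if they serialize it differently. The Python sketch below assumes a hypothetical shared rule (sorted keys, no extra whitespace, UTF-8) and hashes the result. It is a simplification for illustration, not a complete implementation of a real canonicalization standard such as RFC 8785 (JSON Canonicalization Scheme); the function names and contract terms are hypothetical.

```python
import hashlib
import json


def canonical_bytes(terms: dict) -> bytes:
    # Simplified shared rule: sorted keys, no insignificant whitespace, UTF-8 output.
    # NOT a full RFC 8785 implementation; for example, number formatting is not normalized.
    return json.dumps(terms, sort_keys=True, separators=(",", ":"), ensure_ascii=False).encode("utf-8")


def contract_fingerprint(terms: dict) -> str:
    """SHA-256 digest of the canonical form, usable as a cross-system identifier."""
    return hashlib.sha256(canonical_bytes(terms)).hexdigest()


# The same hypothetical terms, built with keys in a different order by two systems.
system_a = {"price": 300000, "currency": "EUR", "parties": ["alice", "bob"]}
system_b = {"parties": ["alice", "bob"], "currency": "EUR", "price": 300000}

print(json.dumps(system_a) == json.dumps(system_b))                      # False
print(contract_fingerprint(system_a) == contract_fingerprint(system_b))  # True
```

Without the agreed rule, the naive serializations differ and so would their hashes, even though the terms are the same.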

Addressing Bias and Fairness in AI Algorithms

Understanding the Sources of Bias

Bias can arise from various sources, including skewed training data and flawed algorithmic design. To combat bias effectively, organizations must adopt a multifaceted approach that includes diverse data collection practices and rigorous algorithmic testing. For instance, when developing an AI system for credit scoring based on historical data, it is essential to ensure that the dataset reflects a broad spectrum of demographics to avoid perpetuating existing inequalities.
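A first, simple check on a training set like the one described above is to compare each group's share of the data with its share of the population the system will serve. The Python sketch below assumes a reference distribution is available (here, made-up age-band shares) and uses an illustrative tolerance of 5 percentage points; the function name is hypothetical. Adequate representation is necessary but not sufficient, since historical labels can still encode past discrimination.

```python
from collections import Counter


def representation_gaps(
    train_groups: list[str], reference_shares: dict[str, float], tolerance: float = 0.05
) -> dict[str, float]:
    """Groups whose share of the training data falls short of a reference share by more than `tolerance`.

    Returns a mapping from group to shortfall (reference share minus observed share).
    """
    if not train_groups:
        raise ValueError("training data is empty")
    counts = Counter(train_groups)
    n = len(train_groups)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / n  # Counter returns 0 for groups absent from the data
        shortfall = expected - observed
        if shortfall > tolerance:
            gaps[group] = shortfall
    return gaps


# Hypothetical age bands in a credit-scoring training set vs. the population it will serve.
train = ["18-34"] * 10 + ["35-54"] * 60 + ["55+"] * 30
population = {"18-34": 0.30, "35-54": 0.40, "55+": 0.30}
print(representation_gaps(train, population))  # 18-34 is short by about 0.20
```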

Techniques for Mitigating Bias

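Adversarial debiasing, for example, trains the main model alongside a second "adversary" model that tries to predict a protected attribute (such as gender) from the main model's predictions; the main model is penalized whenever the adversary succeeds, which pushes it toward predictions that carry less information about that attribute. Techniques like this help identify and mitigate bias before a system is deployed in real-world applications, so that organizations can build more reliable and fair AI systems that do not perpetuate discriminatory outcomes.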
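Adversarial debiasing needs a training loop with two competing models, which is too long for a short example. As a simpler illustration of the same goal, the Python sketch below uses a different, pre-processing technique called reweighing (described by Kamiran and Calders): each training example receives the weight P(group) * P(label) / P(group, label), so that in the weighted data the outcome label is statistically independent of group membership. It does not remove proxy features that correlate with group. The function name, groups, and labels are hypothetical; the weights would be passed to any training routine that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups: list[str], labels: list[int]) -> dict[tuple[str, int], float]:
    """Hypothetical helper: reweighing weight for each (group, label) cell."""
    if not groups or len(groups) != len(labels):
        raise ValueError("groups and labels must be non-empty and of equal length")
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Expected frequency under independence divided by observed frequency:
    # under-represented (group, label) cells get weights above 1, over-represented ones below 1.
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in joint_counts.items()
    }


# Hypothetical data: group A has a 2/3 positive rate, group B only 1/4.
groups = ["A"] * 60 + ["B"] * 40
labels = [1] * 40 + [0] * 20 + [1] * 10 + [0] * 30
weights = reweighing_weights(groups, labels)
sample_weights = [weights[(g, y)] for g, y in zip(groups, labels)]

for group in ("A", "B"):
    pos = sum(w for g, y, w in zip(groups, labels, sample_weights) if g == group and y == 1)
    total = sum(w for g, _, w in zip(groups, labels, sample_weights) if g == group)
    print(group, round(pos / total, 2))  # weighted positive rate: 0.5 for both groups
```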

The Benefits of Fair AI Development

Prioritizing fairness in AI development strengthens an organization's ethical standing and also improves the reliability and acceptance of its digital contract systems: when the AI behind a contract does not discriminate, the contract is enforced fairly, and the parties have more reason to trust it.

The Role of Government Regulations in Ensuring AI Accountability

Government regulations play a crucial role in shaping the landscape of AI accountability and ensuring that digital contracts operate within a framework that protects consumers and promotes fair practices. As AI technologies continue to evolve at a rapid pace, regulatory bodies face the challenge of keeping up with innovations while safeguarding public interests. This necessitates a proactive approach to regulation that balances innovation with accountability.

Regulatory frameworks can establish clear guidelines for data usage, algorithmic transparency, and liability in cases involving AI-driven decisions. For example, the European Union's General Data Protection Regulation (GDPR) set a precedent by emphasizing data protection rights: Article 22 restricts decisions based solely on automated processing that produce legal or similarly significant effects on individuals, and Articles 13 to 15 require organizations to provide meaningful information about the logic involved in such decisions. Such regulations not only protect individuals from potential harms associated with biased or opaque AI systems but also encourage organizations to adopt best practices in developing and deploying them.

As governments worldwide grapple with these issues, collaborative efforts between regulators, industry stakeholders, and civil society will be essential to create effective policies that promote responsible AI use.

Collaborative Efforts in Shaping the Future of Digital Contracts and AI Accountability

The future of digital contracts and AI accountability hinges on collaborative efforts among various stakeholders—including technology developers, legal experts, policymakers, and civil society organizations. By fostering dialogue between these groups, it becomes possible to address complex challenges related to accountability while harnessing the benefits of technological advancements. Collaborative initiatives can lead to the development of best practices and guidelines that promote ethical AI use within digital contract frameworks.

For instance, industry consortia can bring together experts from different sectors to share insights on effective strategies for implementing transparent and accountable AI systems. Additionally, partnerships between academia and industry can facilitate research into innovative solutions for bias mitigation and algorithmic fairness. By pooling resources and expertise, stakeholders can work towards creating an ecosystem where digital contracts are not only efficient but also equitable and trustworthy.

Furthermore, public engagement is essential in shaping policies that govern digital contracts and AI accountability. By involving citizens in discussions about their rights and expectations regarding technology use, policymakers can develop regulations that reflect societal values and priorities. This inclusive approach ensures that technological advancements serve the broader public interest while fostering trust between individuals and organizations operating within this new digital landscape.

In conclusion, digital contracts and AI accountability sit at the intersection of technology, law, ethics, and society. Through collaborative efforts to establish standards, enhance transparency, address bias, and implement effective regulation, stakeholders can build a future in which digital contracts operate seamlessly alongside accountable AI systems, ultimately benefiting everyone involved.


FAQs

What are digital contracts?

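Digital contracts are agreements that are created, signed, and stored electronically. Smart contracts are their most automated form: self-executing programs in which the terms agreed between the parties are written directly into code and carried out automatically when specified conditions are met. They are typically stored and executed on a blockchain, which makes their records transparent and tamper-evident.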

What is AI accountability in digital contracts?

AI accountability in digital contracts refers to responsibility for, and transparency about, the use of artificial intelligence in creating, executing, or managing a contract. This includes ensuring that AI systems are used ethically, fairly, and in compliance with regulations.

How can accountability be ensured in the use of AI in digital contracts?

Accountability in the use of AI in digital contracts can be ensured through the implementation of clear guidelines, regulations, and ethical frameworks. Additionally, transparency in the decision-making process of AI systems and the ability to audit and explain their actions are crucial for accountability.

What are the challenges in ensuring accountability in AI use in digital contracts?

Challenges in ensuring accountability in AI use in digital contracts include the complexity of AI systems, the lack of transparency in their decision-making processes, and the potential for bias and discrimination. Additionally, the rapid advancement of AI technology poses challenges in keeping regulations and guidelines up to date.

What is the future of digital contracts in ensuring AI accountability?

The future of digital contracts in ensuring AI accountability lies in the development of robust regulatory frameworks, the advancement of AI ethics and transparency, and the integration of technologies such as explainable AI and AI auditing tools. Collaboration between industry, government, and academia will also play a crucial role in shaping the future of AI accountability in digital contracts.