Transparency in artificial intelligence (AI) development is a cornerstone of trust and accountability. As AI systems increasingly influence critical areas of society, from healthcare to criminal justice, understanding how these systems operate becomes paramount. Transparency allows stakeholders, including developers, users, and affected communities, to understand how AI systems reach their decisions.

This understanding is essential for identifying potential biases, ensuring compliance with ethical standards, and fostering public trust. When AI systems are opaque, they can perpetuate existing inequalities and create new forms of discrimination, with significant consequences for society.

Transparency is also crucial for regulatory compliance and ethical governance. As governments and organizations worldwide grapple with the implications of AI technologies, there is a growing demand for clear guidelines and standards.

For instance, the European Union’s AI Act, proposed in 2021 and adopted in 2024, includes transparency requirements intended to mitigate the risks of high-stakes applications.

By fostering an environment where AI development is open and accountable, stakeholders can work collaboratively to address ethical concerns and promote responsible innovation.

Key Takeaways

- Transparency in AI development is crucial for building trust and ensuring ethical and fair practices.

- Open-source initiatives play a key role in promoting transparency by making AI development accessible and open to peer review.

- Collaboration and peer review are facilitated through open-source initiatives, allowing for diverse perspectives and expertise to contribute to AI development.

- Open-source initiatives help ensure ethical and fair AI practices by promoting accountability and addressing bias in AI development.

- Access to AI tools and resources is democratized through open-source initiatives, allowing for broader participation and innovation in AI development.

The Role of Open-Source Initiatives in Promoting Transparency

Open-source initiatives play a pivotal role in enhancing transparency within AI development. By making source code publicly available, these initiatives allow anyone to inspect, modify, and improve upon existing algorithms and models. This openness not only demystifies the inner workings of AI systems but also encourages a culture of scrutiny and peer review.

For example, frameworks like TensorFlow and PyTorch have become foundational tools in the AI community. Because their code is public, researchers and developers can build on each other’s work while seeing exactly how the underlying operations are implemented.

Open-source initiatives also foster a collaborative environment where diverse perspectives can converge. When developers from various backgrounds contribute to a project, they bring unique insights that can help identify potential flaws or biases in the algorithms.

This collective intelligence is invaluable in creating more robust and equitable AI systems. The transparency afforded by open-source projects also empowers users to make informed decisions about the technologies they adopt, as they can evaluate the underlying code and methodologies rather than relying solely on vendor claims.

How Open-Source Initiatives Facilitate Collaboration and Peer Review

Collaboration is at the heart of open-source initiatives, enabling developers from around the globe to work together on AI projects. This collaborative spirit not only accelerates innovation but also enhances the quality of the resulting technologies. When multiple contributors engage in a project, they bring diverse skill sets and experiences that can lead to more comprehensive solutions.

For instance, the Hugging Face Transformers library has attracted contributions from researchers and practitioners worldwide and gives users a common interface to a large collection of pre-trained models for natural language processing and other tasks.

Peer review is another critical aspect of open-source development. In proprietary software development, source code is typically hidden from public scrutiny, even after a product is released.

Open-source projects, in contrast, invite continuous evaluation from the community. This process can surface bugs, security vulnerabilities, and ethical concerns, often before they cause harm. Because contributors are free to challenge each other’s assumptions and methodologies, open development can lead to more rigorous testing and validation of AI systems.

As a result, open-source initiatives can produce higher-quality software while cultivating a culture of accountability among developers.

Ensuring Ethical and Fair AI Practices through Open-Source Initiatives

Ethical considerations are paramount in AI development, particularly as these technologies increasingly affect people’s lives. Open-source initiatives provide a framework for embedding ethical practices in the development process. When algorithms are open to public scrutiny, developers and the wider community can apply ethical guidelines and best practices collaboratively.

For example, Fairness Indicators, an open-source library from the TensorFlow ecosystem, lets developers evaluate their models’ performance across different demographic groups, helping them work toward equitable outcomes in AI applications.

Open-source initiatives can also serve as platforms for establishing ethical standards within the AI community. By fostering discussions around responsible AI practices, they can help shape norms that prioritize fairness, accountability, and transparency.

The Algorithmic Justice League, an advocacy organization, works toward similar goals, using research and public engagement to push for equitable AI systems. Through community engagement and collaboration, such initiatives can drive meaningful change in how AI technologies are developed and deployed.
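Tools like Fairness Indicators work by computing a model’s evaluation metrics separately for each group (a "slice"), so that gaps between groups become visible. The minimal sketch below illustrates that idea in plain Python; it does not use the Fairness Indicators API, and it assumes binary labels and predictions (0 or 1) plus a hypothetical group label for each example.

```python
from collections import defaultdict


def sliced_rates(y_true, y_pred, groups):
    """Return selection rate, true positive rate, and false positive rate per group.

    Assumes y_true and y_pred contain binary values (0 or 1) and that
    groups holds one (hypothetical) group label per example.
    """
    # Per-group counters: examples, predicted positives, actual positives,
    # true positives, actual negatives, false positives.
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred  # predicted 1 on an actual positive
        else:
            c["neg"] += 1
            c["fp"] += pred  # predicted 1 on an actual negative

    report = {}
    for group, c in counts.items():
        report[group] = {
            "selection_rate": c["pred_pos"] / c["n"],
            # Rates are undefined (NaN) when a group has no positives or no negatives.
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
    return report


if __name__ == "__main__":
    # Toy, made-up data: two groups of four examples each.
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    for group, rates in sorted(sliced_rates(y_true, y_pred, groups).items()):
        print(group, rates)
```

On this toy data, group "a" has a true positive rate of about 0.67 while group "b" has a true positive rate of 0.0, the kind of gap that a single overall accuracy number would hide and that per-group evaluation is meant to expose.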

Open-Source Initiatives and Access to AI Tools and Resources

One of the most significant advantages of open-source initiatives is their ability to democratize access to AI tools and resources. Traditionally, access to advanced AI technologies has been limited to well-funded organizations or institutions with substantial resources. Open-source projects break down these barriers by providing free access to powerful tools that anyone can use.

This democratization fosters innovation by enabling individuals and smaller organizations to experiment with AI without incurring prohibitive costs. For instance, OpenAI’s Gym (now maintained by the Farama Foundation as Gymnasium) is an open-source toolkit for developing and comparing reinforcement learning algorithms. By making such resources available to a broader audience, open-source initiatives empower aspiring researchers and developers to contribute to the field without extensive financial backing.

This increased accessibility not only accelerates technological advancement but also encourages diverse participation in AI development, leading to more inclusive solutions that reflect a wider range of perspectives.

Addressing Bias and Accountability in AI Development through Open-Source Initiatives

Bias in AI systems is a pressing concern that can have far-reaching consequences for individuals and communities. Open-source initiatives are well positioned to address this issue by promoting transparency and accountability throughout the development process. By allowing external stakeholders to examine algorithms for potential biases, these initiatives create opportunities for corrective action before deployment.

For example, the IBM AI Fairness 360 toolkit provides developers with metrics and algorithms to detect and mitigate bias in machine learning models, fostering more equitable outcomes.

Open-source projects also encourage accountability by letting users track changes to algorithms over time. Version control records what changed, when, by whom, and (through commit messages) why, so stakeholders can trace how a model evolved and identify modifications that may have introduced bias or ethical concerns.

The ability to audit code not only enhances trust among users but also holds developers accountable for their work. As a result, open-source initiatives can contribute significantly to fairer AI systems that prioritize ethical considerations.
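Bias-detection toolkits such as AI Fairness 360 report group-fairness metrics; two common ones are statistical parity difference (the gap in favorable-outcome rates between an unprivileged and a privileged group) and disparate impact (the ratio of those rates). The plain-Python sketch below computes both from a model’s binary predictions. It is an illustration, not the AI Fairness 360 API, and the group names, the toy data, and the 0.8 threshold (the widely cited "four-fifths rule") are assumptions chosen for the example.

```python
def selection_rate(y_pred, groups, group):
    """Fraction of examples in `group` that received the favorable outcome (1)."""
    preds = [pred for pred, g in zip(y_pred, groups) if g == group]
    if not preds:
        raise ValueError(f"no examples for group {group!r}")
    return sum(preds) / len(preds)


def group_fairness(y_pred, groups, unprivileged, privileged):
    """Statistical parity difference and disparate impact for two groups."""
    rate_u = selection_rate(y_pred, groups, unprivileged)
    rate_p = selection_rate(y_pred, groups, privileged)
    return {
        # 0.0 means both groups receive favorable outcomes at the same rate.
        "statistical_parity_difference": rate_u - rate_p,
        # 1.0 means parity; undefined (NaN) if the privileged group's rate is 0.
        "disparate_impact": rate_u / rate_p if rate_p > 0 else float("nan"),
    }


def flags_disparate_impact(metrics, threshold=0.8):
    """True if disparate impact falls below the (assumed) four-fifths threshold."""
    # NaN compares as False, so an undefined ratio is not flagged here.
    return metrics["disparate_impact"] < threshold


if __name__ == "__main__":
    # Made-up predictions for a hypothetical privileged group "p" and unprivileged group "u".
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["p", "p", "p", "p", "u", "u", "u", "u"]
    metrics = group_fairness(y_pred, groups, unprivileged="u", privileged="p")
    print(metrics, flags_disparate_impact(metrics))
```

Here the unprivileged group receives favorable predictions at a rate of 0.25 versus 0.75 for the privileged group, giving a statistical parity difference of -0.5 and a disparate impact of about 0.33, which the check flags. Because these metrics need only predictions and group labels, anyone with access to an open model and suitable data can run this kind of audit independently.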

The Impact of Open-Source Initiatives on Democratizing AI Development

The democratization of AI development through open-source initiatives has profound implications for innovation and societal progress. By lowering barriers to entry, these initiatives enable a diverse range of contributors to participate in shaping the future of AI technology. This inclusivity fosters creativity and innovation as individuals from various backgrounds bring unique perspectives and ideas to the table.

For instance, grassroots projects like Fast.ai have helped many people, often without formal training in machine learning, develop their skills and contribute meaningfully to the field.

Democratizing access to AI tools also encourages experimentation and exploration among non-traditional stakeholders. Community-driven projects often emerge from unexpected places, leading to applications that established organizations may not have considered.

This grassroots approach can result in solutions tailored to specific community needs or challenges that larger entities may overlook. As such, open-source initiatives play a crucial role in ensuring that the benefits of AI technology are distributed more equitably across society.

Overcoming Challenges and Promoting Trust in AI through Open-Source Initiatives

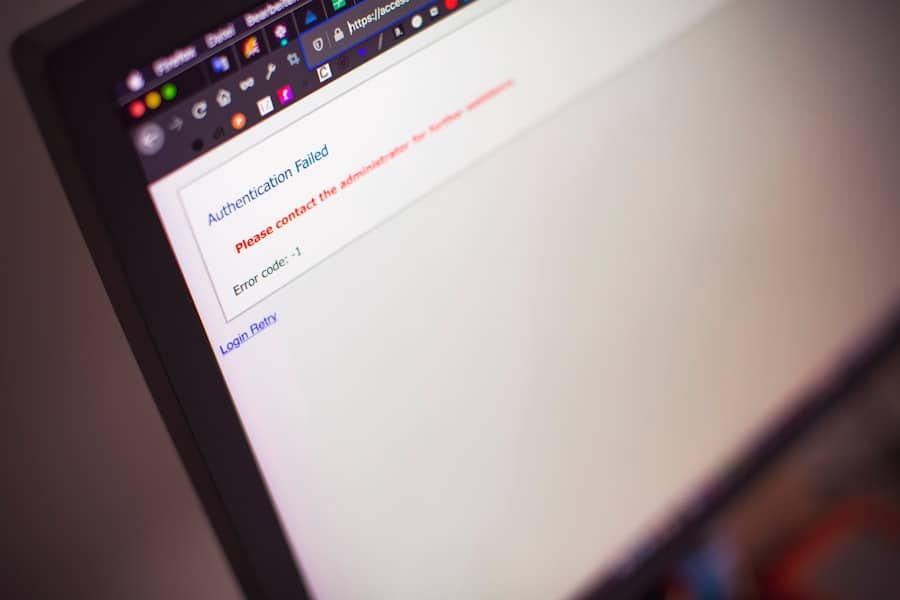

While open-source initiatives offer numerous benefits for promoting transparency and collaboration in AI development, they are not without challenges. One significant hurdle is ensuring that contributors adhere to ethical standards and best practices throughout the development process. As projects grow in complexity and scale, maintaining quality control becomes increasingly difficult.

To address this issue, many open-source communities implement governance structures that establish guidelines for contributions and promote responsible behavior among participants.

Building trust in open-source AI systems also requires ongoing engagement with users and stakeholders. Developers must actively communicate their methodologies, decisions, and updates to foster transparency and accountability.

Documents like the OpenAI Charter illustrate this kind of commitment by publicly stating the principles that guide an organization’s research and development. By prioritizing communication and collaboration with the broader community, open-source initiatives can cultivate trust among users while addressing concerns related to bias, ethics, and accountability.

In conclusion, open-source initiatives are a powerful force for promoting transparency in AI development while addressing critical ethical concerns.

By fostering collaboration, democratizing access to resources, and encouraging accountability among developers, these initiatives pave the way for more equitable and responsible AI technologies that benefit society as a whole.


FAQs

What is open-source software?

Open-source software is a type of computer software in which the source code is made available to the public for use, modification, and distribution. This allows for collaboration and transparency in the development process.

What are open-source initiatives in AI development?

Open-source initiatives in AI development involve making the source code, algorithms, and models for AI projects publicly available. This allows for transparency, collaboration, and community involvement in the development of AI technologies.

How do open-source initiatives ensure transparency in AI development?

Open-source initiatives support transparency in AI development by allowing anyone to access and review the source code, algorithms, and models used in AI projects. This makes it easier to identify and address biases, errors, or ethical concerns during development.

What are the benefits of open-source initiatives in AI development?

Benefits of open-source initiatives in AI development include increased transparency, collaboration, and community involvement. They also enable the sharing of knowledge and resources, which can accelerate the development of AI technologies and make them accessible to a wider audience.

What are some examples of open-source initiatives in AI development?

Examples of open-source initiatives in AI development include TensorFlow, PyTorch, Hugging Face Transformers, and OpenAI’s Gym. These projects make their source code publicly available, allowing for transparency and collaboration in the development of AI technologies.