Neuromorphic chips are processors designed to emulate the neural structure and functioning of the human brain. Unlike conventional computing architectures, which separate memory from computation and execute instructions largely in sequence, neuromorphic systems apply principles from neurobiology to create more efficient and adaptive computational models. This approach aims to bridge the gap between biological intelligence and artificial intelligence, offering a way of processing information that is more closely aligned with how living organisms operate.

The development of neuromorphic chips is not merely an academic exercise; it has profound implications for various fields, including robotics, autonomous systems, and cognitive computing. By mimicking the brain’s architecture, these chips can process streams of sensory data in real time while consuming significantly less power than conventional processors running comparable workloads.

This efficiency is particularly crucial in an era where energy consumption is a growing concern in technology. As researchers continue to explore the potential of neuromorphic computing, it becomes increasingly clear that these chips could revolutionize how machines learn, adapt, and interact with their environments.

Key Takeaways

- Neuromorphic chips are a new type of computer chip designed to mimic the functionality of the human brain.

- The human brain functions through the use of neurons, which communicate with each other through electrical signals.

- Neuromorphic chips mimic the functionality of the human brain by using artificial neurons and synapses to process information in a similar way.

- Neuromorphic chips have a wide range of potential applications, including in the fields of artificial intelligence, robotics, and healthcare.

- While neuromorphic chips offer advantages such as low power consumption and high processing speed, they also have limitations in terms of scalability and programming complexity.

The Basics of Human Brain Functionality

To understand how neuromorphic chips function, it is essential to first grasp the basics of human brain functionality. The human brain is an incredibly complex organ composed of approximately 86 billion neurons, each connected to thousands of other neurons through synapses. This intricate network allows for the transmission of electrical signals and chemical messages, facilitating communication between different parts of the brain and enabling various cognitive functions such as perception, memory, and decision-making.

The brain operates through a combination of parallel processing and distributed computing, allowing it to handle multiple tasks simultaneously and efficiently. Neurons communicate through action potentials, which are rapid electrical impulses that travel along their axons. When a neuron receives sufficient stimulation from other neurons, it generates an action potential that propagates down its axon to transmit information to other neurons.
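The threshold behavior described above is often abstracted as a leaky integrate-and-fire (LIF) neuron. The following is a minimal sketch of that idea, not a biophysical model: the function name `simulate_lif` and all parameter values (`tau`, `v_threshold`, the input currents) are illustrative assumptions chosen so the behavior is easy to see.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron.

    input_current: one input value per time step (arbitrary units).
    Returns the list of step indices at which the neuron fired.
    All parameters are illustrative, not measured biological values.
    """
    v = v_rest
    spike_steps = []
    for step, current in enumerate(input_current):
        # The potential leaks back toward rest while integrating the input.
        v += dt * (-(v - v_rest) / tau + current)
        if v >= v_threshold:
            # Sufficient stimulation: emit a spike and reset the potential.
            spike_steps.append(step)
            v = v_reset
    return spike_steps


weak = simulate_lif([0.05] * 100)    # input too weak to reach threshold
strong = simulate_lif([0.3] * 100)   # input strong enough to fire repeatedly

print("weak input spikes:", len(weak))
print("strong input spikes:", len(strong), "first at step", strong[0])
```

With the weak input the potential settles below the threshold and the neuron stays silent; with the strong input it fires at regular intervals. The leak term is what makes the neuron forget old input, so only stimulation that arrives close together in time pushes it over the threshold.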

This process is influenced by synaptic strength, which can change over time through mechanisms such as long-term potentiation and long-term depression.
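One common way to model changing synaptic strength in spiking networks is spike-timing-dependent plasticity (STDP). The article does not name this rule; it is used here only as a widely studied abstraction of potentiation and depression. The function names and the constants `a_plus`, `a_minus`, and `tau_ms` are illustrative assumptions.

```python
import math


def stdp_weight_change(dt_ms, a_plus=0.05, a_minus=0.055, tau_ms=20.0):
    """Weight change for one pre/post spike pair.

    dt_ms = t_post - t_pre.
    Pre before post (dt_ms > 0) strengthens the synapse (potentiation);
    post before pre (dt_ms < 0) weakens it (depression).
    The constants are illustrative assumptions.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_ms)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Apply the pair-based rule over all spike pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_weight_change(t_post - t_pre)
    return min(w_max, max(w_min, weight))


strengthened = apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0])
weakened = apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0])
print(strengthened > 0.5, weakened < 0.5)
```

The size of the change shrinks exponentially as the two spikes move further apart in time, so only closely timed activity reshapes the connection.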

The dynamic nature of neural connections is a key feature that distinguishes biological systems from traditional computing architectures.

How Neuromorphic Chips Mimic Human Brain Functionality

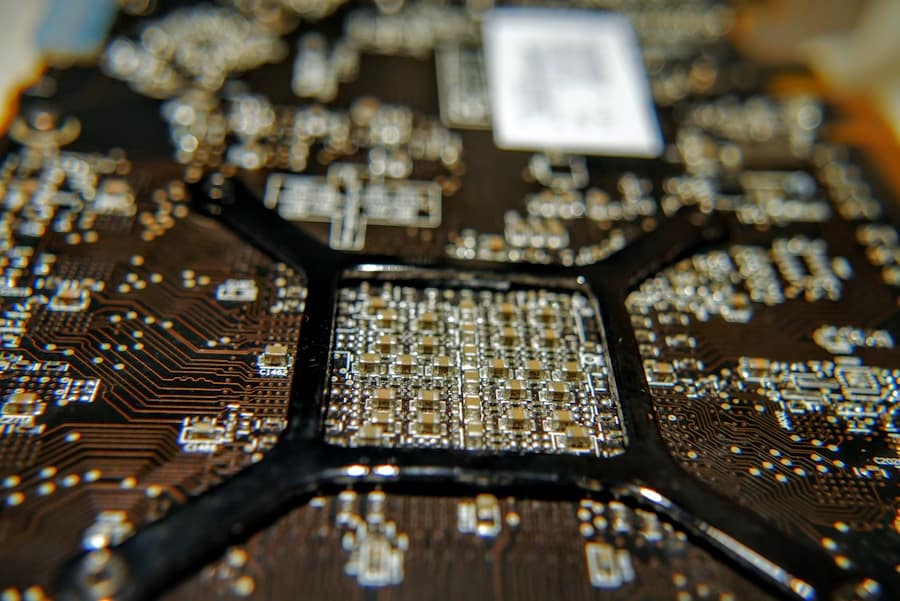

Neuromorphic chips are designed to replicate the fundamental principles of neural processing found in the human brain. They achieve this with specialized circuits that mimic the behavior of neurons and synapses. Some designs use analog circuits whose voltages and currents represent a continuum of values, much like a neuron’s membrane potential as it integrates incoming signals; others, including IBM’s TrueNorth, implement their neurons in digital logic. In either case, the neurons communicate through discrete spikes, as biological neurons do through action potentials.

One notable example of neuromorphic architecture is IBM’s TrueNorth chip, which contains one million programmable neurons and 256 million configurable synapses. TrueNorth operates on a spiking neural network model, where information is carried by the occurrence and timing of spikes rather than by their amplitude.
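To make the idea of encoding information in spike timing concrete, here is a minimal sketch of latency (time-to-first-spike) coding, in which a stronger input produces an earlier spike. This is a generic textbook scheme and is not claimed to be how TrueNorth encodes data; the function name `latency_encode` and the 100 ms window are assumptions for illustration.

```python
def latency_encode(intensities, t_max_ms=100.0):
    """Map intensities in [0, 1] to spike times in milliseconds.

    A stronger input spikes earlier; an input of zero never spikes (None).
    Every spike has the same amplitude, so only its timing carries
    information. The linear mapping is an illustrative assumption.
    """
    spike_times = []
    for x in intensities:
        if not 0.0 <= x <= 1.0:
            raise ValueError("intensity must be in [0, 1]")
        spike_times.append(None if x == 0.0 else t_max_ms * (1.0 - x))
    return spike_times


print(latency_encode([1.0, 0.5, 0.0]))  # [0.0, 50.0, None]
```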

This approach enables the chip to perform tasks such as image recognition and pattern detection with remarkable efficiency. Because its processing is event-driven, TrueNorth can remain in a low-power state until it receives input, significantly reducing energy consumption compared to clock-driven processors, which expend energy on every cycle whether or not new input has arrived.
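Event-driven processing can be sketched in software with a priority queue of spikes: work is done only when a spike arrives, and nothing happens while the network is idle. The toy network, the function name `run_event_driven`, and the fixed one-step delay are assumptions for illustration; for brevity this sketch omits the leak term, and it does not model any real chip.

```python
import heapq


def run_event_driven(synapses, input_spikes, threshold=1.0, delay=1):
    """Propagate spikes through a network, doing work only when events occur.

    synapses: dict mapping a neuron id to a list of (target_id, weight).
    input_spikes: list of (time, neuron_id) external input events.
    Returns (fired, updates): the spikes in processing order and the
    number of synaptic updates actually performed.
    """
    potentials = {}
    updates = 0
    fired = []
    queue = list(input_spikes)
    heapq.heapify(queue)  # earliest event is processed first
    while queue:
        time, source = heapq.heappop(queue)
        fired.append((time, source))
        for target, weight in synapses.get(source, []):
            updates += 1
            potentials[target] = potentials.get(target, 0.0) + weight
            if potentials[target] >= threshold:
                # The target crosses its threshold: reset it and schedule its spike.
                potentials[target] = 0.0
                heapq.heappush(queue, (time + delay, target))
    return fired, updates


# Hypothetical four-neuron network: "a" and "b" together drive "c", which drives "d".
network = {"a": [("c", 0.6)], "b": [("c", 0.6)], "c": [("d", 1.0)]}

fired, updates = run_event_driven(network, [(0, "a"), (0, "b")])
print(fired)    # [(0, 'a'), (0, 'b'), (1, 'c'), (2, 'd')]
print(updates)  # 3

idle_fired, idle_updates = run_event_driven(network, [])
print(idle_updates)  # 0
```

With no input the loop body never runs, which is the software analogue of the chip staying in a low-power state. A clock-driven simulation of the same network would instead update every neuron on every tick, regardless of activity.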

Applications of Neuromorphic Chips

The applications of neuromorphic chips are vast and varied, spanning numerous domains where real-time processing and adaptability are crucial. In robotics, for instance, neuromorphic chips can enhance sensory perception by enabling robots to process visual and auditory information more like humans do. This capability allows robots to navigate complex environments, recognize objects, and respond to dynamic stimuli with greater agility and accuracy. For example, researchers have developed robotic systems equipped with neuromorphic vision sensors that can detect motion and recognize faces in real time, paving the way for more intuitive human-robot interactions.

In the realm of healthcare, neuromorphic chips hold promise for advancing medical diagnostics and personalized treatment plans. By analyzing large datasets from medical imaging or genomic sequencing, these chips could identify patterns that may elude traditional algorithms. For instance, neuromorphic systems could assist in early cancer detection by recognizing subtle changes in imaging data that indicate tumor growth. Additionally, their ability to learn continuously from new data could support adaptive treatment strategies tailored to individual patients’ needs.

Advantages and Limitations of Neuromorphic Chips

The advantages of neuromorphic chips are manifold, particularly in terms of energy efficiency and processing speed. By mimicking the brain’s architecture, these chips can perform complex computations with significantly lower power consumption than conventional processors. This efficiency is especially beneficial for mobile devices and IoT applications where battery life is paramount. Furthermore, neuromorphic chips excel in tasks requiring real-time processing and adaptability, making them ideal for applications such as autonomous vehicles and smart sensors.

However, despite their potential, neuromorphic chips also face limitations that must be addressed for widespread adoption. One significant challenge is the complexity of programming these systems. Traditional programming paradigms may not be directly applicable to neuromorphic architectures, necessitating the development of new algorithms and tools tailored for spiking neural networks. Additionally, while neuromorphic chips excel at specific tasks, they may struggle with general-purpose computing tasks that conventional processors handle efficiently. This limitation raises questions about their integration into existing computing ecosystems and whether they can complement or replace traditional architectures.

Current Developments and Future Prospects

Advancements in Neuromorphic Technology

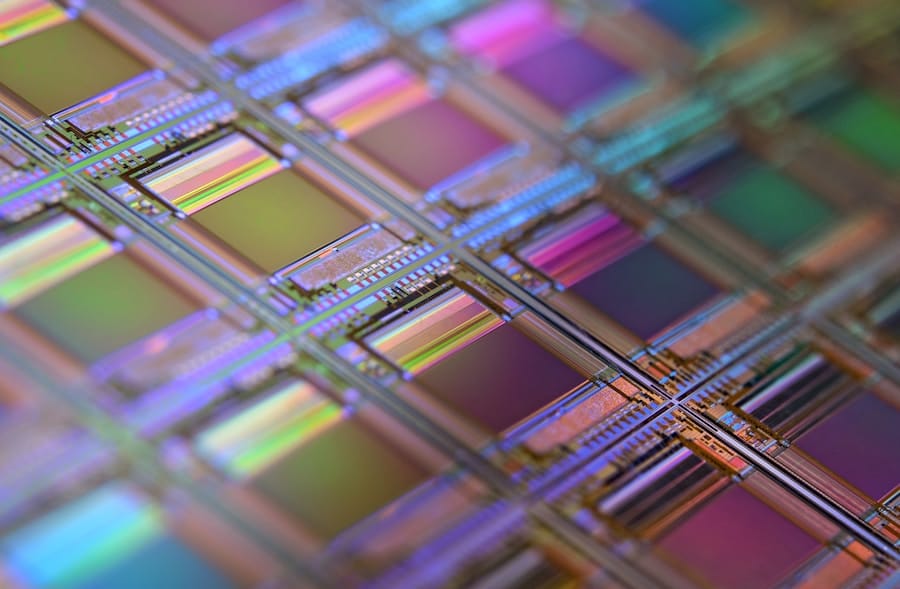

Companies like Intel are investing heavily in neuromorphic technology with their Loihi chip, which features a self-learning capability that allows it to adapt its behavior based on experience without requiring extensive retraining. This innovation could lead to more autonomous systems capable of learning from their environments in real-time.

Academic Breakthroughs

Academic institutions are also making significant strides in this field. For instance, researchers at Stanford University have developed Neurogrid, a neuromorphic system that simulates about one million neurons and billions of synaptic connections in real time, providing insights into how biological systems process information. Such advancements not only enhance our understanding of neuroscience but also pave the way for creating more sophisticated artificial intelligence systems.

Future Prospects

Looking ahead, the future prospects for neuromorphic chips appear promising. As technology continues to evolve, we may witness the emergence of hybrid systems that combine traditional computing with neuromorphic architectures, leveraging the strengths of both approaches. This integration could lead to breakthroughs in various fields, including machine learning, robotics, and cognitive computing.

Ethical and Privacy Considerations

The rise of neuromorphic chips also brings forth important ethical and privacy considerations that must be addressed as these technologies become more prevalent. One primary concern revolves around data privacy and security. As neuromorphic systems become integrated into everyday applications, such as surveillance cameras or personal assistants, there is a risk that sensitive information could be misused or inadequately protected. Ensuring robust security measures will be essential to safeguard user data from potential breaches or unauthorized access.

Additionally, the deployment of neuromorphic technology raises questions about accountability and decision-making processes in autonomous systems. For instance, if a self-driving car equipped with a neuromorphic chip makes a decision that results in an accident, determining liability becomes complex. The opacity of machine learning algorithms further complicates this issue; understanding how these systems arrive at specific decisions is crucial for establishing trust between humans and machines.

Furthermore, there are broader societal implications associated with the widespread adoption of neuromorphic technology. As machines become increasingly capable of performing tasks traditionally reserved for humans, such as caregiving or decision-making, there may be significant shifts in employment patterns and social dynamics.

Addressing these challenges will require thoughtful consideration from policymakers, technologists, and ethicists alike.

Conclusion and Implications for the Future

The exploration of neuromorphic chips signifies a pivotal moment in the evolution of computing technology. By drawing inspiration from the human brain’s architecture and functionality, these chips offer unprecedented opportunities for enhancing machine learning capabilities while addressing critical challenges such as energy efficiency and real-time processing. As researchers continue to innovate within this domain, we can anticipate transformative applications across various sectors—from healthcare to robotics—ultimately reshaping our interaction with technology.

However, as we embrace these advancements, it is imperative to remain vigilant about the ethical implications they entail. Balancing innovation with responsibility will be crucial in ensuring that neuromorphic technology serves humanity positively while safeguarding individual rights and societal values. The journey toward realizing the full potential of neuromorphic chips is just beginning; navigating this path thoughtfully will determine how we harness their capabilities for future generations.

FAQs

What are neuromorphic chips?

Neuromorphic chips are a type of microprocessor that is designed to mimic the functionality of the human brain. They are built using electronic circuits that emulate the behavior of neurons and synapses, allowing them to process information in a way that is similar to the human brain.

How do neuromorphic chips mimic human brain functionality?

Neuromorphic chips mimic human brain functionality by using spiking neural networks, which are a type of artificial neural network that closely resembles the way neurons in the brain communicate with each other. This allows the chips to process information in parallel, handle complex patterns, and adapt to new information, similar to the human brain.

What are the potential applications of neuromorphic chips?

Neuromorphic chips have the potential to be used in a wide range of applications, including robotics, autonomous vehicles, medical devices, and more. They could also be used to improve the efficiency of machine learning algorithms and enable new types of computing tasks that are currently difficult for traditional processors to handle.

What are the advantages of neuromorphic chips over traditional processors?

Neuromorphic chips offer several advantages over traditional processors, including lower power consumption, faster processing of sensory data, and the ability to learn and adapt to new information in real time. They also have the potential to perform certain types of tasks, such as pattern recognition and decision making, more efficiently than traditional processors.

Are there any challenges in developing neuromorphic chips?

Developing neuromorphic chips presents several challenges, including designing electronic circuits that accurately mimic the behavior of neurons and synapses, as well as optimizing the chips for specific applications. Additionally, there are challenges in scaling up the technology to create larger and more complex neuromorphic systems.