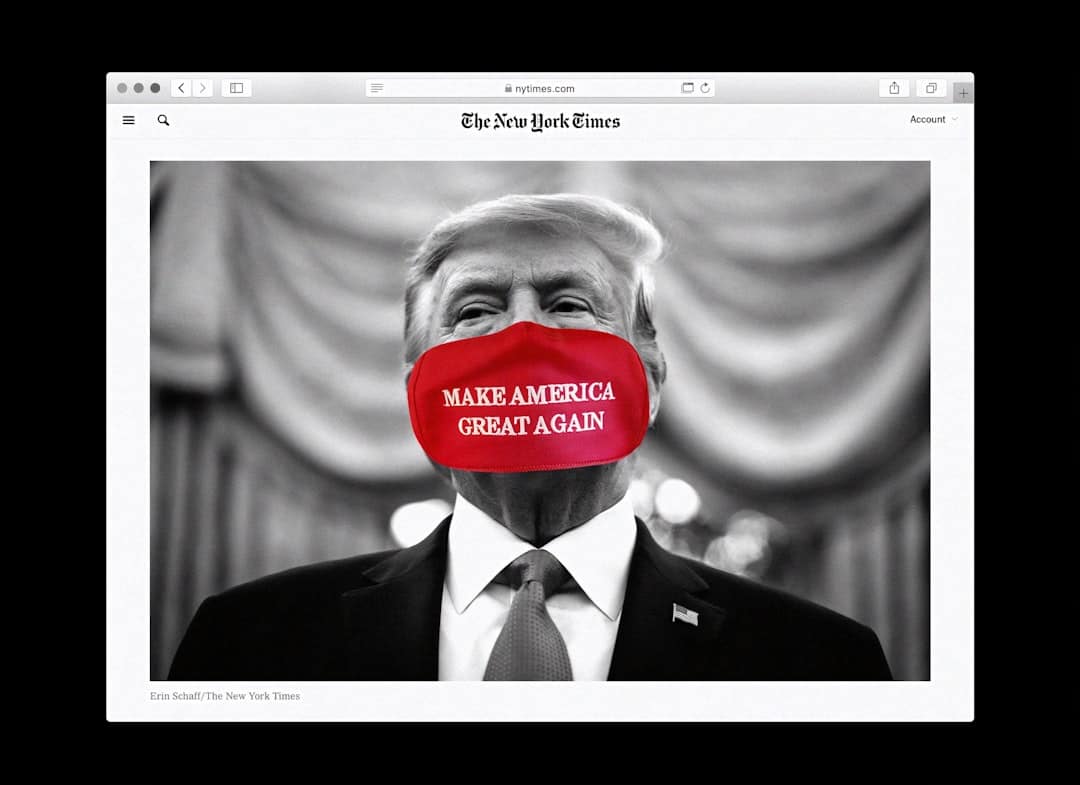

Deepfakes are a technological development that has attracted as much concern as fascination. They use artificial intelligence, specifically deep learning models such as autoencoders and generative adversarial networks (GANs), to produce audio and video that convincingly mimics real people. These models are trained on large collections of images and recordings and then generate new content that appears authentic.

Deepfakes have legitimate uses in entertainment, art, and education, but they also carry serious risks, particularly misinformation, invasion of privacy, and political manipulation. The term “deepfake” is a portmanteau of “deep learning” and “fake,” naming both the technique and its deceptive output. Although the technology can serve creative purposes, such as in film and video games, its misuse has raised alarms among governments, technology companies, and civil society.

The ability to fabricate realistic videos of people saying or doing things they never did can erode trust in media and cause serious reputational damage. As the technology improves, distinguishing authentic content from generated content becomes harder, which has prompted urgent discussion of the ethical implications and of the safeguards needed.

Key Takeaways

- Deepfakes are realistic fake videos or audio recordings created using artificial intelligence and machine learning techniques.

- Governments are responding to deepfakes through legislation, investment in detection research, and international cooperation.

- Legislative measures and regulations are being implemented to address the spread and misuse of deepfake technology.

- Investment in technology and research is crucial for developing tools to detect and prevent the creation and dissemination of deepfakes.

- International collaboration and partnerships are essential for addressing the global nature of the deepfake threat and developing coordinated responses.

Government Efforts to Combat Deepfakes

Governments around the world have recognized the dangers posed by deepfakes and are taking steps to address them. In the United States, federal agencies have begun to examine how the risks of deepfake technology might be mitigated. The Department of Homeland Security, for example, has warned that deepfakes could be used in disinformation campaigns that undermine national security or influence elections.

In response, federal research programs such as DARPA’s Media Forensics (MediFor) and Semantic Forensics (SemaFor) have funded the development of tools that can identify manipulated media and give users reliable information about the authenticity of content. State governments have acted as well: several states have enacted laws that specifically target the malicious use of deepfakes, particularly in nonconsensual pornography and election interference.

California, for example, passed two laws in 2019: AB 730, which restricts the distribution of materially deceptive audio or video of political candidates in the 60 days before an election, and AB 602, which lets people depicted without consent in sexually explicit deepfakes sue those who create or disclose them. Laws like these establish a baseline of accountability and deterrence against those who would exploit deepfakes for malicious purposes.

Legislative Measures and Regulations

The legal landscape surrounding deepfakes is evolving quickly as lawmakers grapple with the implications of the technology. Beyond California, other states have passed their own laws aimed at curbing misuse. Texas, for instance, made it a criminal offense in 2019 to create and distribute a deepfake video intended to injure a candidate or influence the outcome of an election.

Such laws deter misuse and can give victims legal recourse against those who create or distribute harmful deepfake content. At the federal level, lawmakers have discussed broader legislation; proposals have included requirements for platforms that host user-generated content to implement robust processes for verifying videos and images.

However, crafting effective legislation poses challenges; lawmakers must balance the need for regulation with the protection of free speech and innovation in technology.

Investment in Technology and Research

Recognizing these threats, both the public and the private sector are investing heavily in research and technology to detect deepfakes and mitigate their impact. Academic institutions are at the forefront, studying machine learning methods that identify manipulated media with increasing accuracy. Researchers at Stanford University, for example, have developed tools that examine video frames for signs of tampering, such as unnatural facial movements or mismatched audio and visual cues.

Technology companies are also dedicating resources to the problem. In 2019, Facebook, together with Microsoft, the Partnership on AI, and several universities, launched the Deepfake Detection Challenge, which invited researchers to build algorithms that identify deepfake videos. That same year, Google released a large dataset of deepfake videos to support detection research.

This collaborative approach not only fosters innovation but also helps establish a community of experts dedicated to addressing the challenges posed by synthetic media.

International Collaboration and Partnerships

Because the internet is global, addressing deepfakes requires international collaboration; unilateral national efforts may not be enough against a problem that crosses borders. International bodies such as INTERPOL and the European Union are developing frameworks for sharing information and best practices on deepfake detection and regulation. The EU’s AI Act, for instance, requires that deepfake content be disclosed as artificially generated or manipulated.

Another form of collaboration is partnership between governments and technology companies across national borders. Such partnerships aim to create standardized protocols for identifying and managing deepfake content while encouraging innovation in detection technology. By pooling resources and expertise, countries can strengthen their collective response to the evolving threat of synthetic media.

Public Awareness and Education Campaigns

Public awareness campaigns play a crucial role in combating the spread of deepfakes by educating individuals about the technology’s capabilities and potential risks. Governments, non-profit organizations, and tech companies are increasingly investing in initiatives designed to inform the public about how to recognize deepfake content and understand its implications. For instance, campaigns may include workshops, online resources, and social media outreach aimed at demystifying deepfakes and empowering individuals to critically evaluate the media they consume.

Educational institutions are also stepping up efforts to incorporate media literacy into their curricula. By teaching students how to discern credible sources from manipulated content, schools can equip future generations with the skills necessary to navigate an increasingly complex digital landscape. These educational initiatives not only raise awareness about deepfakes but also foster critical thinking skills that are essential in an era where misinformation can spread rapidly.

Challenges and Limitations in Tackling Deepfakes

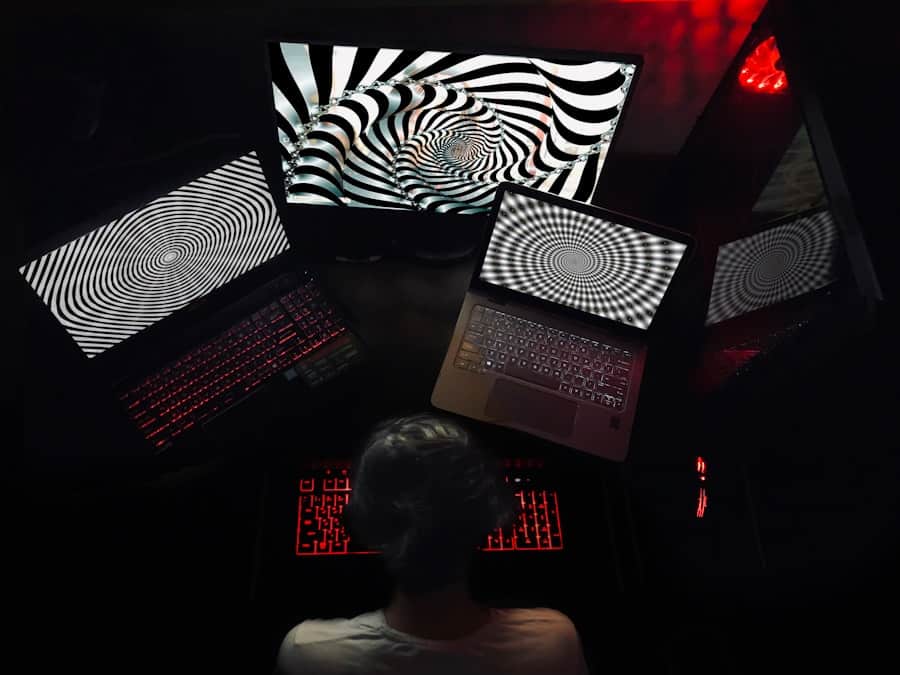

Despite these efforts, significant challenges remain. One major hurdle is the pace at which deepfake technology is advancing: as detection methods improve, so do the techniques used to create deepfakes. This cat-and-mouse dynamic makes it hard to build detection tools that stay reliable, since a detector trained on the output of one generation method may fail on content produced by a newer one.

Legislation has its own limitations. Laws must address the nuances of the technology without infringing on free speech rights, which is a delicate balance.

Future Outlook and Recommendations

Looking ahead, addressing the challenges posed by deepfakes will require a multifaceted approach involving collaboration among governments, technology companies, researchers, and civil society. Continued investment in research and development is essential for building detection technologies that can keep pace with advances in deepfake creation methods. Fostering international cooperation will also be crucial for establishing global standards for addressing synthetic media threats.

Public awareness campaigns should remain a priority as well; educating individuals about the risks associated with deepfakes will empower them to critically evaluate the content they encounter online. Finally, ongoing dialogue among stakeholders will be vital in shaping effective legislative measures that protect individuals from harm while preserving fundamental rights. By taking these steps, society can better navigate the complexities introduced by deepfake technology while harnessing its potential for positive applications.


FAQs

What are deepfakes?

Deepfakes are synthetic media created with artificial intelligence and machine learning, typically by replacing a person in an existing image or video with someone else’s likeness or by imitating a real person’s face or voice.

Why are deepfakes considered a threat?

Deepfakes are considered a threat because they have the potential to spread misinformation, manipulate public opinion, and damage the reputation of individuals and institutions.

How are governments tackling the deepfake threat?

Governments are tackling the deepfake threat through a combination of legislative measures, investment in research and development of deepfake detection technologies, and international collaboration to address the issue.

What legislative measures have been taken to address deepfakes?

Some governments have introduced or are considering legislation to criminalize the creation and distribution of deepfakes for malicious purposes. These laws aim to deter the use of deepfakes for fraudulent or deceptive activities.

What technologies are being developed to detect deepfakes?

Researchers and tech companies are developing deepfake detection tools that use machine learning algorithms to analyze videos and images for signs of manipulation. These tools aim to identify and flag potential deepfakes before they can cause harm.

How important is international collaboration in addressing the deepfake threat?

International collaboration is crucial in addressing the deepfake threat because deepfakes can easily cross borders and impact multiple countries. By working together, governments can share best practices, coordinate efforts, and develop a unified approach to combatting deepfakes.