In the digital age, the proliferation of information has transformed the way individuals consume news and engage with media. However, this vast landscape of information is not without its pitfalls. Fake news, defined as misinformation or disinformation presented as legitimate news, has emerged as a significant concern for societies worldwide.

The rise of social media platforms has facilitated the rapid spread of false narratives, often leading to real-world consequences such as political polarization, public health crises, and social unrest. The ease with which information can be shared online has blurred the lines between credible journalism and sensationalist reporting, making it increasingly challenging for individuals to discern fact from fiction. Online manipulation extends beyond mere misinformation; it encompasses a range of tactics designed to influence public opinion and behavior.

This manipulation can take various forms, including clickbait headlines, deepfake videos, and coordinated disinformation campaigns. The consequences of such tactics are profound, as they can shape perceptions, alter voting behaviors, and even incite violence. As society grapples with these challenges, the need for effective solutions to combat fake news and online manipulation has never been more pressing.

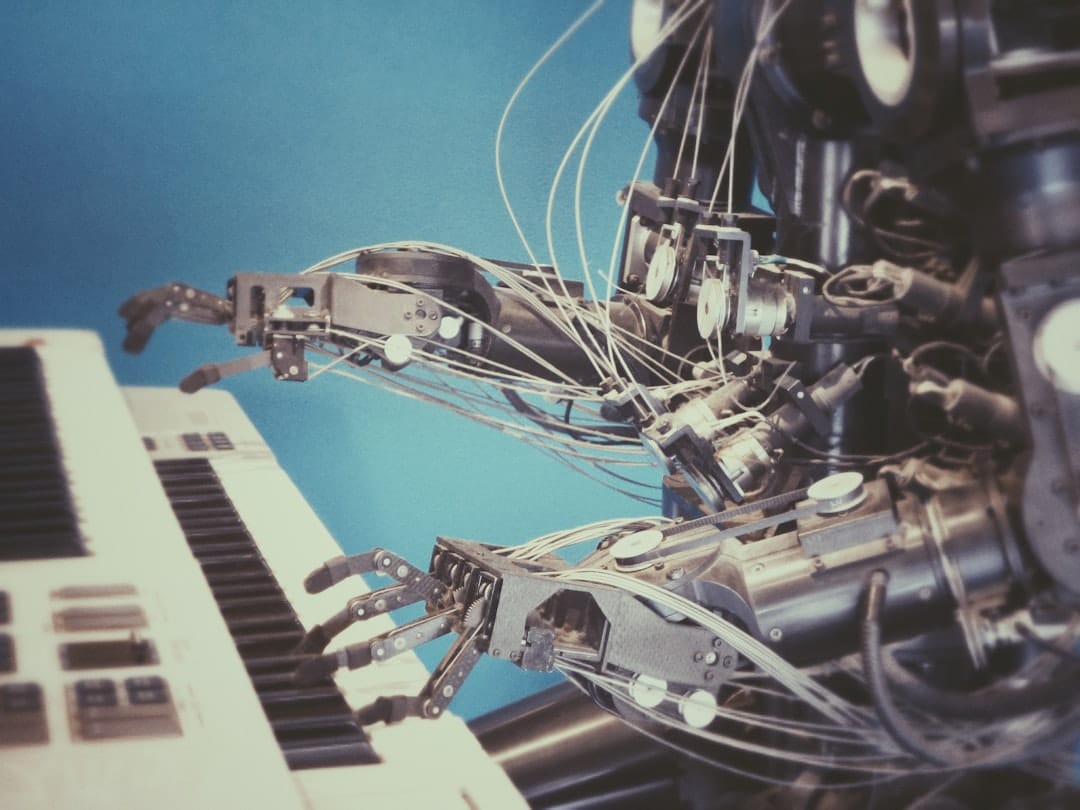

In this context, artificial intelligence (AI) has emerged as a powerful tool in the fight against misinformation, offering innovative approaches to detect and mitigate the spread of fake news.

Key Takeaways

- AI plays a crucial role in identifying and combating fake news through advanced detection techniques.

- Natural Language Processing and Machine Learning are key technologies used to analyze and detect misleading content.

- AI can recognize various manipulative tactics employed in fake news, enhancing detection accuracy.

- Despite its potential, AI faces challenges and ethical concerns in reliably detecting fake news.

- Ongoing advancements and case studies demonstrate promising future developments in AI-driven fake news detection.

The Role of Artificial Intelligence in Detecting Fake News

Artificial intelligence plays a pivotal role in the detection of fake news by using algorithms and data analysis techniques to identify patterns indicative of misinformation. AI systems can process far more content than human reviewers could, analyzing news articles, social media posts, and other online content for signs of manipulation. By employing machine learning models trained on large datasets of both genuine and fake news, these systems can learn the statistical cues, such as word choice, writing style, and source characteristics, that tend to differentiate credible information from falsehoods.

One of the key advantages of using AI in this context is its ability to adapt and improve over time. As new forms of misinformation emerge and tactics evolve, AI systems can be retrained with updated data to enhance their detection capabilities. This adaptability is crucial in a landscape where fake news tactics are constantly changing.
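A minimal sketch of this adaptability, assuming a plain bag-of-words logistic regression trained by stochastic gradient descent (a common textbook choice, not any specific production system): each newly labeled example nudges the weights, so the model absorbs new examples without being rebuilt from scratch. The class name, tokenizer, learning rate, label convention, and toy examples are all hypothetical.

```python
import math
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase word tokens; deliberately simple for illustration."""
    return re.findall(r"[a-z']+", text.lower())


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineFakeNewsScorer:
    """Hypothetical bag-of-words logistic regression updated one example at a time.

    Label convention (an assumption): 1 = fake, 0 = genuine.
    """

    def __init__(self, learning_rate=0.5):
        self.learning_rate = learning_rate
        self.weights = defaultdict(float)
        self.bias = 0.0

    def predict_proba(self, text):
        """Estimated probability that `text` is fake."""
        z = self.bias + sum(self.weights.get(t, 0.0) for t in set(tokenize(text)))
        return sigmoid(z)

    def update(self, text, label):
        """One gradient step on the log-loss for a single newly labeled example."""
        error = self.predict_proba(text) - label
        for token in set(tokenize(text)):
            self.weights[token] -= self.learning_rate * error
        self.bias -= self.learning_rate * error


# Toy labeled stream; in practice new examples would keep arriving over time.
scorer = OnlineFakeNewsScorer()
stream = [
    ("shocking miracle cure doctors hate", 1),
    ("city council approves annual budget", 0),
    ("you won't believe this shocking secret", 1),
    ("central bank holds interest rates steady", 0),
]
for _ in range(20):
    for text, label in stream:
        scorer.update(text, label)
```

Because each update touches only the words in one example, newly labeled items can be folded in as they arrive; in practice teams often also retrain periodically on the full dataset.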

Moreover, AI can assist in flagging potentially misleading content for further review by human fact-checkers, thereby streamlining the process of identifying and addressing misinformation before it spreads widely.
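The hand-off to human fact-checkers can be sketched as a priority queue: items whose model score crosses a threshold are queued, and reviewers see the highest-scoring items first. The `ReviewQueue` class and the 0.7 threshold are hypothetical, and the sketch assumes the model outputs a score between 0 and 1.

```python
import heapq


class ReviewQueue:
    """Hypothetical queue of flagged items, highest estimated risk first."""

    def __init__(self, threshold=0.7):
        self.threshold = threshold  # illustrative cut-off; would be tuned on validation data
        self._heap = []             # entries are (-score, item_id) so the max score pops first

    def submit(self, item_id, fake_score):
        """Queue the item for human review if its score meets the threshold.

        Returns True when the item was flagged, False otherwise.
        """
        if fake_score >= self.threshold:
            heapq.heappush(self._heap, (-fake_score, item_id))
            return True
        return False

    def next_for_review(self):
        """Pop the highest-scoring flagged item as (item_id, score), or None if empty."""
        if not self._heap:
            return None
        neg_score, item_id = heapq.heappop(self._heap)
        return item_id, -neg_score


queue = ReviewQueue(threshold=0.7)
for item_id, score in [("post-1", 0.95), ("post-2", 0.30), ("post-3", 0.81)]:
    queue.submit(item_id, score)
```

Ordering by score means limited reviewer time goes to the content the model is most worried about; items below the threshold are simply not queued.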

Natural Language Processing and Machine Learning in Fake News Detection

Natural Language Processing (NLP) is a subfield of artificial intelligence that focuses on the interaction between computers and human language. In the realm of fake news detection, NLP techniques are employed to analyze the text of articles and social media posts for linguistic patterns that may indicate deception, such as sensational or emotionally charged wording, excessive punctuation and capitalization, or claims made without attribution.
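A minimal sketch of extracting a few such surface cues from plain text. The `CLICKBAIT_PHRASES` list and the feature names are hypothetical; cues like these are inputs for a downstream model, not proof of deception on their own.

```python
import re

# Hypothetical, hand-picked phrases for illustration only.
CLICKBAIT_PHRASES = ("you won't believe", "shocking", "what happens next", "doctors hate")


def linguistic_cues(text):
    """Extract simple surface cues sometimes associated with sensational writing."""
    words = re.findall(r"[A-Za-z']+", text)
    caps_words = [w for w in words if len(w) > 1 and w.isupper()]  # e.g. "SHOCKING"
    lowered = text.lower()
    return {
        "exclamations": text.count("!"),
        "all_caps_ratio": len(caps_words) / len(words) if words else 0.0,
        "clickbait_hits": sum(lowered.count(p) for p in CLICKBAIT_PHRASES),
        "word_count": len(words),
    }


features = linguistic_cues("SHOCKING: you won't believe what they found!!!")
```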

By examining such features, NLP algorithms can help assess the credibility of a source or the likelihood that a given article contains false information.

Machine learning complements NLP by enabling systems to learn from data without being explicitly programmed for every possible scenario. In fake news detection, machine learning models can be trained on labeled datasets containing examples of both true and false news articles. These models can then identify features that distinguish credible sources from unreliable ones. For example, a model might learn that articles from established news organizations tend to have a different writing style from those published by less reputable sources. When they are periodically retrained on new data, these models can become more proficient at detecting fake news.
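To make the training step concrete, here is a minimal multinomial Naive Bayes classifier, a classic baseline for text classification, written with only the Python standard library. The class name, labels, and four-sentence training set are hypothetical; a real detector would need a large, carefully labeled corpus and proper evaluation.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; deliberately simple for illustration."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFakeNews:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text):
        """Unnormalized log-posterior for each class."""
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # log prior
            for token in tokenize(text):
                if token not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[c][token]
                score += math.log((count + 1) / (self.total_words[c] + v))
            scores[c] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


model = NaiveBayesFakeNews().fit(
    [
        "shocking miracle cure doctors hate",
        "you won't believe this shocking secret",
        "city council approves annual budget",
        "central bank holds interest rates steady",
    ],
    ["fake", "fake", "genuine", "genuine"],
)
```

With so little data the model simply memorizes which words appeared under which label; the point is the mechanics of counting and smoothing, not the accuracy.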

Identifying Manipulative Tactics through AI

AI’s capabilities extend beyond mere detection; it also plays a crucial role in identifying manipulative tactics employed by purveyors of fake news. These tactics often include emotional appeals, sensationalism, and the use of misleading visuals or headlines designed to provoke strong reactions from audiences. By analyzing patterns in how information is presented, AI systems can uncover strategies that are commonly used to manipulate public perception.
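A crude way to quantify emotional appeals is lexicon matching: measure what fraction of a text’s words come from lists of fear- or outrage-related terms. The sketch assumes a tiny hypothetical `EMOTION_LEXICON`; practical systems rely on curated lexicons or trained classifiers, and a high score only says the language is charged, not that the content is false.

```python
import re
from collections import Counter

# Tiny, hypothetical lexicon for illustration only.
EMOTION_LEXICON = {
    "fear": {"danger", "threat", "deadly", "terrifying", "panic", "warning"},
    "outrage": {"outrageous", "disgrace", "betrayal", "scandal", "furious", "shameful"},
}


def emotion_profile(text):
    """Share of tokens that match each emotion category in the lexicon."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return {category: 0.0 for category in EMOTION_LEXICON}
    counts = Counter()
    for token in tokens:
        for category, words in EMOTION_LEXICON.items():
            if token in words:
                counts[category] += 1
    return {category: counts[category] / len(tokens) for category in EMOTION_LEXICON}


profile = emotion_profile("Deadly threat ignored in outrageous scandal")
```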

For instance, AI can analyze the emotional tone of articles and social media posts to determine whether they employ fear-mongering or outrage-inducing language. Such analyses can reveal trends in how certain topics are framed, allowing researchers and journalists to understand the underlying strategies used by those spreading misinformation. Additionally, AI can track the dissemination patterns of specific pieces of content across social media platforms, providing insights into how manipulative narratives gain traction and spread virally.
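Dissemination patterns are often summarized with simple cascade statistics: how many posts a cascade contains (size), how many re-share hops it reaches (depth), and the largest number of posts at any single hop (breadth). A minimal sketch, assuming share data arrives as hypothetical `(post_id, parent_id)` pairs that form a tree rooted at the original post:

```python
from collections import defaultdict, deque


def cascade_metrics(shares):
    """Size, depth and maximum breadth of a single share cascade.

    `shares` is a list of (post_id, parent_id) pairs where the original post
    has parent_id None. Assumes the shares form a tree (no cycles); only posts
    reachable from the original are counted.
    """
    children = defaultdict(list)
    roots = []
    for post_id, parent_id in shares:
        if parent_id is None:
            roots.append(post_id)
        else:
            children[parent_id].append(post_id)
    if len(roots) != 1:
        raise ValueError("expected exactly one original post")

    level_sizes = defaultdict(int)  # depth -> number of posts at that depth
    queue = deque([(roots[0], 0)])
    while queue:  # breadth-first walk from the original post
        node, depth = queue.popleft()
        level_sizes[depth] += 1
        for child in children[node]:
            queue.append((child, depth + 1))
    return {
        "size": sum(level_sizes.values()),
        "depth": max(level_sizes),
        "max_breadth": max(level_sizes.values()),
    }


shares = [("a", None), ("b", "a"), ("c", "a"), ("d", "b"), ("e", "b"), ("f", "c"), ("g", "d")]
metrics = cascade_metrics(shares)
```

Comparing such statistics across many cascades is one way to see whether a narrative spreads through long chains of re-shares or through a few large broadcasts.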

Challenges and Limitations of AI in Detecting Fake News

Despite its potential, the application of AI in detecting fake news is not without challenges and limitations. One significant hurdle is the inherent complexity of human language and communication: sarcasm, irony, and cultural references can make it difficult for AI systems to interpret meaning accurately. As a result, AI may misclassify genuine content as fake or let false content pass as genuine, which can itself mislead users about what counts as credible information.

Another challenge lies in the availability and quality of training data. For machine learning models to be effective, they require large datasets that accurately represent both true and false news articles. However, obtaining high-quality labeled data is difficult, in part because misinformation tactics are constantly evolving. Furthermore, biases present in training data can skew results, so that certain types of content are unfairly flagged as fake, or wrongly passed as legitimate, based on historical patterns rather than objective criteria.
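The two kinds of misclassification described above are usually tracked separately, because flagging genuine content (a false positive) and missing fake content (a false negative) carry different costs. A minimal sketch of computing these counts and the derived rates; the `error_counts` helper and the toy labels are hypothetical.

```python
def error_counts(y_true, y_pred, positive="fake"):
    """Confusion-matrix counts and derived rates, treating `positive` as the flagged class."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # fake content correctly flagged
        elif pred == positive:
            fp += 1  # genuine content wrongly flagged as fake
        elif truth == positive:
            fn += 1  # fake content that slipped through
        else:
            tn += 1  # genuine content correctly left alone
    return {
        "tp": tp, "fp": fp, "tn": tn, "fn": fn,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


report = error_counts(
    ["fake", "fake", "genuine", "genuine", "genuine", "fake"],
    ["fake", "genuine", "fake", "genuine", "genuine", "fake"],
)
```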

Ethical Considerations in AI-Based Fake News Detection

The deployment of AI in fake news detection raises several ethical considerations that must be addressed to ensure responsible use of technology. One primary concern is the potential for algorithmic bias, where AI systems may inadvertently reinforce existing prejudices or stereotypes present in their training data. This bias could lead to disproportionate targeting of specific groups or viewpoints while overlooking others, ultimately undermining the goal of promoting accurate information.
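One common way to check for this kind of bias is to compare error rates across groups, for example the false positive rate (the share of genuine items wrongly flagged as fake) for content from different outlets or communities. A minimal sketch; the group names and records are hypothetical, and a gap between groups is a prompt for investigation rather than proof of bias on its own.

```python
from collections import defaultdict


def false_positive_rate_by_group(records, positive="fake"):
    """False positive rate per group.

    `records` is an iterable of (group, true_label, predicted_label).
    Only items whose true label is not `positive` count toward a group's
    denominator; groups with no such items are omitted.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for group, truth, pred in records:
        if truth != positive:
            negatives[group] += 1
            if pred == positive:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}


rates = false_positive_rate_by_group([
    ("outlet_a", "genuine", "genuine"),
    ("outlet_a", "genuine", "fake"),
    ("outlet_a", "genuine", "genuine"),
    ("outlet_a", "genuine", "genuine"),
    ("outlet_b", "genuine", "fake"),
    ("outlet_b", "genuine", "fake"),
    ("outlet_b", "genuine", "genuine"),
    ("outlet_b", "fake", "fake"),
])
gap = max(rates.values()) - min(rates.values())  # spread between best- and worst-treated group
```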

Moreover, there is a delicate balance between censorship and free speech when it comes to moderating content online. While AI can help identify misleading information, there is a risk that it may also suppress legitimate discourse or dissenting opinions under the guise of combating fake news. Striking this balance requires careful consideration of the criteria used for flagging content and ensuring transparency in how decisions are made regarding what constitutes misinformation.

Case Studies of AI Successfully Detecting Fake News

Several case studies illustrate the successful application of AI in detecting fake news across various contexts. One notable example is Facebook’s use of AI algorithms to combat misinformation on its platform. The company has implemented machine learning models that analyze user-generated content for signs of falsehoods. When an article is flagged as potentially misleading, it is subjected to further review by human fact-checkers who assess its accuracy before determining whether it should be labeled or removed.

Another compelling case study involves the work done by researchers at MIT, who developed an AI system capable of identifying fake news articles with remarkable accuracy. Their model utilized a combination of linguistic features and social media engagement metrics to assess credibility. In tests conducted on a dataset of over 1 million articles, the system achieved an accuracy rate significantly higher than traditional methods used for fact-checking. This success underscores the potential for AI-driven approaches to enhance our ability to combat misinformation effectively.

Future Developments in AI for Fake News Detection

As technology continues to evolve, so too will the capabilities of AI in detecting fake news. Future developments may include more sophisticated algorithms that incorporate contextual understanding and real-time analysis of emerging trends in misinformation. For instance, advancements in deep learning could enable AI systems to better grasp nuances in language and detect subtler forms of manipulation that current models may overlook.

Additionally, collaborative efforts between tech companies, researchers, and policymakers will be essential in shaping the future landscape of AI-driven fake news detection. By sharing best practices and developing standardized frameworks for evaluating content credibility, stakeholders can work together to create a more informed public discourse while minimizing the risks associated with misinformation. As society navigates this complex terrain, ongoing research and innovation will play a critical role in harnessing AI’s potential to safeguard truth in an increasingly digital world.


FAQs

What is fake news and online manipulation?

Fake news refers to false or misleading information presented as news, often intended to deceive readers. Online manipulation involves tactics used to influence public opinion or behavior through deceptive or biased content on digital platforms.

How does AI help in detecting fake news?

AI detects fake news by analyzing patterns in text, images, and videos using natural language processing, machine learning, and computer vision. It identifies inconsistencies, verifies sources, and cross-references information with trusted databases to assess credibility.

What techniques does AI use to identify manipulated content?

AI employs techniques such as deep learning models to detect altered images or videos (deepfakes), sentiment analysis to spot biased language, and network analysis to uncover coordinated disinformation campaigns.
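The network-analysis idea can be illustrated with a deliberately simple heuristic: flag near-identical text posted by several distinct accounts within a few minutes of each other. This is only a sketch under stated assumptions (the input format, the five-minute window, and the three-account minimum are hypothetical); real coordination detection would weigh many more signals, since genuine users also repeat popular slogans.

```python
import re
from collections import defaultdict


def normalize(text):
    """Lowercase and keep only word characters so trivial edits still match."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def coordinated_clusters(posts, window_seconds=300, min_accounts=3):
    """Find texts posted by many distinct accounts within a short time window.

    `posts` is an iterable of (account_id, timestamp_seconds, text).
    Returns (normalized_text, sorted_accounts) for each suspicious text.
    """
    by_text = defaultdict(list)
    for account, timestamp, text in posts:
        by_text[normalize(text)].append((timestamp, account))

    clusters = []
    for text, items in by_text.items():
        items.sort()
        best = set()
        start = 0
        for end in range(len(items)):  # sliding window over time-sorted posts
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {account for _, account in items[start:end + 1]}
            if len(accounts) > len(best):
                best = accounts
        if len(best) >= min_accounts:
            clusters.append((text, sorted(best)))
    return clusters


posts = [
    ("u1", 0, "Vote is RIGGED! Share now"),
    ("u2", 40, "vote is rigged share now"),
    ("u3", 90, "Vote is rigged... share NOW"),
    ("u4", 5000, "vote is rigged share now"),
    ("u5", 100, "Lovely weather today"),
]
```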

Can AI completely eliminate fake news?

No. AI cannot completely eliminate fake news, but it can reduce its spread by flagging suspicious content and assisting human moderators. The evolving nature of misinformation requires continuous updates and human oversight.

What are the challenges AI faces in detecting fake news?

Challenges include the subtlety of misinformation, the use of sophisticated manipulation techniques, language nuances, and the risk of false positives or censorship. AI systems also require large, diverse datasets to improve accuracy.

How do AI systems verify the authenticity of news sources?

AI systems cross-check information against reputable databases, analyze the history and credibility of sources, and evaluate the consistency of reported facts to verify authenticity.
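Cross-checking a claim against a database of already reviewed claims can be sketched with the standard library’s `difflib`. The two-entry `FACT_CHECKS` table, the `match_claim` helper, and the 0.8 similarity cut-off are hypothetical; character-level similarity is a crude stand-in, and practical systems are more likely to compare meaning, for example with sentence embeddings.

```python
import difflib

# Hypothetical mini database of previously fact-checked claims and their verdicts.
FACT_CHECKS = {
    "drinking bleach cures viral infections": "false",
    "the eiffel tower is in paris": "true",
}


def match_claim(claim, database=FACT_CHECKS, min_ratio=0.8):
    """Return (known_claim, verdict, similarity) for the closest match, or None."""
    normalized = " ".join(claim.lower().split())
    best_claim, best_ratio = None, 0.0
    for known in database:
        ratio = difflib.SequenceMatcher(None, normalized, known).ratio()
        if ratio > best_ratio:
            best_claim, best_ratio = known, ratio
    if best_claim is not None and best_ratio >= min_ratio:
        return best_claim, database[best_claim], best_ratio
    return None
```

A claim with no close match returns `None`, which in a real workflow would mean "not yet checked" rather than "true".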

Is AI detection of fake news used by social media platforms?

Yes, many social media platforms integrate AI tools to detect and limit the spread of fake news and manipulated content, often combining AI with human review to enforce community standards.

How can individuals benefit from AI in combating fake news?

Individuals can use AI-powered fact-checking tools and browser extensions to assess the reliability of news articles and social media posts, helping them make informed decisions about the information they consume.