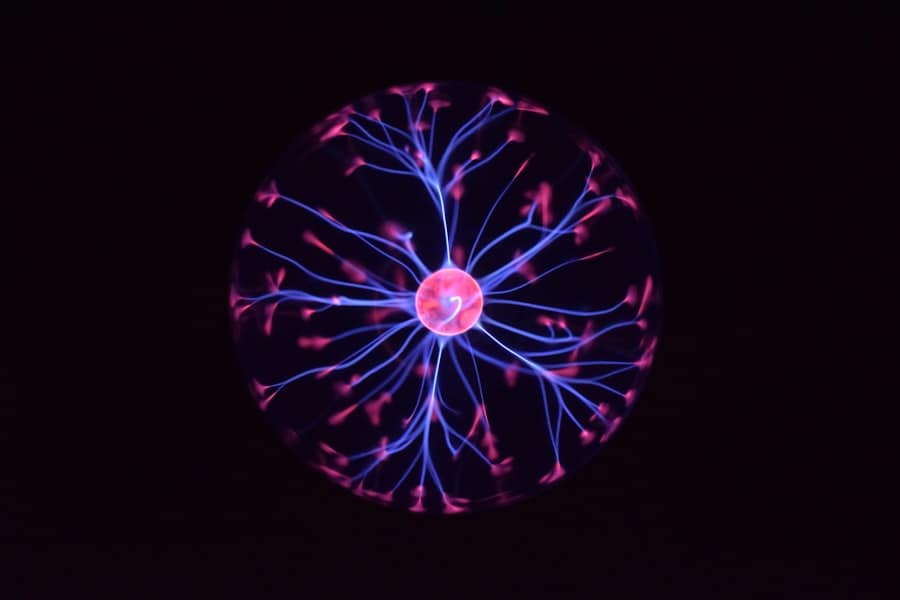

Neuromorphic computing represents a paradigm shift in the way we approach computation, drawing inspiration from the architecture and functioning of the human brain. Unlike traditional computing systems that rely on the von Neumann architecture, which separates memory and processing units, neuromorphic systems integrate these functions in a manner akin to biological neural networks. Because this integration reduces the need to shuttle data back and forth between memory and processor, it allows for more efficient processing of information, particularly in tasks that require pattern recognition, sensory processing, and real-time decision-making.

The term “neuromorphic” itself was coined in the late 1980s by Carver Mead, who envisioned circuits that could mimic the neural structures of the brain. At its core, neuromorphic computing seeks to replicate the brain’s ability to process vast amounts of information with remarkable efficiency and low power consumption. This is achieved through specialized hardware that implements spiking neural networks (SNNs), in which neurons communicate through discrete spikes of electrical activity rather than continuous signals. These spikes are analogous to the action potentials in biological neurons, allowing for a more dynamic and event-driven approach to computation.
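To make spiking computation concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified neuron model in SNN research. It is not the circuit used by any particular chip; the `LIFNeuron` class, the `simulate` function, and all parameter values are hypothetical and chosen only for illustration. The membrane potential leaks toward rest, accumulates input, and emits a discrete spike (then resets) when it crosses a threshold.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (illustrative parameters)."""
    tau: float = 20.0          # membrane time constant in ms (hypothetical)
    v_rest: float = 0.0        # resting potential (arbitrary units)
    v_threshold: float = 1.0   # potential at which a spike is emitted
    v_reset: float = 0.0       # potential immediately after a spike
    dt: float = 1.0            # simulation time step in ms
    v: float = 0.0             # current membrane potential

    def step(self, input_current: float) -> bool:
        """Advance one time step and return True if the neuron spikes."""
        # Euler step of dv/dt = (v_rest - v) / tau + I: leak toward rest, add input.
        self.v += self.dt * ((self.v_rest - self.v) / self.tau + input_current)
        if self.v >= self.v_threshold:
            self.v = self.v_reset  # discrete spike, then reset
            return True
        return False


def simulate(currents: list[float]) -> list[int]:
    """Feed one input value per time step and return the steps at which spikes occur."""
    neuron = LIFNeuron()
    return [t for t, current in enumerate(currents) if neuron.step(current)]


if __name__ == "__main__":
    print(simulate([0.0] * 50))  # [] (no input, no spikes)
    print(simulate([0.1] * 50))  # [13, 27, 41] (constant input, regular spike train)
```

A stronger input drives the potential to threshold sooner, so information is carried by the timing and rate of spikes rather than by a continuous output value.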

Key Takeaways

- Neuromorphic computing aims to replicate the brain’s neural architecture to enhance AI efficiency and processing.

- It offers advantages like low power consumption, real-time learning, and improved pattern recognition.

- Challenges include hardware complexity, scalability issues, and limited programming frameworks.

- Applications span robotics, sensory processing, autonomous systems, and adaptive AI models.

- Ongoing research focuses on overcoming these limitations, while future prospects raise important ethical considerations.

Mimicking the Human Brain

The human brain is an incredibly complex organ, consisting of approximately 86 billion neurons interconnected by trillions of synapses. Each neuron can form thousands of connections with other neurons, creating a vast network capable of processing information in parallel. Neuromorphic computing aims to replicate this intricate structure and functionality by employing hardware that mimics the behavior of biological neurons and synapses.

This involves not only the design of circuits that can generate spikes but also the development of algorithms that can leverage these spikes for learning and decision-making. One of the key features of neuromorphic systems is their ability to learn from experience, much like humans do. This is often achieved through mechanisms such as spike-timing-dependent plasticity (STDP), where the strength of a synaptic connection is adjusted based on the relative timing of spikes in the connected neurons: the synapse is typically strengthened when the presynaptic neuron fires shortly before the postsynaptic neuron and weakened when the order is reversed.

Such learning rules allow neuromorphic systems to adapt to new information and improve their performance over time. For instance, researchers have demonstrated that neuromorphic chips can learn to recognize patterns in sensory data, such as visual or auditory inputs, by adjusting their synaptic weights in response to stimuli. This capability not only enhances the efficiency of data processing but also enables more robust and flexible AI systems.
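The sketch below shows one common pair-based formulation of STDP, in which the weight change decays exponentially with the gap between pre- and postsynaptic spikes. The amplitudes (`a_plus`, `a_minus`), time constants, all-to-all spike pairing, and weight bounds are illustrative assumptions; neuromorphic chips and simulators implement their own variants of the rule.

```python
import math


def stdp_delta(t_pre: float, t_post: float,
               a_plus: float = 0.01, a_minus: float = 0.012,
               tau_plus: float = 20.0, tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: potentiate, more strongly for shorter gaps.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: depress, again weighted by how close the spikes were.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w: float, pre_spikes: list[float], post_spikes: list[float],
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Sum the contributions of all spike pairs, then clip the weight to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            w += stdp_delta(t_pre, t_post)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))  # causal pair: weight rises
    print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))  # anti-causal pair: weight falls
```

Because each update depends only on spike times at the two ends of a synapse, rules of this kind are local, which is what makes them practical to implement on-chip.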

Advantages of Neuromorphic Computing for AI

One of the most significant advantages of neuromorphic computing lies in its energy efficiency. Traditional AI models, particularly those based on deep learning, often require substantial computational resources and energy, especially during training. In contrast, neuromorphic systems are event-driven: circuits do work only when spikes occur, so for suitable, sparse workloads they can consume far less power, in some reported cases by orders of magnitude.
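Where these savings come from can be illustrated by counting work rather than measuring energy. This hypothetical sketch compares a dense layer that performs every multiply-accumulate on each time step with an event-driven layer that processes only the inputs that actually spiked. The fully connected layer, its sizes, and the 2% spike rate are assumptions; real energy savings also depend on memory traffic and circuit design.

```python
import random


def dense_ops(num_inputs: int, num_outputs: int, timesteps: int) -> int:
    """Multiply-accumulates for a dense layer evaluated on every time step."""
    return num_inputs * num_outputs * timesteps


def event_driven_ops(spikes: list[list[int]], num_outputs: int) -> int:
    """Synaptic updates when only inputs that spiked trigger any work.

    spikes[t] lists the indices of inputs that fired at time step t; each spike
    fans out to every output neuron (fully connected, for simplicity).
    """
    return sum(len(active) * num_outputs for active in spikes)


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical layer: 1000 inputs, 100 outputs, 100 steps, 2% of inputs spike per step.
    n_in, n_out, steps, rate = 1000, 100, 100, 0.02
    spikes = [[i for i in range(n_in) if random.random() < rate] for _ in range(steps)]
    dense = dense_ops(n_in, n_out, steps)
    sparse = event_driven_ops(spikes, n_out)
    print(f"dense: {dense:,} ops, event-driven: {sparse:,} ops ({dense / sparse:.0f}x fewer)")
```

With roughly 2% of inputs active per step, the event-driven count comes out around 50 times smaller; as activity rises toward every input spiking on every step, the advantage disappears.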

This efficiency is particularly important for mobile and embedded applications where battery life is a concern. For example, neuromorphic chips could enable real-time processing in devices such as drones or autonomous vehicles within a small power budget.

Neuromorphic computing can also improve speed and responsiveness when processing sensory information. Like the brain, which processes inputs in parallel, neuromorphic hardware can react rapidly to incoming events, which is essential in dynamic environments. This capability is particularly beneficial in robotics, where quick responses to environmental changes are critical for safe and effective operation. For instance, a neuromorphic chip could enable a robotic arm to adjust its movements in real time based on visual feedback, enhancing its dexterity and precision.
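Part of this responsiveness in event-driven sensing comes from processing a change as soon as it is reported, rather than waiting for the next fixed sampling interval. The arithmetic below is a hypothetical sketch: it assumes identical processing time in both cases, ignores sensor readout and communication delays, and uses illustrative timings.

```python
import math


def frame_based_latency(event_ms: float, frame_period_ms: float, processing_ms: float) -> float:
    """Wait for the next frame boundary, then process the captured frame."""
    next_frame_ms = math.ceil(event_ms / frame_period_ms) * frame_period_ms
    return (next_frame_ms - event_ms) + processing_ms


def event_driven_latency(processing_ms: float) -> float:
    """Process the change as soon as the sensor reports it (no frame wait)."""
    return processing_ms


if __name__ == "__main__":
    # Hypothetical numbers: 40 ms frames (25 fps), 2 ms processing, change at t = 10 ms.
    print(frame_based_latency(10.0, 40.0, 2.0))  # 32.0
    print(event_driven_latency(2.0))             # 2.0
```

In the frame-based case, the worst-case wait approaches a full frame period, whereas the event-driven delay is bounded by processing time alone under these assumptions.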

Challenges and Limitations

Despite its promising advantages, neuromorphic computing faces several challenges and limitations that must be addressed for widespread adoption. One significant hurdle is the complexity of designing and fabricating neuromorphic hardware. Creating circuits that accurately mimic the behavior of biological neurons and synapses requires advanced materials and manufacturing techniques that are still under development. Additionally, integrating these components into existing computing architectures poses further challenges, as traditional software frameworks may not be compatible with neuromorphic systems.

Another limitation is the current state of algorithms designed for neuromorphic computing. While there has been progress in developing learning rules and models that leverage spiking neural networks, many existing AI algorithms are optimized for conventional architectures. This mismatch can hinder the performance of neuromorphic systems when applied to tasks that require sophisticated learning techniques or large-scale data processing. Researchers are actively exploring new algorithms tailored specifically for neuromorphic hardware, but this area remains a work in progress.
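One concrete example of this mismatch is data representation: conventional models operate on continuous-valued tensors, whereas spiking hardware consumes spike trains, so inputs must first be encoded. The sketch below shows rate coding, a common scheme in which each value sets a per-step spike probability (a Bernoulli approximation of Poisson spiking). The function names are hypothetical, and rate coding is only one of several encoding schemes.

```python
import random
from typing import List, Optional


def rate_encode(values: List[float], timesteps: int,
                seed: Optional[int] = None) -> List[List[int]]:
    """Encode values in [0, 1] as binary spike trains (one row per time step).

    At every step, input i spikes with probability values[i], so larger
    values produce more spikes on average.
    """
    if any(not 0.0 <= v <= 1.0 for v in values):
        raise ValueError("values must lie in [0, 1]")
    rng = random.Random(seed)
    return [[1 if rng.random() < v else 0 for v in values]
            for _ in range(timesteps)]


def decode_rates(spike_trains: List[List[int]]) -> List[float]:
    """Estimate each input's value as the fraction of time steps in which it spiked."""
    steps = len(spike_trains)
    return [sum(column) / steps for column in zip(*spike_trains)]


if __name__ == "__main__":
    trains = rate_encode([0.1, 0.5, 0.9], timesteps=200, seed=42)
    print(decode_rates(trains))  # close to [0.1, 0.5, 0.9]; exact values depend on the seed
```

Recovering a value precisely takes many time steps, which is one reason naively porting a conventional model to spiking hardware can cost latency or accuracy.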

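Applications of Neuromorphic Computing in AI

The following comparison summarizes how neuromorphic and traditional computing differ on metrics relevant to AI applications: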

| Metric | Description | Neuromorphic Computing | Traditional Computing | Impact on AI |
|---|---|---|---|---|
| Energy Efficiency | Energy per basic operation | Tens of picojoules per synaptic event reported for chips such as TrueNorth and Loihi | On the order of picojoules per arithmetic operation, but hundreds of picojoules or more per off-chip memory access | Savings come mainly from sparse, event-driven activity and reduced data movement, enabling low-power AI in edge devices |
| Latency | Time delay in processing neural signals | Sub-millisecond responses possible for event-driven workloads | Milliseconds to seconds, depending on workload | Improves real-time AI responsiveness |
| Scalability | Neuron and synapse counts | About 1 million neurons and 256 million synapses per chip (TrueNorth); multi-chip systems scale further | Limited by Moore’s law and power constraints | Supports large-scale brain-inspired AI models |
| Computational Paradigm | Type of processing architecture | Event-driven, parallel, asynchronous | Clock-driven, sequential or parallel | More biologically plausible AI computations |
| Learning Capability | On-chip learning and adaptation | Supports local learning rules such as STDP | Typically requires off-chip training | Enables adaptive and autonomous AI systems |
| Hardware Examples | Representative chips | IBM TrueNorth, Intel Loihi | CPUs, GPUs, TPUs | Specialized hardware for efficient AI processing |

The potential applications of neuromorphic computing in AI are vast and varied, spanning fields such as robotics, healthcare, and environmental monitoring. In robotics, for instance, neuromorphic chips can enhance sensory processing capabilities, enabling robots to navigate complex environments more effectively. By mimicking the brain’s ability to process visual and auditory information rapidly, these systems can improve a robot’s ability to interact with its surroundings and make real-time decisions based on sensory input.

In healthcare, neuromorphic computing holds promise for advancing medical diagnostics and monitoring systems. For example, neuromorphic sensors and processors could be used to analyze physiological signals such as electrocardiograms (ECGs) or electroencephalograms (EEGs) in real time. By detecting patterns and anomalies quickly, these systems could help healthcare professionals diagnose conditions or predict adverse events before they occur. Furthermore, the low power consumption of neuromorphic devices makes them suitable for wearable health monitoring applications that require continuous data collection without frequent recharging.
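Event-based sensing is one way such devices can keep power low: instead of streaming every sample, a sensor emits an event only when the signal changes by a set amount. The following is a minimal sketch of this delta (send-on-delta) encoding; the `delta_encode` function, the threshold, and the synthetic bump standing in for a heartbeat are hypothetical, not real ECG data or any specific device's scheme.

```python
from typing import List, Tuple


def delta_encode(samples: List[float], threshold: float) -> List[Tuple[int, int]]:
    """Convert a sampled signal into (sample_index, polarity) events.

    An UP event (+1) is emitted each time the signal rises `threshold` above the
    current reference level and a DOWN event (-1) each time it falls `threshold`
    below it; the reference moves one threshold step per event.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not samples:
        return []
    events: List[Tuple[int, int]] = []
    reference = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        while x - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events


if __name__ == "__main__":
    # Flat baseline with one brief synthetic bump (hypothetical, not real ECG data).
    signal = [0.0] * 10 + [0.5, 1.0, 0.5] + [0.0] * 10
    events = delta_encode(signal, threshold=0.25)
    print(f"{len(signal)} samples -> {len(events)} events")  # 23 samples -> 8 events
```

A flat stretch of signal produces no events at all, so downstream spiking hardware has nothing to process between beats.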

Current Developments and Research

Research into neuromorphic computing has gained significant momentum in recent years, with numerous academic institutions and tech companies investing resources into this field. Notable projects include IBM’s TrueNorth chip, which features over a million programmable neurons and 256 million synapses and is designed for low-power cognitive computing tasks. Similarly, Intel’s Loihi chip supports on-chip learning through programmable synaptic plasticity rules in its spiking neural network, allowing it to adapt its behavior based on experience.

In addition to hardware advancements, researchers are also focusing on developing software frameworks that facilitate the programming and deployment of neuromorphic systems. Initiatives like NEST and Brian provide platforms for simulating spiking neural networks, enabling researchers to experiment with different architectures and learning algorithms. These tools are crucial for advancing our understanding of how neuromorphic systems can be effectively utilized in various applications.
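NEST and Brian each have their own APIs and update strategies, which are not reproduced here. As a standalone illustration of event-driven simulation, the hypothetical sketch below keeps spike arrivals in a priority queue and updates a neuron only when an event reaches it. The non-leaky neurons, fixed delays, and example network are simplifying assumptions.

```python
import heapq
from collections import defaultdict


def simulate_events(connections, thresholds, inputs, t_max):
    """Event-driven simulation of a tiny network of non-leaky integrate-and-fire units.

    connections: {source: [(target, weight, delay_ms), ...]}
    thresholds:  {neuron: firing threshold}
    inputs:      [(time_ms, target, weight), ...] external spike arrivals
    Returns the (time_ms, neuron) spikes emitted up to t_max, in time order.
    """
    queue = list(inputs)            # pending spike arrivals, ordered by time
    heapq.heapify(queue)
    potential = defaultdict(float)  # membrane potential per neuron, starts at 0
    spikes = []
    while queue:
        t, neuron, weight = heapq.heappop(queue)
        if t > t_max:
            break
        # Work happens only when an event arrives; idle neurons cost nothing.
        potential[neuron] += weight
        if potential[neuron] >= thresholds[neuron]:
            potential[neuron] = 0.0  # reset after firing
            spikes.append((t, neuron))
            for target, w, delay in connections.get(neuron, []):
                heapq.heappush(queue, (t + delay, target, w))
    return spikes


if __name__ == "__main__":
    net = {"A": [("B", 1.0, 1.0)], "B": [("C", 1.0, 1.0)]}
    th = {"A": 1.0, "B": 2.0, "C": 1.0}  # B needs two inputs before it fires
    print(simulate_events(net, th, [(0.0, "A", 1.0), (5.0, "A", 1.0)], t_max=100.0))
    # [(0.0, 'A'), (5.0, 'A'), (6.0, 'B'), (7.0, 'C')]
```

Real simulators add membrane leak, richer neuron models, and plasticity, but the core idea of scheduling work by spike arrival rather than by a global clock is the same one that event-driven hardware exploits.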

Future Implications and Possibilities

The future implications of neuromorphic computing are profound, particularly as we continue to explore the intersection between biology and technology. As researchers refine neuromorphic architectures and algorithms, we may witness breakthroughs in AI capabilities that were previously thought unattainable. For instance, advancements in neuromorphic systems could lead to more sophisticated autonomous agents capable of navigating complex environments with minimal human intervention.

Moreover, as our understanding of the brain deepens through neuroscience research, we may uncover new principles that can be applied to enhance neuromorphic computing further. This synergy between neuroscience and computer science could pave the way for innovative approaches to problem-solving across various domains, from climate modeling to personalized medicine. The potential for creating machines that not only mimic human cognition but also exhibit adaptive learning behaviors opens up exciting avenues for research and application.

Ethical Considerations and Concerns

As with any emerging technology, neuromorphic computing raises important ethical considerations that must be addressed as it develops. One primary concern revolves around the implications of creating machines that mimic human cognitive processes. The potential for such systems to make autonomous decisions raises questions about accountability and transparency. If a neuromorphic system were to make a decision that results in harm or unintended consequences, determining responsibility could become complex.

Additionally, there are concerns regarding privacy and data security when deploying neuromorphic systems in sensitive areas such as healthcare or surveillance. The ability of these systems to process vast amounts of personal data raises questions about how this information is collected, stored, and used. Ensuring that ethical guidelines are established and adhered to will be crucial as we navigate the integration of neuromorphic computing into society.

In conclusion, while neuromorphic computing holds immense potential for revolutionizing artificial intelligence through its brain-inspired architecture and efficiency, it also presents challenges that must be carefully managed. As research continues to advance in this field, it will be essential to balance innovation with ethical considerations to ensure that these technologies benefit society as a whole.

FAQs

What is neuromorphic computing?

Neuromorphic computing is a type of computing architecture designed to mimic the neural structure and functioning of the human brain. It uses specialized hardware and algorithms to replicate the way neurons and synapses process information, aiming to improve efficiency and performance in tasks such as pattern recognition and learning.

How does neuromorphic computing differ from traditional computing?

Traditional computing relies on the von Neumann architecture, which separates memory and processing units, leading to bottlenecks in data transfer. Neuromorphic computing integrates memory and processing in a way that resembles biological neural networks, enabling parallel processing and lower power consumption.

What are the potential benefits of neuromorphic computing for AI?

Neuromorphic computing can offer significant advantages for AI, including faster processing speeds, reduced energy consumption, improved real-time learning capabilities, and enhanced ability to handle unstructured data. These benefits make it suitable for applications like robotics, autonomous systems, and sensory data analysis.

What types of hardware are used in neuromorphic computing?

Neuromorphic hardware typically includes specialized chips such as spiking neural network processors, as well as devices such as memristors and analog circuits designed to emulate synaptic activity. Examples include IBM’s TrueNorth chip and Intel’s Loihi processor.

Is neuromorphic computing widely used in current AI applications?

While neuromorphic computing is a promising field, it is still largely in the research and development phase. Some experimental applications exist, but widespread commercial adoption is limited due to challenges in hardware design, programming models, and integration with existing AI frameworks.

What challenges does neuromorphic computing face?

Key challenges include developing standardized programming tools, creating scalable hardware architectures, ensuring compatibility with existing AI algorithms, and overcoming manufacturing complexities. Additionally, understanding how to best leverage neuromorphic systems for various AI tasks remains an active area of research.

Can neuromorphic computing improve energy efficiency in AI systems?

Yes, one of the main advantages of neuromorphic computing is its potential to significantly reduce energy consumption compared to traditional AI hardware, by mimicking the brain’s efficient processing methods and minimizing data movement.

What future developments are expected in neuromorphic computing?

Future developments may include more advanced neuromorphic chips with higher neuron and synapse counts, improved integration with machine learning frameworks, and broader adoption in edge computing devices. Research is also focused on enhancing learning algorithms that can fully exploit neuromorphic architectures.